Welcome to Mix

Nuance Mix enables you to tackle complex conversational challenges using intuitive DIY tools, backed by Nuance’s industry-leading speech and AI technologies.

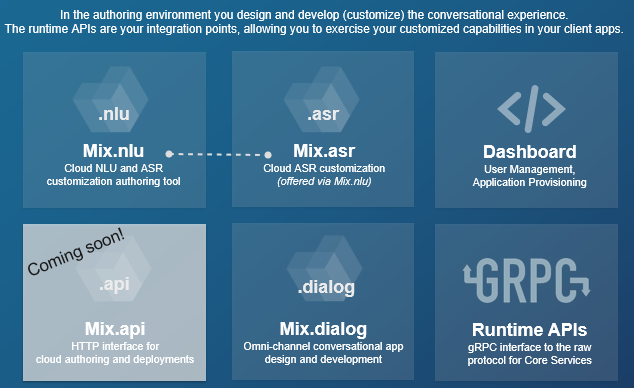

Using Mix's tool set, you define the use cases, the concepts (entities) and parameters (values), and the variety of ways consumers will interact with your app or service. This process is called authoring because you are creating (authoring) the speech (ASR), natural language understanding (NLU), and dialog resources needed to power your client applications.

Each of your projects is maintained in one place, the Mix Dashboard, where you can quickly deploy your application in a runtime environment. Your application can then interact with the ASR, NLU, and dialog resources you created using any one of the programming languages supported by the open source gRPC framework. Mix offers four runtime service APIs: ASR, NLU, and Dialog, as well as TTS (text-to-speech).

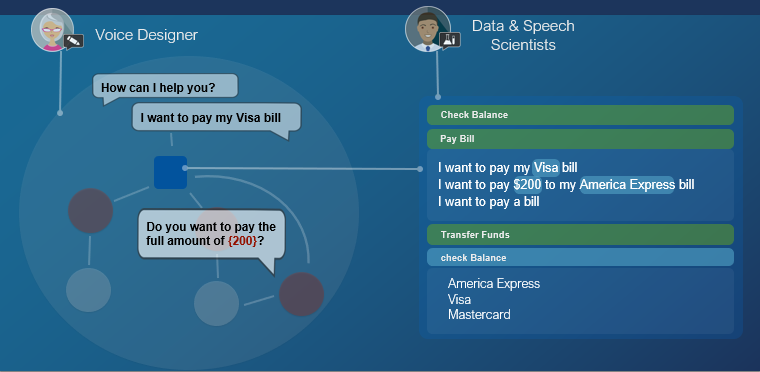

No matter your role in your organization—whether as a business stakeholder, voice designer, data/speech scientist, developer, or quality assurance tester—Mix helps you craft meaningful, interactive conversations with your users.

Mix fundamentals

Mix is an enterprise-grade software as a service (SaaS) platform that helps you create advanced conversational experiences for your customers.

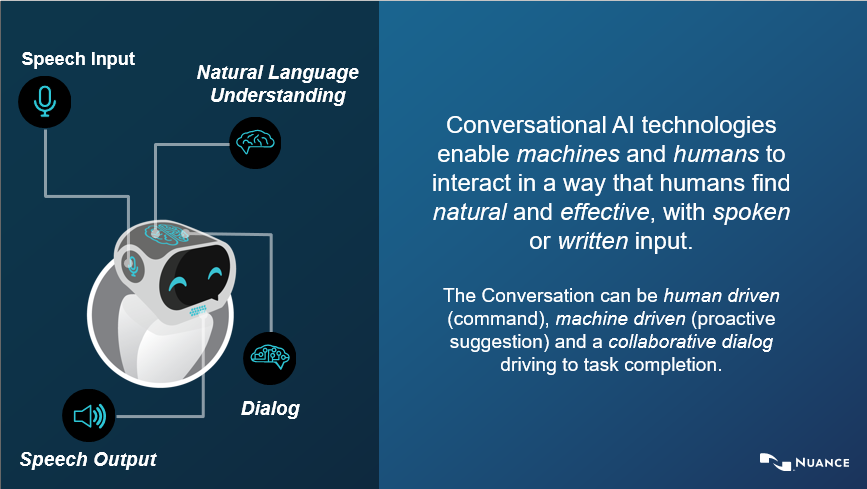

Whether you engage with customers via a web application, mobile app, interactive voice response (IVR) system, smart speakers, and/or chatbots, the conversation starts with natural language understanding.

Natural language understanding enables customers to make inquiries without being constrained by a fixed set of responses. This conversational experience allows individuals to self-serve and successfully resolve issues while interacting with your system naturally, in their own words.

Before you can design a multichannel conversational interaction (dialog), you need to understand what your customers mean, not just what they say, tap, or type.

NLU

Natural Language Understanding (NLU) is the ability to process what a user says (or taps or types) to figure out how it maps to actions the user intends. The application uses the result from NLU to take the appropriate action.

For example, suppose you have developed an application for ordering coffee. You want users of the application to be able to make requests, such as:

- "I'd like an iced vanilla latte."

- "Cup o' joe."

- "How much is a large cappuccino?"

- "What's in the espresso macchiato?"

Your users have countless ways of expressing their requests. To respond effectively, your application first needs to recognize the users’ actual words. When users type their own words or tap a selection, this step is easy. But when they speak, their voice audio needs to be turned into text by a process called Automatic Speech Recognition, or ASR.

Once your application has collected the words spoken by the user, it then needs to map these words to their underlying meaning, or intention, in a form the application can understand. This process is called Natural Language Understanding, or NLU.

Mix.nlu provides the complete flexibility to build your own natural language processing domain, and the power to continuously refine and evolve your NLU based on real-world usage data.

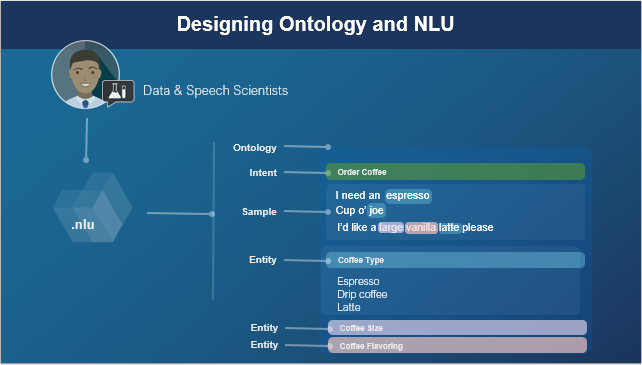

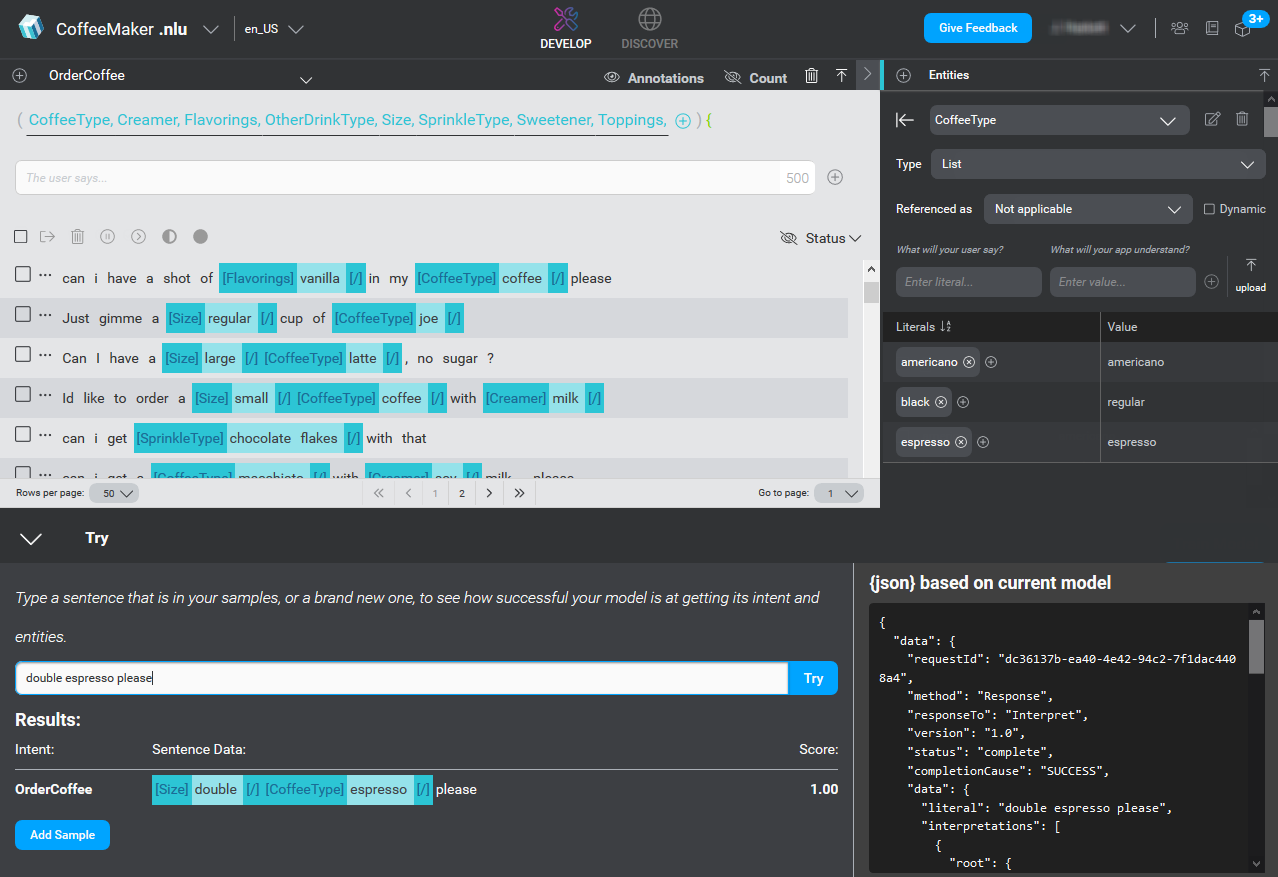

Developing an NLU model

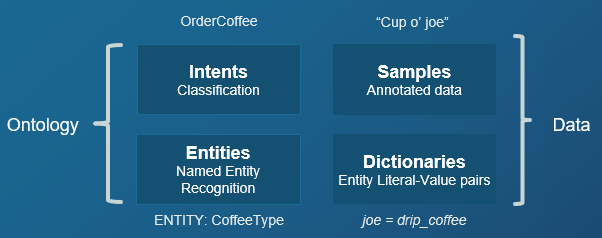

Within the Mix.nlu tool, your main activity is preparing data consisting of sample sentences that are representative of what your users say or do, and that are annotated to show how the sentences map to intended actions.

When annotating a sample sentence, you indicate:

- The function to be performed. For example, to order a drink, or to find out what is in one.

- The parameters for that function. For example, at minimum the type of drink and the size. You might also, depending on the needs of your business (and, therefore, the application), collect the type of milk or flavoring offered, and the temperature (iced or hot).

In "NLU-speak," functions are referred to as intents, and parameters are referred to as entities.

The example below shows a sample sentence, and then the same sentence annotated to show its entities:

- I'd like an iced vanilla latte

- I'd like an [Temperature]iced[/] [Flavor]vanilla[/] [CoffeeType]latte[/]

In the example, the intent of the whole sentence is orderCoffee and the entities within the sentence are [Temperature], [Flavor], and [CoffeeType]. By convention, entity names are enclosed in square brackets, and the end of each annotated span is marked with [/].

Together, intents and entities define the application's (or project's) ontology.
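To make the bracket notation concrete, here is a minimal Python sketch that splits an annotated sentence into its plain text and its (entity, literal) pairs. It assumes only the `[Entity]literal[/]` convention shown above; the helper name `parse_annotation` is hypothetical, and this is not how Mix stores annotations internally (NLU models are exchanged as .trsx files).

```python
import re

# One annotated span, e.g. "[Flavor]vanilla[/]": group 1 is the entity name,
# group 2 is the literal text. Assumes the bracket convention shown above.
SPAN = re.compile(r"\[(\w+)\](.*?)\[/\]")


def parse_annotation(annotated: str) -> tuple[str, list[tuple[str, str]]]:
    """Return the plain sentence and its (entity, literal) pairs, in order."""
    entities = [(m.group(1), m.group(2)) for m in SPAN.finditer(annotated)]
    # Replace each annotated span with just its literal to recover the raw text.
    plain = SPAN.sub(lambda m: m.group(2), annotated)
    return plain, entities


if __name__ == "__main__":
    text, found = parse_annotation(
        "I'd like an [Temperature]iced[/] [Flavor]vanilla[/] [CoffeeType]latte[/]"
    )
    print(text)   # I'd like an iced vanilla latte
    print(found)  # [('Temperature', 'iced'), ('Flavor', 'vanilla'), ('CoffeeType', 'latte')]
```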

The power of NLU models

In practice, it is impossible to list all of the ways that people order coffee. But given enough annotated representative sample sentences, your model can understand new sentences that it has never encountered before.

In Mix.nlu, this process is called training the NLU model. Once trained, the model is able to interpret the intended meaning of input such as utterances and selections, and provide that information back to the application as structured data in the form of a JSON object. Your application can then parse the data and take the appropriate action.
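The sketch below shows what "parse the data and take the appropriate action" can look like in a client. The JSON shape (`intent` plus a flat `entities` object) is a simplified, hypothetical stand-in; the actual NLU as a Service result is structured differently, so check the runtime API reference before relying on any field name. The `choose_action` function and the default size are also assumptions for illustration.

```python
import json

# Simplified, hypothetical NLU result. The actual NLU as a Service response
# has a different, richer structure (for example, confidence scores).
SAMPLE_RESULT = """
{
  "intent": "orderCoffee",
  "entities": {"Size": "large", "CoffeeType": "latte"}
}
"""


def choose_action(result_json: str) -> str:
    """Parse an NLU result and decide what the application should do."""
    result = json.loads(result_json)
    entities = result.get("entities", {})
    if result.get("intent") == "orderCoffee":
        size = entities.get("Size", "regular")  # assumed default size
        drink = entities.get("CoffeeType", "coffee")
        return f"Ordering a {size} {drink}"
    return "Sorry, I didn't understand that."


print(choose_action(SAMPLE_RESULT))  # Ordering a large latte
```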

Iterate! Iterate! Iterate!

Training a model is an iterative process: you start with a small number of samples, train and test a model, add more samples, train and test another version of the model, and continue adding data and retraining the model through many iterations.

Here is a summary of the general process:

- What can the application do? Think about what you want your application to do.

- What types of things will users say? Add sample sentences that users might say to instruct the application to do those things.

- What does the user mean? Annotate the sample sentences with intents and entities.

- Train a simple model. Use your annotated sentences to train an initial NLU model.

- How well does it work? Test the simple system with new sentences; continue adding annotated sentences to the training data.

- Test it with real users. Build a version for users to try out; collect usage data; use the data to continue refining the model.
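Answering "How well does it work?" usually means scoring the model on sentences it was not trained on. The sketch below assumes a hypothetical `predict` function (here a toy keyword rule standing in for a trained Mix.nlu model) and hypothetical intent names `getPrice` and `getIngredients`; it only computes the fraction of held-out sentences whose predicted intent matches the annotation.

```python
from typing import Callable


def intent_accuracy(predict: Callable[[str], str],
                    test_set: list[tuple[str, str]]) -> float:
    """Fraction of held-out sentences whose predicted intent matches the annotation."""
    if not test_set:
        raise ValueError("test_set must not be empty")
    correct = sum(1 for sentence, expected in test_set if predict(sentence) == expected)
    return correct / len(test_set)


def toy_predict(sentence: str) -> str:
    """Toy keyword rule standing in for a trained NLU model (hypothetical)."""
    return "getPrice" if "how much" in sentence.lower() else "orderCoffee"


HELD_OUT = [
    ("Cup o' joe.", "orderCoffee"),
    ("How much is a large cappuccino?", "getPrice"),
    ("What's in the espresso macchiato?", "getIngredients"),
]

print(f"{intent_accuracy(toy_predict, HELD_OUT):.2f}")  # 0.67
```

The misclassified sentence ("What's in the espresso macchiato?") is exactly the kind of sample to annotate and add to the training data in the next iteration.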

Dialog

From an end-user's perspective, a dialog-enabled app or device is one that understands natural language, can respond in kind, and, where appropriate, can extend the conversation by following up the user's input with appropriate questions and suggestions.

In other words, it's a system designed for your customers that integrates relevant, task-specific, and user-specific knowledge and intelligence to enhance the conversational experience.

For example, suppose you've developed an application for ordering coffee. You want your users to be able to make conversational requests such as:

- "I need an espresso"

- "Large dark roast coffee"

- "Can I have a large coffee, please? Wait, make that decaf."

- "How much is a cappuccino?"

Your dialog model depends on understanding the user's input; that understanding is passed to the dialog by the ASR and NLU models. In Mix.nlu, you define the formal representation of the known entities (or concepts) and the relationships among them (the ontology). The dialog uses this understanding to continue the conversation, for example, by saying:

- "Sure, what size espresso would you like?"

- "OK, confirming a large coffee with no milk or sugar. Is that right?"

- "One large decaf coming up."

- "Our small cappuccino is $2.50. Should I get one started for you?"

Once you have a set of known entities from NLU, you're ready to start specifying your dialog. Your dialog will be defined as a set of conditional responses based on what the system has understood from NLU, plus what it knows from other sources that you integrate (for example, from the client device's temperature sensor or from a backend data source).

For example, if the user's known intent is orderCoffee, but the "size" concept is unknown, you might specify that the system:

- Explicitly ask the user to provide the size value: "Sure, what size?"

- Set a default value such as "regular" and ask the user to implicitly confirm the selection: "Sure, one regular coffee coming up!"

- Ask the client app to query a database to return the user's preferred coffee size: "Sure, your usual venti?"
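The three strategies above can be sketched as plain conditional logic. This is only an illustration under stated assumptions: in Mix.dialog you express these conditions with nodes rather than code, the `MissingSizeStrategy` enum and `respond_to_order` function are hypothetical, and `preferred_size` stands in for the result of a backend query made by the client app.

```python
from enum import Enum
from typing import Optional


class MissingSizeStrategy(Enum):
    ASK = "ask"          # explicitly ask the user for the value
    DEFAULT = "default"  # assume a default and confirm implicitly
    LOOKUP = "lookup"    # use a value returned by a backend query


def respond_to_order(entities: dict, strategy: MissingSizeStrategy,
                     preferred_size: Optional[str] = None) -> str:
    """Pick the next system response for an orderCoffee intent."""
    drink = entities.get("CoffeeType", "coffee")
    size = entities.get("Size")
    if size is not None:
        return f"One {size} {drink} coming up!"
    if strategy is MissingSizeStrategy.ASK:
        return "Sure, what size?"
    if strategy is MissingSizeStrategy.DEFAULT:
        return f"Sure, one regular {drink} coming up!"
    # LOOKUP: preferred_size stands in for the backend result.
    if preferred_size:
        return f"Sure, your usual {preferred_size}?"
    return "Sure, what size?"  # fall back to asking if the lookup found nothing
```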

Mix.dialog offers several response types that can be specified at each turn, including questions for clarifying input, gathering information, confirming information, or guiding the user through a task.

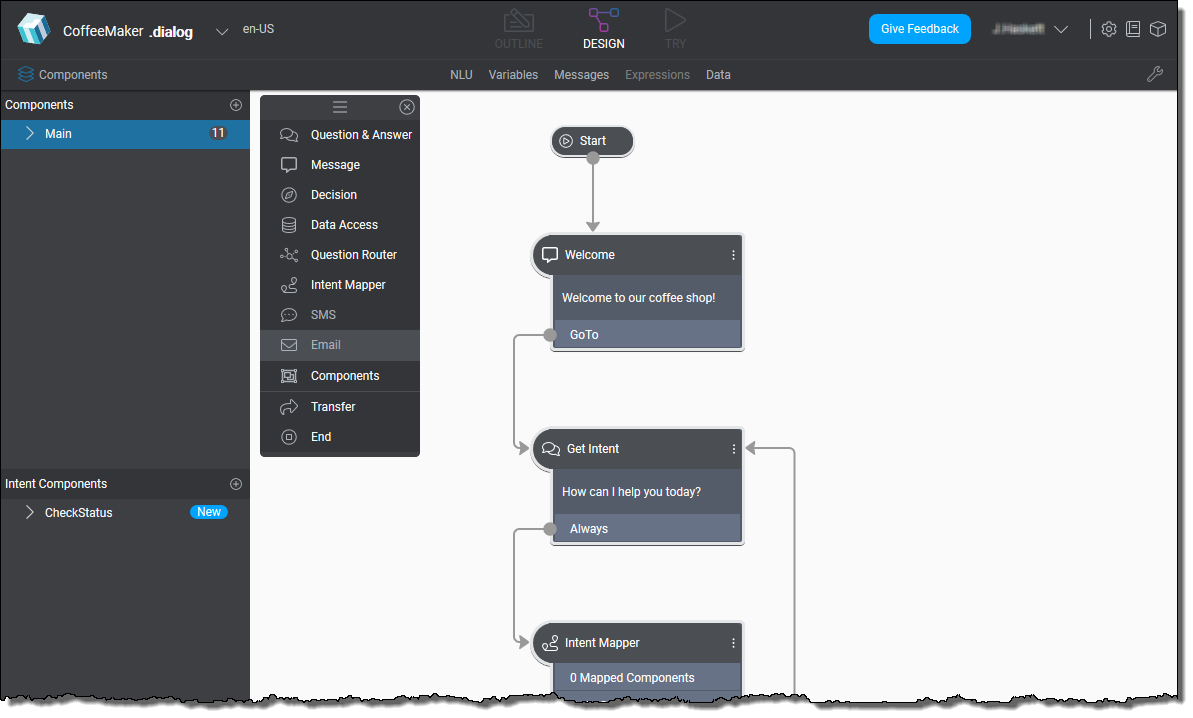

Developing a dialog model

The Mix.dialog tool enables you to design and develop advanced multichannel conversational experiences.

In particular, it allows you to:

- Define a set of conditions that describe how your system should respond to what it understands (the "understanding" part is specified by you in Mix.nlu).

- Drag and drop nodes to quickly create your conversational flow and fill in the details, sharing or branching behavior based on channel, mode, or language for optimal user experience.

- Take in information that you have about your users, whether from a device or from a backend system, and use that information in real time to provide personalized information and guidance.

- Instantly try out your conversations in a preview mode at any time while you work. The Mix.dialog Try tab simulates the user experience on different engagement channels and lets you observe the dialog flow as it happens.

Like training an NLU model, building a natural language application is an iterative process. The ability to try out the dialogs you're defining before deploying them is a key part of dialog modeling.

**User**: “Text Bob.”

*A contact query is made to resolve Bob, and the client returns two results.*

**System**: “Which Bob do you mean? Bob Smith from Metropolis or Robert Brown from Gotham City?”

**User**: “The one from Metropolis.”

Queries like this one are specific to a dialog task: they are cleared once the task is finished. (Note: they may be kept in dialog history for some turns to resolve [anaphora](#anaphora-and-ellipsis), but eventually they are wiped and no longer available to the dialog.)

The system's responses (prompts and other behaviors)

In addition to choosing the type of response the system should execute (asking a question, seeking confirmation, etc.), you need to define the properties (also called facets) of the response. Common examples are:

- Audio (text-to-speech): what should the system say aloud?

- Text: what should the system type back?

- Graphic (for example, emoticon, color theme, animation): what should the system "persona" display, if anything?

- Video: should the system play a video in response?

- URL: should the system offer a URL in response?

You can define any response properties that are appropriate, but the most common responses are Audio and Text.
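One way to picture facets is a single response object that carries several renderings, from which each channel picks the one it can use. The `Response` class and `render` function below are a hypothetical model for illustration, not Mix's own schema; the fallback rule (voice prefers audio, other channels prefer text) is an assumption.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Response:
    """A system response with optional facets (hypothetical model, not Mix's schema)."""
    audio: Optional[str] = None  # text to be spoken with TTS
    text: Optional[str] = None   # text to be displayed, e.g. in a chat
    extras: dict = field(default_factory=dict)  # other facets, e.g. {"url": "..."}


def render(response: Response, channel: str) -> str:
    """Pick the facet that suits the channel, falling back to the other one."""
    if channel == "voice":
        return response.audio or response.text or ""
    return response.text or response.audio or ""


which_bob = Response(
    audio="Which Bob do you mean? Bob Smith from Metropolis or Robert Brown from Gotham City?",
    text="Which Bob do you mean?\n1. Bob Smith (Metropolis)\n2. Robert Brown (Gotham City)",
)
print(render(which_bob, "voice"))
print(render(which_bob, "chat"))
```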

Quick start

This section takes you through the process of creating a simple coffee application.

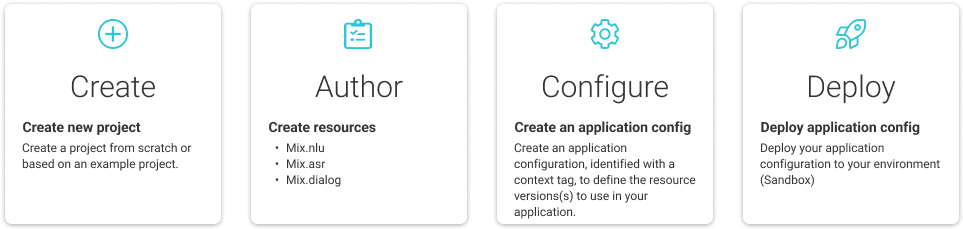

Creating applications in Mix involves the following steps:

- Create a project. A project contains all the data necessary for building Mix.asr, Mix.nlu, and Mix.dialog resources.

- Author ASR, NLU, and dialog resources using the Mix tools. You can also import existing resources from other projects. This is what you'll do in this section.

- Create an application configuration, which lets you define the resource version(s) you want to use in your application.

- Deploy the application configuration in a runtime environment, so that you can use the resources in your application, with one of the available runtime services:

  - ASR as a Service

  - NLU as a Service

  - Dialog as a Service

  - TTS as a Service

- Run your client application.

Ready? Let's try it!


This section takes you through the process of creating a simple coffee application, deploying it in the runtime environment, and running a client application that understands the following dialog:

- System: Hello and welcome to the coffee app. What can I get you today?

- User: I want a double espresso.

- System: Perfect, a double espresso coming right up!

Before you begin

To run this scenario, right-click the links to download the following files:

- order_coffee.trsx: Defines the intent and entities used in the app

- order_coffee.json: Defines the dialog

Try it out!

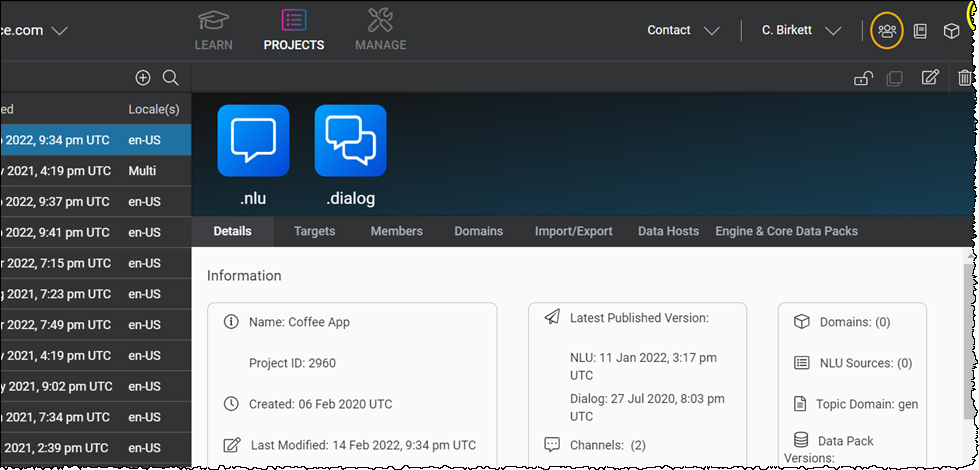

- Create a web chat project on the Mix dashboard:

  - Use the Web chat project template

  - Name your project: “Order Coffee”

- On the dashboard, select the Order Coffee project, and switch to the Import/Export tab.

- Click the TRSX upload icon in the Import area, navigate to the location where you downloaded the two files, and select the order_coffee.trsx file.

- Click the JSON upload icon in the Import area, and select the order_coffee.json file.

- Click the large .nlu button to open the project in Mix.nlu.

- Train your Mix.nlu model.

- Build the ASR, NLU, and Dialog resources.

- Set up a new application configuration.

- Deploy your application configuration.

- Install and run the Sample Python dialog application.

You can try the app with a few different utterances, specifying a coffee size and coffee type, for example:

- Give me a small coffee

- A large mocha please

- brew me an americano

This example uses the Dialog as a Service runtime service, but you can also try this project with the following services:

Learning about Mix

This section provides a list of resources available to help you learn about Mix.

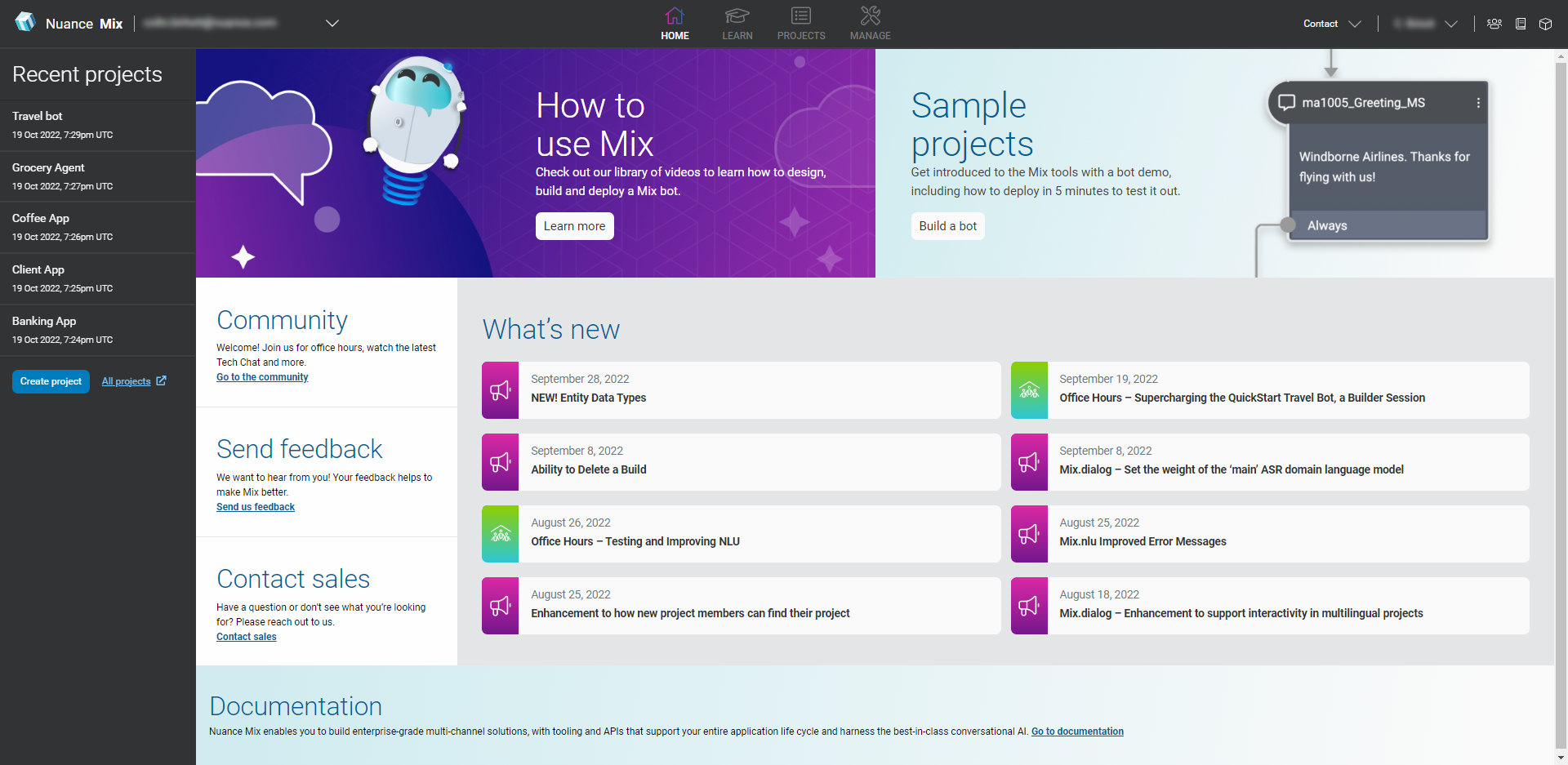

Home tab

After you log in to Mix, you first see the Home tab. The Home tab is a landing page that quickly onboards new users and gives experienced users a space to learn more about Mix, access recent projects, see the latest announcements, join the Mix community, and find contact information for further assistance.

To access the Home tab, click Home in Mix.dashboard.

On the Home tab, you can:

- Learn more about Mix by accessing the Learn tab

- Try out sample projects through our Build a bot demo

- See the latest Mix announcements and features

- Join the Mix community

- Send us feedback

- Contact our sales team for further assistance

- Access our documentation

By default, your five most recent projects are listed in the left column. Click a project and it opens in the Projects tab. Click All projects to see all your projects in the Projects tab.

If this is your first time using Mix, click Create project to create your first Mix project.

Learn tab

The Learn tab helps both new and experienced users learn about Mix. The tab provides lessons and tutorials, sample projects, helpful tips and tricks, and announcements of new Mix features.

To access the Learn tab, click Learn in Mix.dashboard.

In the top bar, you will see four tabs:

| Tab | Description |
|---|---|
| QuickStart | Provides tutorials with sample projects to launch your own QuickStart bot. You can also refer to the Quick start documentation for the process of creating a simple coffee application. |
| Lessons | Provides comprehensive training on using Mix tools (Mix.dashboard, Mix.nlu, Mix.dialog). |
| Mix.tricks | Provides answers to common questions and covers topics related to the latest Mix features. |
| Announcements | Provides information on recent Mix releases and highlights. |

Have questions?

Mix provides a community forum where you can ask questions, find solutions, get information on the latest Mix releases, and share knowledge with other Mix users. You can find the forum here:

https://community.mix.nuance.com/

You can also get to the forum by clicking the forum icon, available from the Mix window.

Send feedback

You can submit product feedback on your experience with Nuance Mix. Using our feedback page, you can report a bug, suggest new features or usability improvements, or add any other feedback you may have.

You can find our feedback page here:

https://nuance-mix-beta.ideas.aha.io/ideas/new

Be sure to describe the context of your feedback, what problem you're trying to solve, and how you would like to see the functionality or usability improved.

Other resources

See the following additional topics:

- NLU Model Specification and Dialog Application Specification for information on the file formats (.trsx and .json) referenced above in Try it out.

- Languages and Voices for the current list of languages and voices available.

- Working with Data Packs for information on the data packs available and on predefined entities.

- Limits for current data limits for Mix projects.

- Technical Requirements for information on optimum screen resolution and preferred browsers.

- Glossary of Terms for a list of common terms and abbreviations.

Planning your app

Like any application, a successful multichannel conversational application starts with good user interface design: extensive upfront planning, from identification of business objectives and all the activities users may wish to accomplish, to consideration of design principles for creating dialog strategies, dialog flows, and prompts (called "messages" in Mix).

Five steps: An overview

The main objective of an application is to obtain information from the user to accomplish a goal or series of goals or activities. Here are five steps that should help you plan your client application.

Step 1: Define conversations

Start by making a list of all the activities you imagine a user should be able to perform using your application. Begin to think of these activities as dialogs—conversations between your user and your app with the intent to accomplish a specific goal.

Step 2: Identify items to collect for each dialog

Once you have a list of the activities you plan to enable, define the items to collect from the user to complete each activity. These are the specific items that users want and that your application can offer such as, for the order-coffee function or intent, a large coffee or double espresso. Begin to think of these items to collect as entities.

Step 3: Define intents

After you define the items to collect that complete the various activities or dialogs, make a list of the phrases a user may use to start a particular intent. You may wish to use a formal process to capture sample sentences, either through data collection (historical phrases stored in the database of an existing application, or brainstorming sessions with colleagues) or through early usability testing. To import existing data, see Import data.

Step 4: Define entities

Once you’ve listed all the request phrases, you’ll want to start defining the words or phrases that users typically use to express the items to be collected. For example, for the “size” entity, the permitted values might be “small”, “medium”, “large”, and “x-large”.

Include synonyms and alternative words/phrases as well. For example, “smallest”, “normal”, “big”, “biggest you have”. Place yourself in your users’ shoes and think of how they will express what they want. For instance, users who frequent rival coffee shops may use different terms, such as “short”, “tall”, “grande”, and “venti”.

Don’t forget the optional words and phrases, such as “decaf” or “hold the caffeine” for the decaffeinated coffee product; “chocolate”, “caramel”, and “vanilla” for flavoring and/or topping; and so on. Depending on your product list, a request simply for “chocolate” might require disambiguation to determine whether the user wants hot chocolate, caffè/café mocha, or, based on the dialog context, chocolate topping.

Once you have defined the phrases for each item to be collected, you’re ready to attach a meaning to each word/phrase. This process consists of assigning the word or phrase an entity and value.

You may want to create a table or chart to capture the relationship between each word/phrase (literal) and its meaning. Another option is to capture, in individual files (one per entity), a newline-separated list of literals and values (meanings). These files can then be imported directly into Mix. See Importing entity literals for the options available.

Sample size.nmlist, detailing literals and associated values:

```
small|small
short|small
medium|medium
regular|medium
tall|medium
primo|medium
large|large
grande|large
extra-large|extra-large
venti|extra-large
massimo|extra-large
supremo|extra-large
```

Sample coffeeType.nmlist:

```
coffee|drip
joe|drip
espresso|espresso
mocha|mocha
mocaccino|mocha
```

| Value to collect (entity) | Literal (user request) | Meaning (value) |
|---|---|---|
| Size | small | small |
| | short | small |
| | medium | medium |
| | regular | medium |
| | tall | medium |
| | primo | medium |
| | large | large |
| | grande | large |
| | extra large | extra-large |
| | venti | extra-large |
| | massimo | extra-large |
| | supremo | extra-large |
| CoffeeType | coffee | drip |
| | joe | drip |
| | espresso | espresso |
| | mocha | mocha |
| | mocaccino | mocha |
| | ... | ... |
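Before importing a literal list, it can help to check it locally. The minimal reader below assumes only the `literal|value` per-line form shown in the samples; the real import format may support more options (see Importing entity literals), and the `parse_nmlist` name is hypothetical. Lines without a `|` raise `ValueError`, which is a quick way to catch typos.

```python
def parse_nmlist(text: str) -> dict[str, str]:
    """Map each literal to its value from 'literal|value' lines."""
    mapping: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue  # ignore blank lines
        # Raises ValueError if the line has no '|' separator.
        literal, value = line.split("|", 1)
        # Lowercase literals so lookups are case-insensitive.
        mapping[literal.strip().lower()] = value.strip()
    return mapping


SIZE_NMLIST = """\
small|small
short|small
regular|medium
grande|large
venti|extra-large
"""

sizes = parse_nmlist(SIZE_NMLIST)
print(sizes["grande"])             # large
print(sizes.get("Venti".lower()))  # extra-large
```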

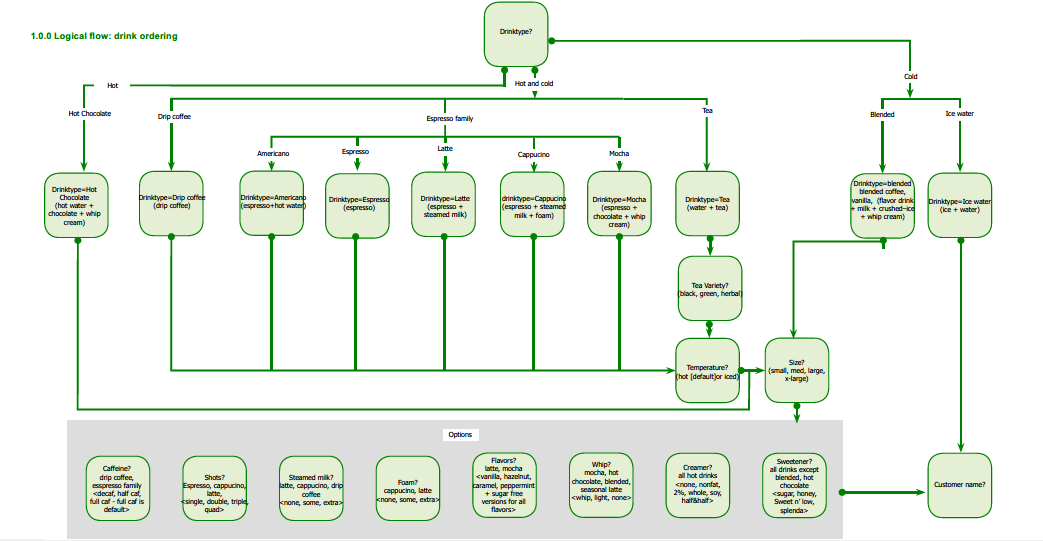

Step 5: Create sample conversation flows

Once you’ve defined your entry points (intents) and the associated items to collect (entities), your next step is to create sample conversations for each dialog. The flow of each conversation is called a dialog flow.

Dialog flows are essentially a mapping of interactions between user requests and application prompting. Conversational interactions are complex, since users can say similar things in different ways and may respond in ways that you’ve not anticipated. Dialog flows serve as an early test of your dialog model, to make sure that it flows naturally and to identify plausible responses you might have to consider in the design phase. Mix.nlu and Mix.dialog both provide the means to test (try) the models that you create and to improve upon them before integrating with a client application.

Armed with a list of requirements, goals, and primary use cases, you're ready to start defining the dialog in Mix so that all stakeholders—business owners, speech scientists, VUI designers, developers, and so on—have access to the requirements in Mix.

Defining the dialog

When you define the dialog, you must consider a number of factors before coming up with a strategy or set of strategies, such as:

- How the user will navigate through the system (determined to a large extent in the previous topic)

- How prompts (messages) will be worded to obtain information from the user

- How information (feedback) will be presented

- How failure scenarios will be handled

Dialog design principles

Comprehensive coverage of the entire design process is beyond the scope of this topic. Below is a list of major items to think about, to set you on the right track.

Analyze your application

You did this earlier in the five steps to planning your app. At this point you should have identified:

- Conversations or dialogs (activities) the application will handle

- Information to collect for each dialog

- Sample phrases that capture the user’s intent to start a dialog

- Words and phrases a user might say to express specific requests

- Sample conversation flows for each dialog

Map the user interface

Once you’ve decided on general requirements and your approach (tasks to perform, information to collect, information to return to the user, dialog flows), it’s time to sketch or map out how users will interact with the application. In these early stages you might choose to use a diagramming application like Microsoft® Visio to represent in a flow chart each dialog state in the application and use arrows to point to other dialog states based on what the user says. In Mix.dialog you will recreate and streamline this graphical representation and make it available to all stakeholders.

In the future, Mix will provide all the tools necessary to create dialog design documents, from early design flow mockups to detailed specifications, which can be reviewed with stakeholders, revised, and ultimately approved.

In the early stages remember to consider any constraints on information collection. For example, the business requirements of a drink-ordering app may demand that you first ascertain the user’s location before permitting an order to be placed (to ensure that a specific location carries the products requested). Similarly, information dependencies may exist that you need to build into the application; for example, the option to add “shots” may exist for coffees that fit within the “espresso family” but not for regular or “drip coffee”.

A flow chart indicating the information dependencies in your application will give you a visual map of the different branches your application will have to cover, and provide some guidance as to the best strategy to use in designing the dialog.

This sketch or map will help you build the conversational flow in Mix.dialog, with each specific task—such as asking a question, playing a message, and performing recognition—specified via a node. As you add nodes and connect them to one another, the dialog flow takes shape in the form of a graph, allowing you to visualize every piece of the conversational logic.
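The node-and-edge idea can be sketched in plain Python as a dictionary of nodes, each with a prompt and transitions keyed by a user event. The `Node` class, node names, and event labels are hypothetical; Mix.dialog defines its own node types and exports flows in its own JSON format (see Dialog Application Specification).

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    prompt: str
    # Maps a user event (an intent or condition label) to the next node's name.
    transitions: dict = field(default_factory=dict)


FLOW = {
    n.name: n
    for n in [
        Node("greet", "What can I get you today?", {"orderCoffee": "ask_size"}),
        Node("ask_size", "What size would you like?", {"gotSize": "confirm"}),
        Node("confirm", "Perfect, coming right up!"),
    ]
}


def walk(start: str, events: list[str]) -> list[str]:
    """Follow the graph from start, returning the prompts played in order."""
    node = FLOW[start]
    prompts = [node.prompt]
    for event in events:
        next_name = node.transitions.get(event)
        if next_name is None:
            break  # no edge for this event; a real flow would route to error handling
        node = FLOW[next_name]
        prompts.append(node.prompt)
    return prompts
```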

Be clear, consistent, and efficient

Design your messages to encourage the simplest and most direct responses possible. Users want speed and efficiency. The fewer steps it takes to complete a task, the more efficient the system seems to users. For more information on directing user responses, see Prompting the user.

Support universal commands such as “main menu”, “escalate”, “goodbye”

Providing the ability to invoke these commands at any point in the conversation gives users control over the dialog. Design the application to allow users to say “main menu” should they miss or forget instructions, “escalate” if they get stuck, and “goodbye” if they wish to leave.
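A common way to make these commands available "at any point" is to check them before the current dialog state handles the input. The sketch below is a hypothetical illustration: it matches exact strings for simplicity, whereas a real application would recognize these commands as NLU intents, and the action names are made up.

```python
from typing import Callable

# Hypothetical mapping from universal commands to actions. In a real app these
# would be recognized as NLU intents rather than matched as exact strings.
UNIVERSAL_COMMANDS = {
    "main menu": "go_to_main_menu",
    "escalate": "transfer_to_agent",
    "goodbye": "end_session",
}


def route(utterance: str, current_state_handler: Callable[[str], str]) -> str:
    """Check universal commands first; otherwise let the current state handle the input."""
    action = UNIVERSAL_COMMANDS.get(utterance.strip().lower())
    if action is not None:
        return action
    return current_state_handler(utterance)


def ask_size_handler(utterance: str) -> str:
    """Stand-in handler for a hypothetical 'ask size' dialog state."""
    return f"size:{utterance}"


print(route("Main menu", ask_size_handler))  # go_to_main_menu
print(route("large", ask_size_handler))      # size:large
```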

Gracefully handle errors

Errors and misunderstandings are inevitable, just as they are in regular, everyday conversation. Try to anticipate problems and give users effective instructions and feedback to get them back on track smoothly. Common errors include recognition/find-meaning failures such as no-match and no-input conditions. Provide the appropriate level of instruction given the failure condition/error to move the user along in as natural a way as possible. Suggestions are provided in Handling errors.

Confirm but don’t overdo it

Confirmation has its place: for example, for disambiguation, error handling, and obtaining confirmation before committing a transaction. However, it’s not efficient to confirm items one at a time; unnecessary confirmation can double the length of the interaction and frustrate users. For this reason, it’s best to confirm when a block of information has been completed rather than after each individual piece of information. See Requesting confirmation.
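Confirming a completed block rather than each item can be sketched as: keep asking for whatever is missing, and only when every item is filled, ask one confirmation question for the whole order. The slot names and wording below are hypothetical.

```python
# Hypothetical slot names and questions for an orderCoffee dialog,
# asked in this order.
QUESTIONS = {
    "CoffeeType": "What would you like to drink?",
    "Size": "What size would you like?",
}


def next_prompt(slots: dict[str, str]) -> str:
    """Ask for the first missing item; confirm only once the whole block is filled."""
    for name, question in QUESTIONS.items():
        if name not in slots:
            return question
    # One confirmation for the whole order instead of one per item.
    return f"So that's a {slots['Size']} {slots['CoffeeType']}. Is that right?"
```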

Avoid cognitive overload

Reduce the short-term memory load on users by providing visual as well as auditory (multimodal) feedback, by limiting options whenever possible, and by splitting up complex tasks into a sequence of smaller interactions.

Maintain context

A well-designed application tracks what the user has said (or typed/tapped) and responds in context. Your strategy for maintaining conversational context should take into account factors such as the number of turns or user interactions to retain and when to release the context (for example, if the user is in the middle of a transaction and clicks the Back button or says “cancel”).

Another consideration is intent switching: Do you want to give your users the ability to switch between intents; for example, to move from the place-order dialog to the location dialog (to view a list of nearby coffee shops)? Are users able to switch back with no loss of contextual awareness, or would you prefer that they finish one task at a time? You’ll need to balance the benefits of usability against application complexity.
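Both considerations (how many turns to keep, and whether a suspended task can be resumed) can be sketched with a bounded history and a stack of tasks. The `DialogContext` class, the intent names `placeOrder` and `findLocation`, and the rule that "cancel" clears everything are assumptions for illustration, not Mix behavior.

```python
from collections import deque
from typing import Optional


class DialogContext:
    """Recent turns plus a stack of suspended tasks (hypothetical design)."""

    def __init__(self, max_turns: int = 5) -> None:
        # A bounded deque drops the oldest turn once max_turns is reached.
        self.history: deque = deque(maxlen=max_turns)
        self.tasks: list[str] = []

    def add_turn(self, utterance: str) -> None:
        if utterance.strip().lower() == "cancel":
            # Release all context when the user cancels.
            self.history.clear()
            self.tasks.clear()
            return
        self.history.append(utterance)

    def switch_to(self, intent: str) -> None:
        """Suspend the current task and start a new one on top of it."""
        self.tasks.append(intent)

    def finish_current(self) -> Optional[str]:
        """End the current task and return the task to resume, if any."""
        if self.tasks:
            self.tasks.pop()
        return self.tasks[-1] if self.tasks else None


ctx = DialogContext(max_turns=2)
for turn in ["hi", "a latte", "large"]:
    ctx.add_turn(turn)
print(list(ctx.history))  # ['a latte', 'large']
ctx.switch_to("placeOrder")
ctx.switch_to("findLocation")  # user asks for nearby shops mid-order
print(ctx.finish_current())    # placeOrder
```

A stack lets the user return to the order with its details intact; forcing one task at a time would simply mean refusing `switch_to` while a task is open.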

Remember, you are guiding the user

The best applications focus on the users’ goals and on achieving them in the most efficient and intuitive way possible. The structure of your application will depend on the natural logic of the application (the dialogs or actions to perform and the corresponding information to collect/return), and also on your users’ responses to your questions and on your messages—how you respond not only to successful results but also to errors, ambiguities, and incomplete information.

Prompting the user

Prompts (called "messages" in Mix) permit an application to interact with your users. Not only do they set the tone of the application, they also direct users toward the fulfillment of their goals.

For example, messages enable an application to:

- Greet users

- Convey the system’s readiness to perform an action or command

- Ask questions of users

- Provide information to users

- Respond to what a user says or does, in the most specific and relevant way to help them accomplish what they want to do

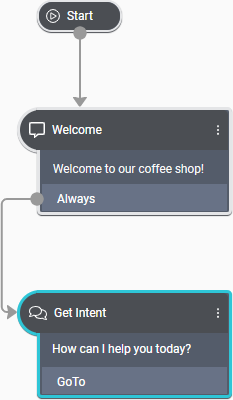

With the help of the sample dialog flows you defined in Planning your app, you should have a good understanding of what types of messages you will need. For example:

- Initial message consisting of a greeting and/or an open-ended question such as "Welcome to our coffee shop!" or "How can I help you today?" (The example shows how you might start such a conversation in Mix.dialog.)

- Open-ended questions at the start of specific dialogs to invite users to express their intent, which may be focused to guide the user, such as “What beverage would you like? Our specialty is coffee.”

- Error recovery responses in the event of silence, no-matches, failure to return interpretation results, and system/application errors. See Handling errors.

- Confirmation messages in the form of closed questions that require a yes- or no-type response such as “I’ll go ahead and place the order now, okay?” See Requesting confirmation.

Consider how you want to interact with the user. For example, in the form of:

- Text to be rendered using text-to-speech (TTS)

- Text to be visually displayed, for example, in a chat

- Audio file

- Buttons or other interactive elements, for example, in a web or multi-channel application

- DTMF in voice (IVR) applications

Your dialog design may, after all, support multiple channels (such as IVR/Voice or Digital) and use channel-specific messages as needed. Each channel, in turn, will support multiple modalities (such as rich text, audio, TTS, interactivity, DTMF).

Handling errors

No matter how clear your messages are, a user may still misunderstand, become confused, or encounter an application/system error. When designing your application, you need to communicate clearly, keep the user engaged, avoid ambiguities, and detect and recover from errors.

Your role is to guide your users: give them just enough information to keep them moving toward their goal, in as natural a way as possible. Anticipate errors and gently lead users by reinforcing information and by escalating instructions and feedback as required.

Common failure conditions include recognition/find-meaning failures:

- No-match events: for example, when the user’s utterance is not recognized. This event may occur due to limitations in the NLU model, which indicate that retraining may be required, or due to background noise such as the user coughing or a dog barking.

- No-input events: for example, when the user remains silent or the system is simply unable to detect an utterance.

Create special handling for these types of events: consider how many times your app should prompt the user when the failure occurs and what types of messages to use.

For example, for a second no-input event, you might use “Sorry, what was that?” On the third no-input (the user continues to remain silent), provide more detailed instructions or examples in case the user is uncertain what to do or say next, such as “Here are some things you can say...” Another option is to suggest that the user try a different action, such as “Sorry, you can try one of these...” As a final fallback strategy, use a yes/no question to move the dialog along, such as “Do you still want to place an order? (Just say ‘Yes’ or ‘No’.)”
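The escalation strategy described above can be sketched as a per-state counter that selects a progressively more helpful message on each consecutive no-input event. This is a minimal Python sketch of the design logic only, not how Mix implements error recovery; the `NoInputHandler` class, the `ESCALATION` table, its wording for the first reprompt, and the limit of four attempts are hypothetical choices for illustration.

```python
from typing import Dict, Optional

# Hypothetical escalation table for one dialog state: the key is the number of
# consecutive no-input events, the value is the message to play or display.
ESCALATION: Dict[int, str] = {
    1: "What size would you like?",  # simple reprompt
    2: "Sorry, what was that?",
    3: "Here are some things you can say: 'small', 'medium', or 'large'.",
    4: "Do you still want to place an order? (Just say 'Yes' or 'No'.)",
}


class NoInputHandler:
    """Tracks consecutive no-input events and escalates the recovery message."""

    def __init__(self, messages: Dict[int, str]) -> None:
        self.messages = messages
        self.count = 0

    def on_no_input(self) -> Optional[str]:
        # Each silence moves one step up the escalation ladder; None means the
        # retry budget is spent and the app should end or hand off instead.
        self.count += 1
        return self.messages.get(self.count)

    def on_input(self) -> None:
        # Any detected input resets the counter for this dialog state.
        self.count = 0


handler = NoInputHandler(ESCALATION)
for _ in range(5):
    print(handler.on_no_input())
```

Returning `None` once the table is exhausted leaves the final decision (end the conversation, transfer to an agent, and so on) to the caller rather than reprompting forever.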

If many no-input timeouts are observed for a dialog state, consider refining the language. A message may be misleading, incorrect, or insufficient for the user to act on.

Failure scenarios don’t have to be negative experiences—instead, reinforce information about what is expected of the user at that point in the dialog flow and the available options. When appropriate, provide examples. Users pattern their responses on examples.

For system errors, provide a description of the error and suggestions for how to recover. A recovery suggestion is preferable to a cryptic error message and a generic “Please try again”.

Handling ambiguity

You have a certain degree of control over what your users will say or do. Users will react to the views to which they have navigated and to the buttons they see displayed in your application’s interface. Their reactions are also influenced by their past experiences and expectations when dealing with your business.

Sometimes, however, a user’s request is too vague or general and a single interpretation cannot be made (meaning cannot be determined). In these cases, the application must prompt the user to fill in the missing information. For example, you might:

- Provide examples

- List and/or aggregate options

- Rely on the multimodal experience to enable all input methods, including touch selection

- Reduce the information to collect; for example, at the application design level by setting default values or by tracking user behavior

When multiple, non-conclusive interpretations are identified and/or the user rejects the application’s response, you can use the confidence scores in the returned interpretation results to narrow down the choices. Typically, applications are most interested in results with the highest score. However, you can also use confidence scores to determine whether:

- Confirmation is required from the user; for example, when confidence is low, you might require explicit confirmation (“You want to view your list of favorite beverages, is that correct?”)

- A list of the highest-scoring interpretations (for example, the top three) should be presented to the user, who can then make a selection.
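The confidence-based decisions above can be sketched as a small function over a ranked list of results, assuming each interpretation carries an intent name and a confidence score between 0 and 1. The `Interpretation` type, the intent names, and the `REJECT`, `ACCEPT`, and `MARGIN` thresholds are hypothetical and are not the NLU runtime's result format; real thresholds should be tuned against your own data.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Interpretation:
    intent: str
    confidence: float  # assumed to range from 0.0 to 1.0


# Hypothetical thresholds; tune against real traffic.
REJECT = 0.30  # below this, treat the result as a no-match
ACCEPT = 0.80  # at or above this, accept without explicit confirmation
MARGIN = 0.10  # top candidates closer than this are considered ambiguous


def next_step(results: List[Interpretation]) -> Tuple[str, List[str]]:
    """Decide how to proceed from a list of interpretation results."""
    ranked = sorted(results, key=lambda r: r.confidence, reverse=True)
    if not ranked or ranked[0].confidence < REJECT:
        return ("no-match", [])
    best = ranked[0]
    # Several near-equal candidates: let the user pick from the top three.
    if len(ranked) > 1 and best.confidence - ranked[1].confidence < MARGIN:
        return ("choose", [r.intent for r in ranked[:3]])
    if best.confidence >= ACCEPT:
        return ("accept", [best.intent])
    # A single plausible but uncertain reading: ask an explicit yes/no question.
    return ("confirm", [best.intent])


print(next_step([Interpretation("VIEW_FAVORITES", 0.62),
                 Interpretation("ORDER_COFFEE", 0.21)]))
```

Checking for ambiguity before checking the acceptance threshold means two strong but near-equal candidates still lead to a choice list rather than a silent guess.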

Requesting confirmation

Confirmation is the act of requesting that the user accept or reject the application’s understanding of one or more utterances. Confirmation is useful and necessary when:

- Confidence scores indicate that the system probably, but not definitely, returned the correct meaning

- The user has indicated an irreversible operation (for example, “cancel” or “buy now”)

- The application wants to assure the user that it has understood (or the user hasn’t changed his or her mind); for example, “I think you want to reload your debit card with $25. Is that correct?”

Role of confirmation in the dialog

In general, confirmation plays three key roles in dialog design. Confirmation ensures that:

- The application has an opportunity to validate recognition/interpretation hypotheses.

- Actions that have a high cost or may greatly impede performance are not inadvertently carried out, such as submitting payment or exiting the application.

- The user has confidence in the application’s ability to satisfy the task at hand, and knowledge of where the conversation is moving (a mental model of what comes next).

All of these factors influence the user’s confidence in the application and the business.

Confirmation strategies

In general, it is not efficient to confirm items one at a time. Unnecessary confirmation can double the length of the interaction and frustrate users. Instead, consider confirming once a block of information (ideally, a group of related items) has been collected, rather than after each individual piece of information.
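Confirming a block of related items, rather than each item, can be sketched by holding back the confirmation prompt until every required slot is filled. The slot names and the `REQUIRED_SLOTS` list are hypothetical, modeled on the coffee-ordering example used in this section, and the prompt wording mirrors this section's explicit confirmation example.

```python
from typing import Dict, List, Optional

# Hypothetical slots that together make up one coffee order.
REQUIRED_SLOTS: List[str] = ["size", "drink", "extras"]


def order_confirmation(collected: Dict[str, str]) -> Optional[str]:
    """Return one explicit confirmation for the whole order, or None if incomplete."""
    missing = [slot for slot in REQUIRED_SLOTS if slot not in collected]
    if missing:
        # Keep collecting; do not confirm each piece as it arrives.
        return None
    return (
        f"OK, confirming your order for a {collected['size']} {collected['drink']} "
        f"with {collected['extras']}. Have I got that right? Please say 'yes' or 'no'."
    )


order = {"size": "large", "drink": "peppermint latte"}
print(order_confirmation(order))  # still missing "extras", so nothing to confirm yet
order["extras"] = "extra whipped cream"
print(order_confirmation(order))
```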

You will notice in the second, more natural-sounding example that the application uses both implicit and explicit confirmation:

- Implicit confirmation: Demonstrated in the prompt “Next, I need the size of your peppermint latte... ” The choice the user has made (to order a peppermint latte) is presented in the prompt as information, yet does not require the user to take any action to move the dialog forward.

- Explicit confirmation: As in “OK, confirming your order for a large peppermint latte with extra whipped cream. Have I got that right? Please say ‘yes’ or ‘no’.” The user must respond, negatively or positively, to the prompt to move the dialog forward.

Implicit confirmation acknowledges the user’s choice and moves the conversation along faster. Although the user has an opportunity at any time to correct the application, the user may not notice the error, or may be unsure how to correct it or reluctant to do so. For this reason, use implicit confirmation only when the confidence level is high and the potential consequence of a misunderstanding is low.

Conversely, use explicit confirmation when:

- Confidence level is low

- The application is about to perform an action it cannot undo

- Business rules dictate use (for example, before submitting a payment)

Explicit confirmation instills confidence: it gives users the chance to say “Yes” or “No” (or to make a forced-choice decision) and helps prevent serious problems caused by false accepts, such as an unintended purchase.
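The guidance on choosing between implicit and explicit confirmation reduces to a small decision rule. This is a minimal sketch, assuming the application supplies a single confidence score plus two flags describing the action; the `HIGH_CONFIDENCE` threshold, the `Confirmation` enum, and the parameter names are hypothetical.

```python
from enum import Enum


class Confirmation(Enum):
    IMPLICIT = "implicit"
    EXPLICIT = "explicit"


HIGH_CONFIDENCE = 0.85  # hypothetical threshold; tune against real data


def confirmation_mode(confidence: float, irreversible: bool,
                      required_by_business_rule: bool) -> Confirmation:
    """Use implicit confirmation only when it is likely correct and cheap to get wrong."""
    if irreversible or required_by_business_rule or confidence < HIGH_CONFIDENCE:
        return Confirmation.EXPLICIT
    return Confirmation.IMPLICIT


# Choosing a drink with high confidence: mention it in the next prompt.
print(confirmation_mode(0.93, irreversible=False, required_by_business_rule=False))
# Submitting a payment: always ask, regardless of confidence.
print(confirmation_mode(0.99, irreversible=True, required_by_business_rule=True))
```

Any one of the three conditions for explicit confirmation is enough on its own, so a very confident result still gets an explicit question before an action that cannot be undone.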

Interacting with data

You may need to exchange data with an external system in your dialog application. For example, you may want to take into account information about the user's location from the client's GPS data, a user's stored preferences or contacts, or business-specific information such as the user's bank account balance or flight reservations—it all depends on the type of application you're building.

For example, consider these use cases:

- In a coffee application, after asking the user for the type and size of coffee to order, business requirements dictate that the dialog must provide the price of the order before completing the transaction. To get the price and provide it to the customer, the dialog must query an external system.

- In a banking application, after collecting all the information necessary to make a payment (the user's account, the payee, and the payment amount), the dialog is ready to complete the payment. In this use case, the dialog sends the transaction details to the client application, which then processes the payment and provides information back to the dialog, such as a return code and the account's new balance.

Exchanging data is done through a data access node in Mix. This node tells the client application that the dialog expects data to continue the flow. It can also be used to exchange information between the client app and the dialog.

Data access nodes allow you to exchange information by:

- Configuring Mix.dialog so that the dialog service interacts directly with a backend system to exchange data.

- Exchanging data using the gRPC API. In this case, variables are exchanged between the dialog service and the client application using the DLGaaS API. For more information, see Data access actions.
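When the client application handles the exchange through the gRPC API, its job is to read the variables the dialog sent, call the backend, and return variables the dialog can use. The sketch below shows only that client-side logic for the banking example, using plain dictionaries; it does not show the DLGaaS request and response messages that actually carry the variables (see Data access actions for those). The variable names `accountId`, `payee`, `amount`, `returnCode`, and `newBalance`, the return codes, and the in-memory `ACCOUNTS` store are all hypothetical.

```python
from dataclasses import dataclass
from decimal import Decimal, InvalidOperation
from typing import Dict


@dataclass
class Account:
    balance: Decimal


# Hypothetical in-memory stand-in for the bank's payment system.
ACCOUNTS: Dict[str, Account] = {"chequing-001": Account(Decimal("500.00"))}


def handle_payment(variables: Dict[str, str]) -> Dict[str, str]:
    """Process the payment described by the dialog's variables and build the reply."""
    account = ACCOUNTS.get(variables.get("accountId", ""))
    if account is None:
        return {"returnCode": "UNKNOWN_ACCOUNT"}
    try:
        amount = Decimal(variables["amount"])
    except (KeyError, InvalidOperation):
        return {"returnCode": "INVALID_AMOUNT"}
    if not amount.is_finite():
        return {"returnCode": "INVALID_AMOUNT"}
    if amount <= 0 or amount > account.balance:
        # Declined: report the unchanged balance so the dialog can explain why.
        return {"returnCode": "DECLINED", "newBalance": str(account.balance)}
    account.balance -= amount  # payee handling is omitted in this sketch
    return {"returnCode": "OK", "newBalance": str(account.balance)}
```

Using `Decimal` rather than `float` keeps currency arithmetic exact, so a balance of 500.00 minus 125.50 is reported back to the dialog as exactly 374.50.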

You can also send data (variables or entities) to the client application from a Mix.dialog question and answer node. See Set up a question and answer node.

Mix.dialog also gives you the ability to handle interactions with external systems using the external actions node. For example, to:

- End the current conversation, and optionally send information to an external system.

- Transfer the user to a live agent or another system, optionally exchange information with an external system, and handle follow-up actions if the external system returns information. See Set up an external actions node.