Dialog as a Service gRPC API

DLGaaS allows conversational AI applications to interact with Mix dialogs

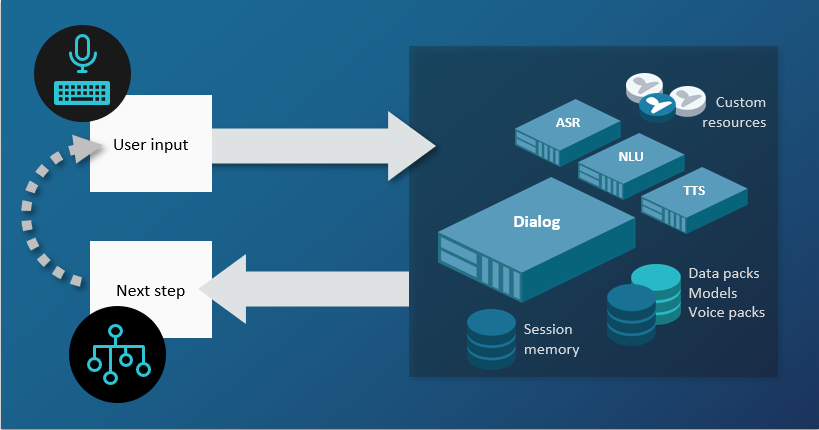

Dialog as a Service is Nuance's omni-channel conversation engine. The Dialog as a Service API allows client applications to interact with conversational agents created with the Mix.dialog web tool. These interactions are situated within a cohesive conversational session that keeps track of the ongoing context of the conversation, similar to what we do during the back and forth of a conversation with a person.

The gRPC protocol provided by Dialog as a Service allows a client application to interact with a dialog in all the programming languages supported by gRPC.

gRPC is an open source RPC (remote procedure call) software used to create services. It uses HTTP/2 for transport and protocol buffers to define the structure of the application. Dialog as a Service supports the gRPC proto3 version.

Version: v1

This release supports version v1 of the Dialog as a Service protocol. See gRPC setup to download the proto files and get started.

Dialog essentials

From an end-user's perspective, a dialog-enabled app is one that understands natural language, can respond in kind, and, where appropriate, can extend the conversation by following up the user's turn with appropriate questions and suggestions, all the while maintaining a memory of the context of what happened earlier in the conversation.

Dialogs are created using Mix.dialog; see Creating Mix.dialog Applications for more information. This document describes how to access a dialog at runtime from a client application using the DLGaaS gRPC API.

This section introduces concepts that you will need to understand to write your client application.

What is a conversation?

The flow of the DLGaaS API is based around the metaphor of a conversation between two parties. Specifically, with DLGaaS, this is a conversation between one human user—who enters text and speech inputs through some sort of client app UI—and a Dialog agent running on a server. Specifically, the API allows an interface between a client app and the Dialog agent. The model here is a conversation between a person and an agent from an organization or company that a person might want to contact.

Similar to a person dealing with a human agent, the human user is assumed to have some purpose in the conversation. They will come to the conversation with an intent, and the goal of the agent is to help understand that intent and help the person achieve it. The person might also introduce a new intent during the conversation.

To understand how the flow of the API works, it helps to reflect for a moment on what is a conversation. In its simplest form, a conversation is a series of more or less realtime exchanges between two people over a period of time. People take turns speaking, and communicate with each other in a back-and-forth pattern.

Structure of a conversation

Taken a little abstractly, a conversation between a user and a Dialog agent could look like this:

- Some formalities at the start to establish communications, agree to have a conversation, and establish resources to keep track of the conversation.

- Once the formalities are done, the user signals to the Dialog agent a desire to begin, and the agent replies to start off the conversation.

- They continue through a few rounds of back and forth where the user says something or provides some requested data, the Dialog agent processes this, and responds to carry on the dialog flow.

- This process continues until either the user or the Dialog agent ends the conversation.

What this looks like in the API

In the Dialog client runtime API, you use a Start request to establish a conversation. This creates a session on the Dialog side to hold the conversation and any resources it needs for a set timeframe.

The dialog proceeds in a series of steps, where at each step, the client app sends input from the user and possibly data, and the Dialog agent responds by sending informational messages, prompts for input, references to files for the client to use or play, or requests to the client for data. The way this works depends on the type of input:

- For text input, each step is is triggered by an Execute request from the client app.

- When there is audio input, each step is triggered by a series of ExecuteStream requests from the client app streaming the input audio.

- When the previous step Execute response included a request for the client app to look up and return data, the next cycle is triggered by an Execute request from the client app that includes the requested data.

- When the previous Execute response included messages and instructions to play to the user while a server-side data access is taking place, the client app has nothing to return, so the next cycle is triggered by an empty Execute request from the client app.

The flow of the API is structured around steps of user input, followed by the agent response. The agent's response at any step is a reply to the client input in the same step. But remember also that in conversations with some sort of agent, be it human or virtual, the agent also generally drives or steers the conversation. For example, opening the interaction with, "Welcome to our store. How may I help you?" In Mix.dialog, you create a dialog flow, and the conversation is driven by this flow.

By convention, an agent will generally start off the conversation and then continue to direct the flow of the conversation toward getting any additional information needed to fulfill the user's request. As well, when a user gives input, it is generally in response to something asked for in the previous step of the conversation by the agent. And when data is sent in a step, it is a response to a request for data in the previous step.

At the start of the conversation, the client app needs a way to "poke" the API to reply with the initial greeting prompts, but without sending any input. The API enables you do this by sending a first Execute request with an empty payload. This causes the Dialog agent to respond with its standard initial greeting prompts, and the conversation is underway.

See Client app development for a more detailed description of how to access and use the API to carry out a conversation.

Session

A session represents an ongoing conversation between a user and the Dialog service for the purpose of carrying out some task or tasks, where the context of the conversation is maintained for the duration. For example, consider the following scenario for a coffee app:

- Service: Hello and welcome to the coffee app! What can I do for you today?

- User: I want a cappuccino.

- Service: OK, in what size would you like that?

- User: Large.

- Service: Perfect, a large cappuccino coming up!

A session is started by the client, and ends when the natural flow of the conversation is complete or the session times out.

The length of a session is flexible, and can can handle different types of dialog, from a short burst of interaction to carry out one task for a user, or a series of interactions carrying out multiple tasks over an extended period of time.

Session ID

The interactions between the client application and the Dialog service for this scenario occur in the same session. A session is identified by a session ID. Each request and response exchanged between the client app and the Dialog service for that specific conversation must include that session ID referencing the conversation, and its context. If you do not provide a session ID, a new session is created and you are provided with a new session ID.

Session context

A session holds a context of the history of the conversation. This context is a memory of what the user said previously and what intents were identified previously. The context improves the performance of the dialog agent in subsequent interactions by giving additional hints to help with interpreting what the user is saying and wants to do. For example, if someone has just booked a flight to Boston, and then asks to book a hotel, it is quite likely the person wants to book a hotel in the Boston area, starting the same day as the flight arrives.

The session context is maintained throughout the lifetime of the session and added to as the conversation proceeds.

Session lifetime

A session's length in time is bounded by a session timeout limit, after which an idle session terminates if not already closed by the conclusion of the natural dialog flow.

Configure session lifetime

This limit is configurable up to a maximum of 259200 seconds or 72 hours (default of 900 seconds) and can be set at the start of the dialog using the Start method.

For more information on session IDs and session timeout values, see Step 3. Start conversation.

Check remaining session lifetime

Using the session ID, a client application can check whether the session is still active and get an estimate of how much time is left in the session using the Status method. For more information, see Step 5. Check session status.

Reset session time remaining

For asynchronous channels, you may want or need to keep the session going for longer than the upper limit. The client application can reset the time remaining in the session to the original limit by using either the Execute, ExecuteStream, or Update method.

If you simply want to reset the time remaining to keep the session alive without otherwise advancing the conversation, send an UpdateRequest specifying the session ID but with the payload left empty. For more information, see Step 6. Update session data.

Session data

Each session has memory designated to hold data related to the session. This includes contextual information about the user inputs during the session as well as session variables.

Session variables

Variables of different types can be used to hold data needed during a session. Dialog includes several useful predefined variables. You can also create new user-defined variables of various types in Mix.dialog.

For both predefined and user-defined variables, values can be assigned:

- In Mix.dialog when the dialog is defined

- Through data transfers from the client app or from external systems

Different variable types have their respective access methods defined, allowing you to retrieve variable values and components of those values in Mix.dialog. This allows you to define conditions, create dynamic messages content, and make assignments to other variables.

Assigning variables through data transfer

In some situations, you may want to send variables data from the client application to the Dialog service to be used during the session. For example, at the beginning of a session, you might want to send the geographical location of the user, the user name and phone number, and so on. You might also want to update the same values mid-session. As well, data transfers can be used during the session to provide wordsets specifying the relevant options for dynamic list entities.

Note: You can only assign values for variables that have already been defined in Mix.dialog, whether predefined or user-defined.

For more information, see Exchanging session data.

Session data lifetime

Values for variables stored in the session persist for the lifetime of the session or until the variable is updated or cleared during the session.

Playing messages and providing user input

The client application is responsible for playing messages to the user (for example, "What can I do for you today?") and for collecting and returning the user input to the Dialog service (for example, "I want a cappuccino").

Messages can be provided to the user in the form of:

- Text to be rendered using text-to-speech (TTS); this text can be generated directly through the DLGaaS API

- Text to be visually displayed, for example, in a chat

- Audio file to be played the the user

The client app can then send the user input to the Dialog service in a few ways:

- As audio to be recognized and interpreted by Nuance.

- As text to be interpreted by Nuance. In this case, the client application returns the input string to the dialog application.

- As interpretation results. This assumes that interpretation of the user input is performed by an external system. In this case, the client application is responsible for returning the results of the interpretation to the dialog application.

- As a selected item chosen by the user.

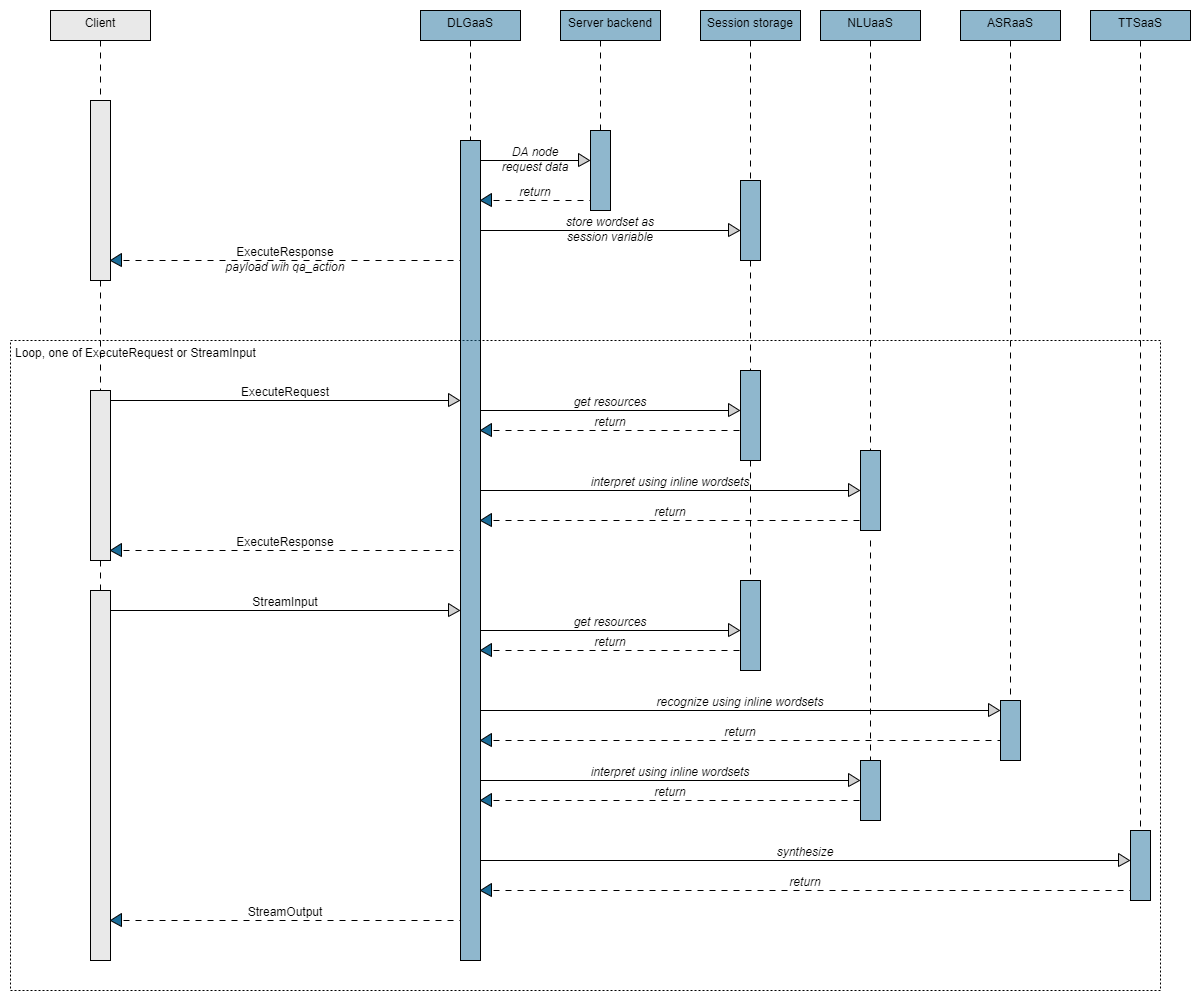

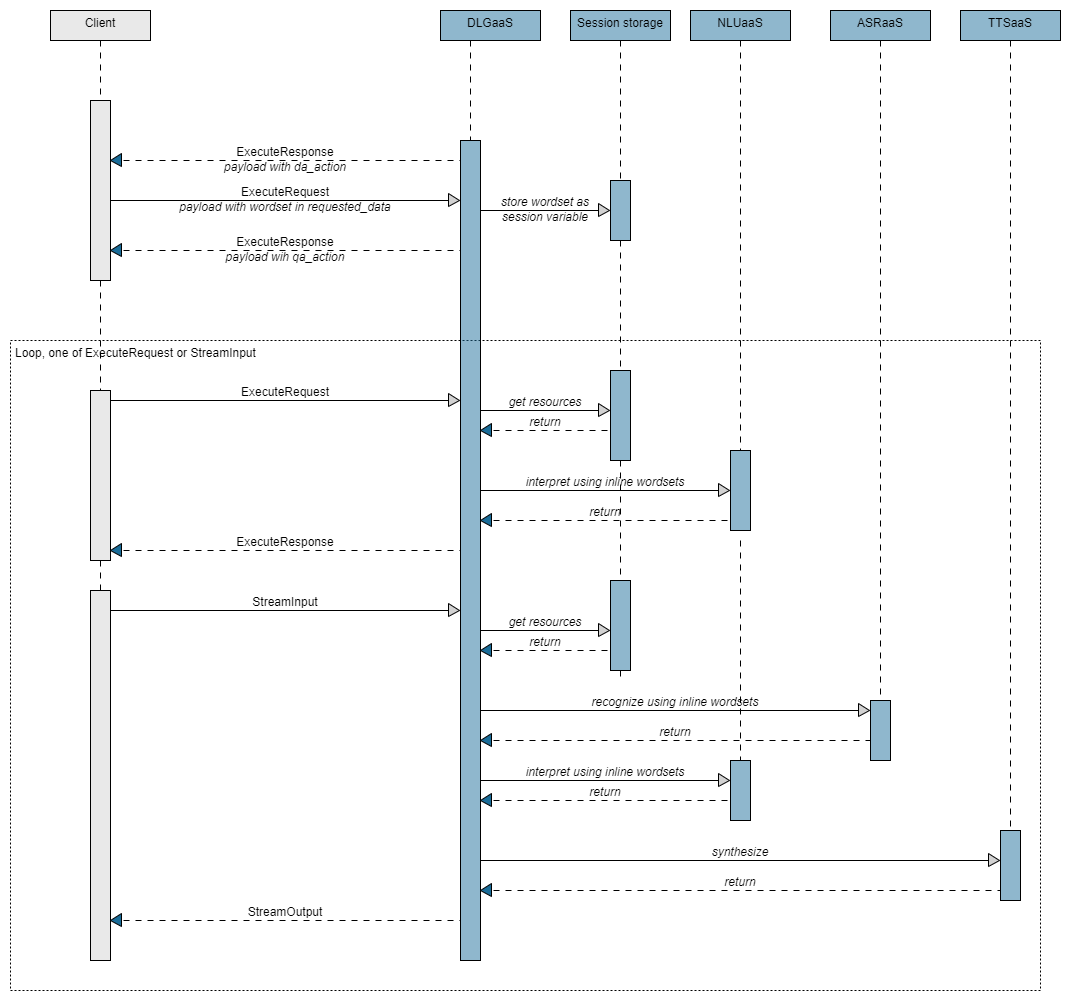

Orchestration with other Mix services

To support the Dialog service, different natural language and speech tasks will generally be required, depending on the channels your application is using and the types of input you are dealing with. You may need one or more of the following:

- Natural language understanding: For text inputs, taking in a text string and interpreting the intent of the sentence and any entities

- Speech recognition: For speech inputs, taking in speech audio and returning a text transcription

- Text to speech: For speech applications, taking in a text script and for the dialog response and returning this to the user as synthesized speech audio

The Dialog service does not itself perform these tasks but relies on other services to carry them out.

The Mix platform offers a set of Conversational AI services to handle these tasks:

- NLUaaS: For natural language understanding

- ASRaaS: For speech recognition

- TTSaaS: For generating text-to-speech

Your client application can handle these tasks either with the Mix services, or by using third party services.

Dialog service offers the possibility of special integration when using Mix services. Properly formatted requests sent to DLGaaS will automatically trigger calls to other Mix services. Rather than needing to separately call the other Mix services, Dialog can orchestrate with the other Mix services behind the scenes as follows. The Dialog service:

- Prepares and forwards a request to the specific Mix service

- Receives the response from the Mix service

- Prepares and forwards this response to the client application bundled as part of the standard DLGaaS response to the initial DLGaaS request

For orchestrated ASRaaS and TTSaaS requests, the DLGaaS service supports streaming of the audio input/output in both directions.

For more details about how to format inputs to trigger orchestration with Mix services, see Client app development.

Alternatively, if you prefer, you can directly handle the orchestration with the other Mix services or even third party tools rather than leaving it to Dialog.

Nodes and actions

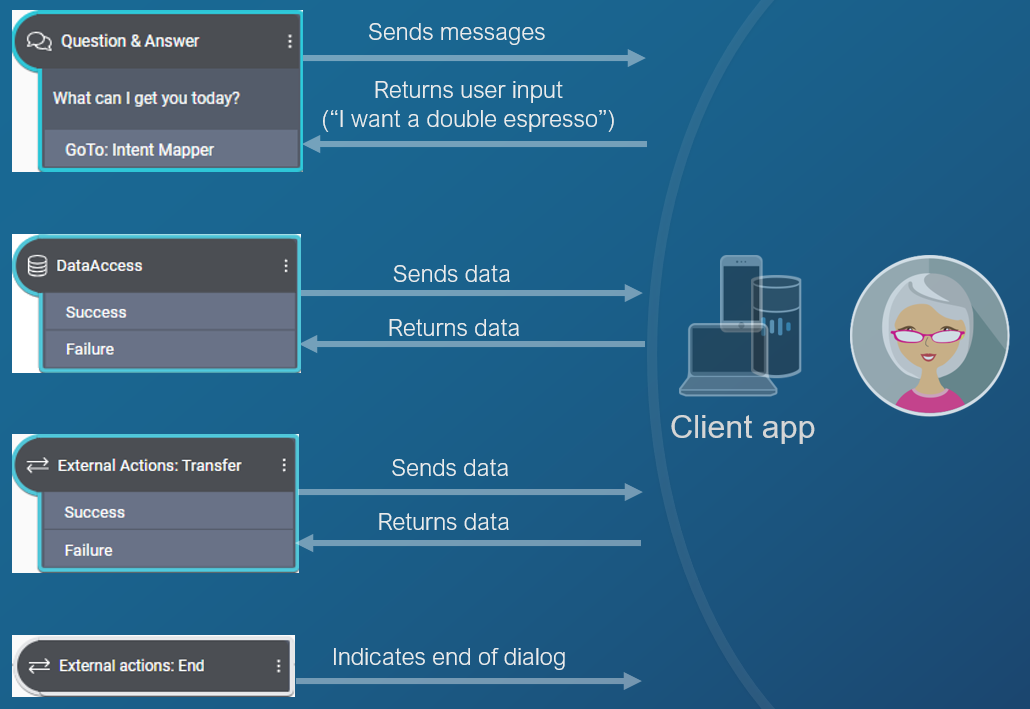

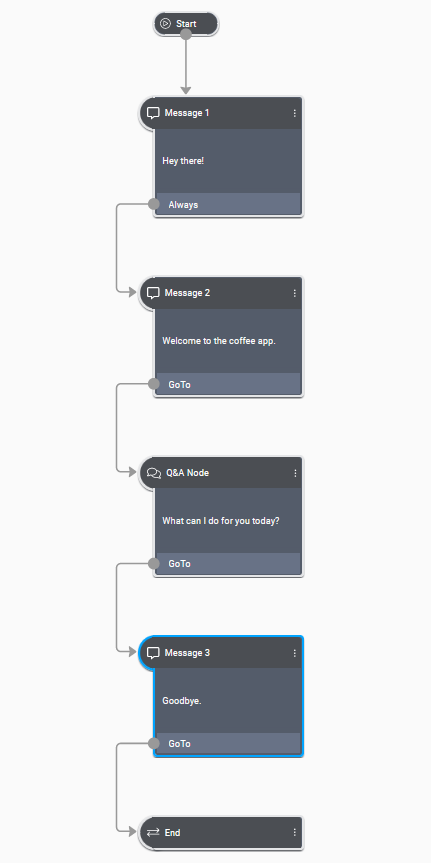

Mix.dialog nodes that trigger a call to the DLGaaS API

You create applications in Mix.dialog using nodes. Each node performs a specific task, such as asking a question, playing a message, and performing recognition. As you add nodes and connect them to one another, the dialog flow takes shape in the form of a graph.

At specific points in the dialog, when the Dialog service requires input from the client application, it sends an action to the client app. In the context of DLGaaS, the following Mix.dialog nodes trigger a call to the DLGaaS API and send a corresponding action:

Question and answer

The objective of the question and answer node is to collect user input. It sends a message to the client application and expects user input, which can be speech audio, a text utterance, or a natural language understanding interpretation. For example, in the coffee app, the dialog may tell the client app to ask the user "What type of coffee would you like today" and then to return the user's answer.

The message specified in a question and answer node is sent to the client application as a question and answer action. To continue the flow, the client application must then return the user input to the question and answer node.

See Question and answer actions for details.

Data access

A data access node expects data from a data source to continue the flow. The data source can either be a backend server or the client app, and this is configurable in Mix.dialog. For example, in a coffee app, the dialog may ask the client application to query the price of the order or to retrieve the name of the user.

When Mix.dialog is configured for client-side data access, information is sent to the client application in a data access action, identifying what data the Dialog service needs and providing any input data needed to retrieve that information. It also provides information to help the client application smooth over any delays while waiting for the data access. To continue the flow, the client application must return the requested data to DLGaaS.

See Data access actions for details.

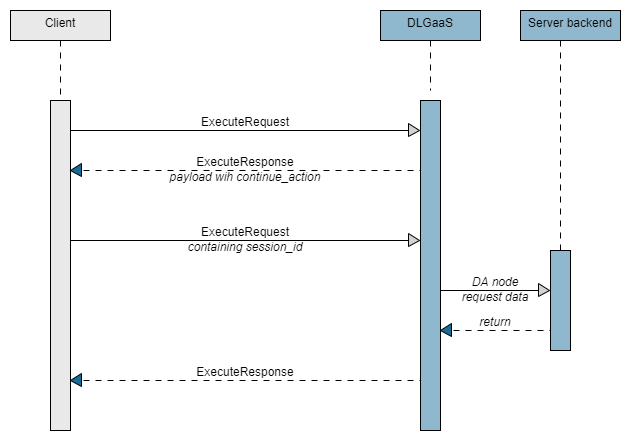

When Mix.dialog is configured for server-side backend data access, DLGaaS sends the client application a continue action and awaits a response before proceeding with the data access. The continue action provides information to help the client application smooth over any delays waiting on the DLGaaS communicating with the server backend. To continue the flow, the client application must respond to DLGaaS.

See Continue actions for details.

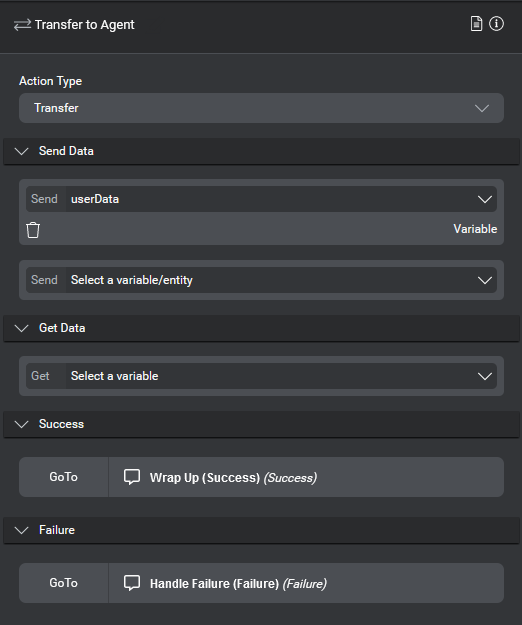

External actions: Transfer and End

There are two types of external actions nodes:

- Transfer: This node triggers an escalation action to be sent to the client application; it can be used, for example, to escalate to an IVR agent. It sends data to the client application. To continue the flow, the client application must return a

returnCode, at a minimum. See Transfer actions for details. - End: This node triggers an end action to indicate the end of the dialog application. It does not expect a response from the client app. See End actions for details.

Message node

The message node plays a message. The message specified in a message node is sent to the client application as a message action.

See Message actions for details.

Selectors

Most dialog applications can support multiple channels and languages, so you need to select which channel and language to use for an interaction in your API. This is done through a selector.

Selectors can be sent as part of a:

- StartRequest

- ExecuteRequest, whether a standalone ExecuteRequest or as part of a StreamInput

A selector is the combination of:

- The channel through which messages are transmitted to users, such as an IVR system, a live chat, a chatbot, and so on. The channels are defined when creating a Mix project.

- The language to use for the interactions.

- The library to use for the interaction. (Advanced customization reserved for future use. Use the default value for now, which is

default.)

You do not need to send the selector at each interaction. If the selector is not included, the values of the previous interaction will be used.

Prerequisites from Mix

Before developing your gRPC application, you need a Mix project that provides a dialog application as well as authorization credentials.

- Create a Mix project:

- Create a Mix.dialog application, as described in Creating Mix.dialog Applications.

- Build your dialog application.

- Set up your application configuration.

- Deploy your application configuration.

- Generate a "secret" and client ID of your Mix project: see Authorize your client application. Later you will use these credentials to request an access token to run your application.

- Learn the URL to call the Dialog service: see Accessing a runtime service.

- For DLGaaS, this is:

dlg.api.nuance.co.uk:443

- For DLGaaS, this is:

gRPC setup

Install gRPC for programming language, e.g. Python

$ pip install --upgrade pip

$ pip install grpcio

$ pip install grpcio-tools

Unzipped proto files

├── Your client apps here

├── nuance_dialog_dialogservice_protos_v1.zip

└── nuance

├── dlg

│ └── v1

│ ├── common

│ │ └── dlg_common_messages.proto

│ ├── dlg_interface.proto

│ └── dlg_messages.proto

│

├── asr

│ └── v1

│ ├── recognizer.proto

│ ├── resource.proto

│ └── result.proto

│

├── tts

│ └── v1

│ └── nuance_tts_v1.proto

├── nlu

│ └── v1

│ ├── interpretation-common.proto

│ ├── multi-intent-interpretation.proto

│ ├── result.proto

│ ├── runtime.proto

│ └── single-intent-interpretation.proto

└──rpc

├── error_details.proto

├── status.proto

└── status_code.proto

For Python, use protoc to generate stubs

$ echo "Pulling support files"

$ mkdir -p google/api

$ curl https://raw.githubusercontent.com/googleapis/googleapis/master/google/api/annotations.proto > google/api/annotations.proto

$ curl https://raw.githubusercontent.com/googleapis/googleapis/master/google/api/http.proto > google/api/http.proto

$ echo "generate the stubs for support files"

$ python -m grpc_tools.protoc --proto_path=./ --python_out=./ google/api/http.proto

$ python -m grpc_tools.protoc --proto_path=./ --python_out=./ google/api/annotations.proto

$ echo "generate the stubs for the DLGaaS gRPC files"

$ python -m grpc_tools.protoc --proto_path=./ --python_out=./ --grpc_python_out=./ nuance/dlg/v1/dlg_interface.proto

$ python -m grpc_tools.protoc --proto_path=./ --python_out=./ nuance/dlg/v1/dlg_messages.proto

$ python -m grpc_tools.protoc --proto_path=./ --python_out=./ nuance/dlg/v1/common/dlg_common_messages.proto

$ echo "generate the stubs for the ASRaaS gRPC files"

$ python -m grpc_tools.protoc --proto_path=./ --python_out=./ --grpc_python_out=. nuance/asr/v1/recognizer.proto

$ python -m grpc_tools.protoc --proto_path=./ --python_out=./ nuance/asr/v1/resource.proto

$ python -m grpc_tools.protoc --proto_path=./ --python_out=./ nuance/asr/v1/result.proto

$ echo "generate the stubs for the TTSaaS gRPC files"

$ python -m grpc_tools.protoc --proto_path=./ --python_out=./ --grpc_python_out=./ nuance/tts/v1/nuance_tts_v1.proto

$ echo "generate the stubs for the NLUaaS gRPC files"

$ python -m grpc_tools.protoc --proto_path=./ --python_out=./ --grpc_python_out=./ nuance/nlu/v1/runtime.proto

$ python -m grpc_tools.protoc --proto_path=./ --python_out=./ nuance/nlu/v1/result.proto

$ python -m grpc_tools.protoc --proto_path=./ --python_out=./ nuance/nlu/v1/interpretation-common.proto

$ python -m grpc_tools.protoc --proto_path=./ --python_out=./ nuance/nlu/v1/single-intent-interpretation.proto

$ python -m grpc_tools.protoc --proto_path=./ --python_out=./ nuance/nlu/v1/multi-intent-interpretation.proto

$ echo "generate the stubs for supporting files"

$ python -m grpc_tools.protoc --proto_path=./ --python_out=./ nuance/rpc/error_details.proto

$ python -m grpc_tools.protoc --proto_path=./ --python_out=./ nuance/rpc/status.proto

$ python -m grpc_tools.protoc --proto_path=./ --python_out=./ nuance/rpc/status_code.proto

Final structure of protos and stubs for DLGaaS files after unzip and protoc compilation

├── Your client apps here

├── nuance_dialog_dialogservice_protos_v1.zip

└── nuance

├── dlg

│ └── v1

│ ├── common

│ │ ├── dlg_common_messages.proto

│ │ └── dlg_common_messages_pb2.py

│ ├── dlg_interface.proto

│ ├── dlg_interface_pb2.py

│ ├── dlg_interface_pb2_grpc.py

│ ├── dlg_messages.proto

│ └── dlg_messages_pb2.py

│

├── asr

│ └── v1

│ ├── recognizer_pb2_grpc.py

│ ├── recognizer_pb2.py

│ ├── recognizer.proto

│ ├── resource_pb2.py

│ ├── resource.proto

│ ├── result_pb2.py

│ └── result.proto

│

├── tts

│ └── v1

│ ├── nuance_tts_v1.proto

│ ├── nuance_tts_v1_pb2.py

│ └── nuance_tts_v1_pb2_grpc.py

├── nlu

│ └── v1

│ ├── interpretation_common_pb2.py

│ ├── interpretation-common.proto

│ ├── multi_intent_interpretation_pb2.py

│ ├── multi-intent-interpretation.proto

│ ├── result.proto

│ ├── result_pb2.py

│ ├── runtime.proto

│ ├── runtime_pb2.py

│ ├── runtime_pb2_grpc.py

│ ├── single_intent_interpretation_pb2.py

│ └── single-intent-interpretation.proto

└──rpc

├── error_details.proto

├── error_details_pb2.py

├── status.proto

├── error_details_pb2.py

├── status_code.proto

└── status_code_pb2.py

The basic steps in using the Dialog as a Service gRPC protocol are:

- Install gRPC for the programming language of your choice, including C++, Java, Python, Go, Ruby, C#, Node.js, and others. See gRPC Documentation for a complete list and instructions on using gRPC with each language.

- Download the zip file containing the gRPC .proto files for the Dialog service. These files contain a generic version of the functions or classes that can interact with the dialog service.

See Note about packaged proto files below. - Unzip the file in a location that your applications can access, for example in the directory that contains or will contain your client apps.

- Generate client stub files in your programming language from the proto files. Depending on your programming language, the stubs may consist of one file or multiple files per proto file. These stub files contain the methods and fields from the proto files as implemented in your programming language. You will consult the stubs in conjunction with the proto files. See gRPC API.

- Write your client app, referencing the functions or classes in the client stub files. See Client app development for details and a scenario.

Note about packaged proto files

The DLGaaS API provides features that require that you install the ASR, TTS, and NLU proto files, as well as certain supporting files:

- The StreamInput request performs recognition on streamed audio using ASRaaS and requests speech synthesis using TTSaaS.

- The ExecuteRequest allows you to specify interpretation results in the NLUaaS format.

For your convenience, these files are packaged with the DLGaaS proto files available here, and this documentation provides instructions for generating the stub files.

As such, the following files are packaged with this documentation:

- For DLGaaS API

- nuance/dlg/v1/dlg_interface.proto

- nuance/dlg/v1/dlg_messages.proto

- nuance/dlg/v1/common/dlg_common_messages.proto

- For ASRaaS audio streaming

- nuance/asr/v1/recognizer.proto

- nuance/asr/v1/resource.proto

- nuance/asr/v1/result.proto

- For TTSaaS streaming

- nuance/tts/v1/nuance_tts_v1.proto

- For NLUaaS interpretation

- nuance/nlu/v1/runtime.proto

- nuance/nlu/v1/result.proto

- nuance/nlu/v1/interpretation-common.proto

- nuance/nlu/v1/single-intent-interpretation.proto

- nuance/nlu/v1/multi-intent-interpretation.proto

- Supporting files for other services

- nuance/rpc/error_details.proto

- nuance/rpc/status.proto

- nuance/rpc/status_code.proto

Client app development

This section describes the main steps in a typical client application that interacts with a Mix.dialog application. In particular, it provides an overview of the different methods and messages used in a sample order coffee application.

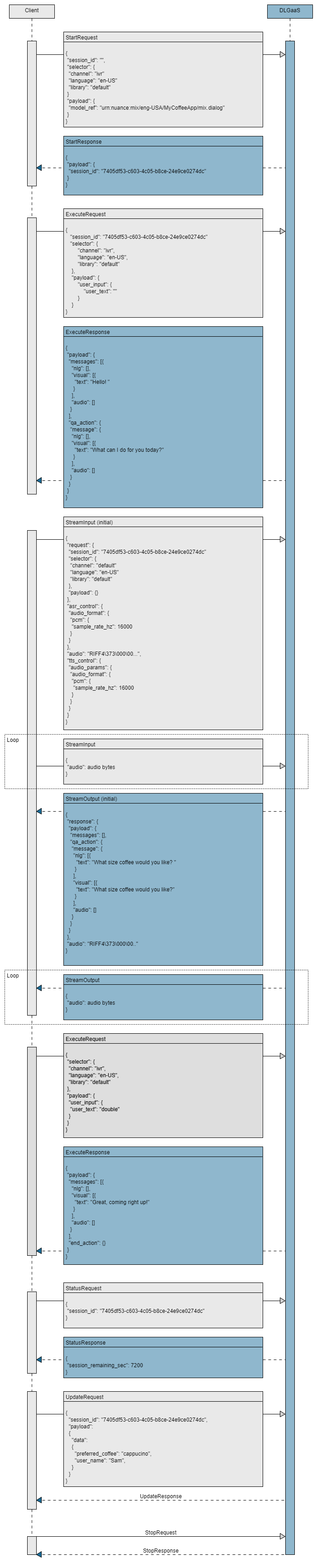

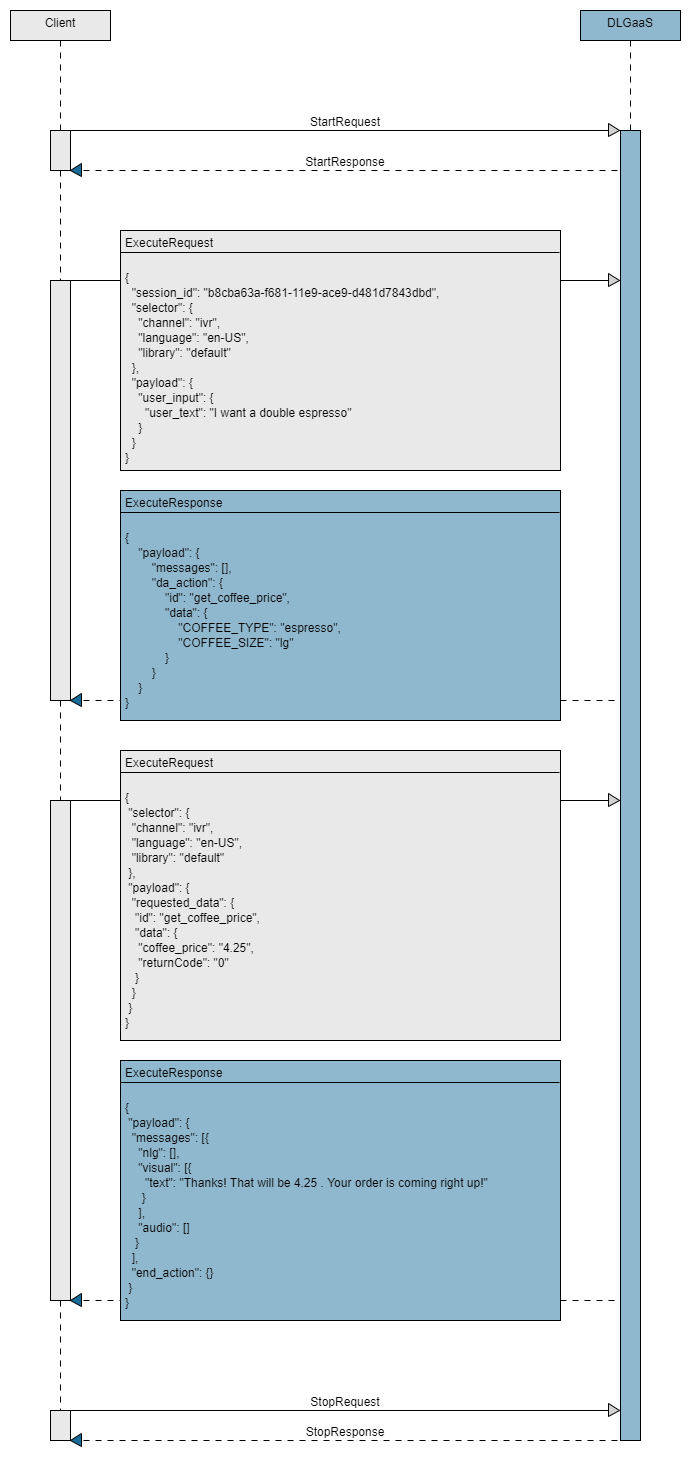

Sample dialog exchange

To illustrate how to use the API, this document uses the following simple dialog exchange between an end user and a dialog application:

- System: Hello! Welcome to the coffee app. What type of coffee would you like?

- User: I want an espresso.

- System: And in what size would like that?

- User: Double.

- System: Thanks, your order is coming right up!

Overview

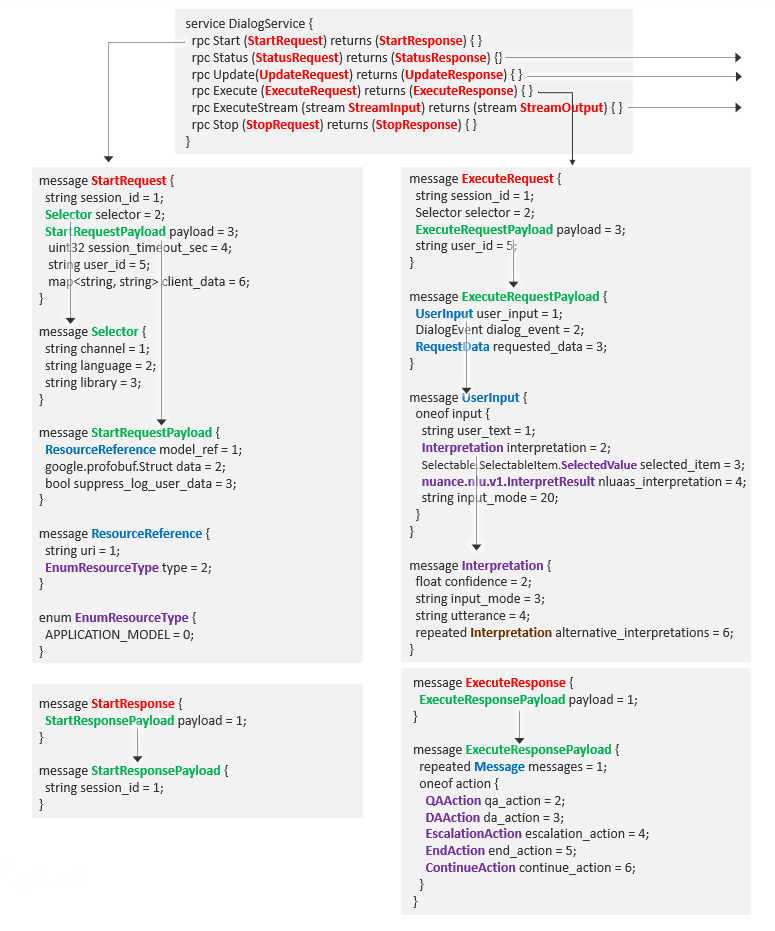

The DialogService is the main entry point to the Nuance Dialog service.

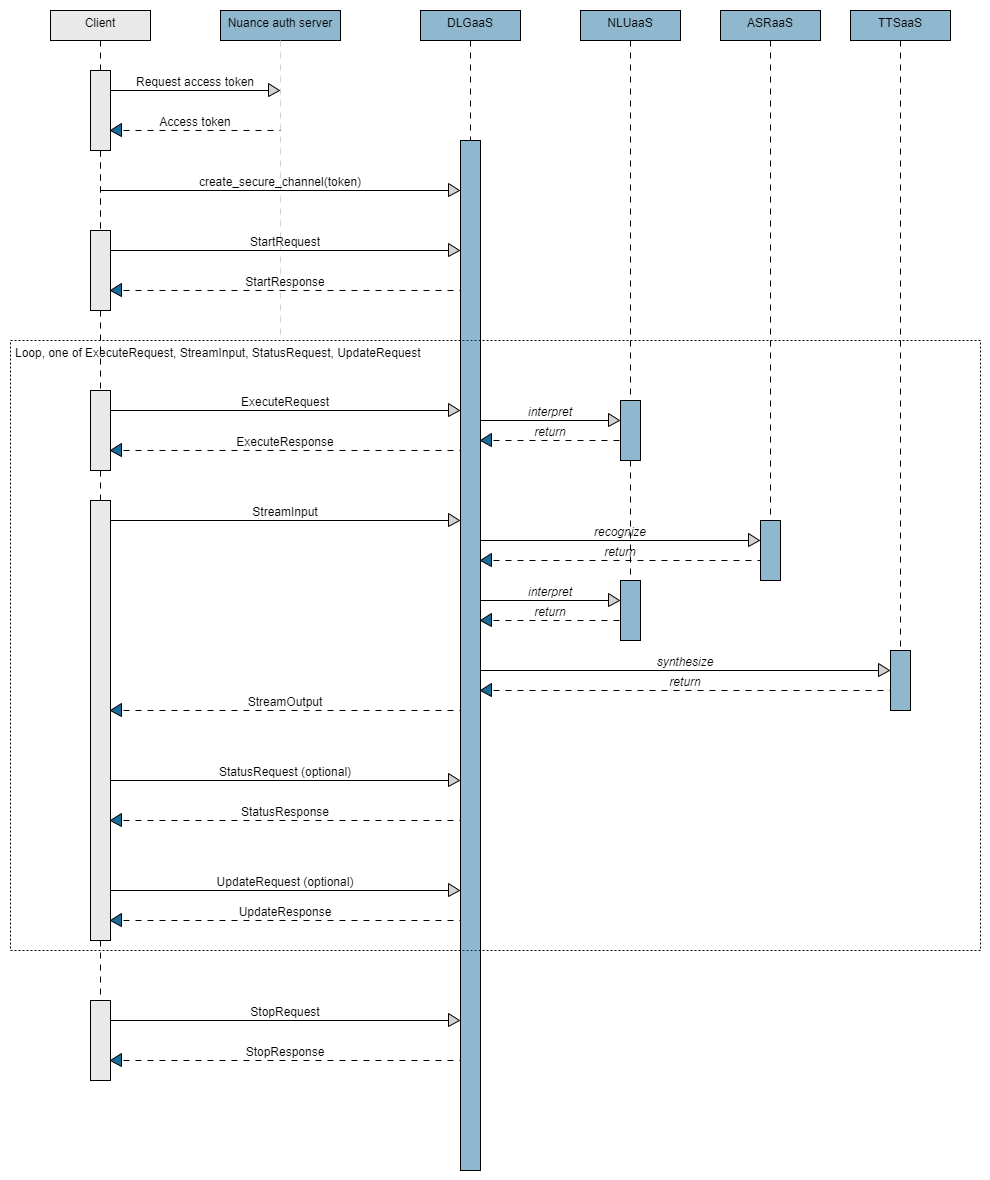

A typical workflow for accessing a dialog application at runtime is as follows:

- The client application requests the access token from the Nuance authorization server.

- The client application opens a secure channel using the access token.

- The client application creates a new conversation sending a StartRequest to the DialogService. The service returns a session ID, which is used at each interaction to keep the same conversation. The client application also sends an ExecuteRequest message with the session ID and an empty payload to kick off the conversation.

- As the user interacts with the dialog, the client application sends one of the following messages, as often as necessary:

- The ExecuteRequest message for text input and data exchange.

An ExecuteResponse is returned to the client application when a question and answer node, a data access node, or an external actions node is encountered in the dialog flow. - The StreamInput message for audio input (ASR) and/or audio output (TTS).

A StreamOutput is returned to the client application.

- The ExecuteRequest message for text input and data exchange.

- Optionally, at any point during the conversation, the client application can check that the session is still active by sending a StatusRequest message.

- Optionally, at any point during the conversation, the client application can update session variables by sending an UpdateRequest message.

- The client application closes the conversation by sending a StopRequest message.

This workflow is shown in the following high-level sequence flow:

(Click the image for a close-up view)

For a detailed sequence flow diagram, see Detailed sequence flow.

Step 1. Generate token

Get token and run simple Mix client (run-simple-mix-client.sh)

#!/bin/bash

# Remember to change the colon (:) in your CLIENT_ID to code %3A

CLIENT_ID="appID%3ANMDPTRIAL_your_name_company_com_20201102T144327123022%3Ageo%3Aus%3AclientName%3Adefault"

SECRET="5JEAu0YSAjV97oV3BWy2PRofy6V8FGmywiUbc0UfkGE"

export MY_TOKEN="`curl -s -u "$CLIENT_ID:$SECRET" "https://auth.crt.nuance.co.uk/oauth2/token" \

-d "grant_type=client_credentials" -d "scope=dlg" \

| python -c 'import sys, json; print(json.load(sys.stdin)["access_token"])'`"

python dlg_client.py --serverUrl "dlg.api.nuance.co.uk:443" --token $MY_TOKEN --modelUrn "$1" --textInput "$2"

Nuance Mix uses the OAuth 2.0 protocol for authorization. To call the Dialog runtime service, your client application must request and then provide an access token. The token expires after a short period of time so must be regenerated frequently.

Your client application uses the client ID and secret from the Mix.dashboard (see Prerequisites from Mix) to generate an access token from the Nuance authorization server, available at the following URL:

https://auth.crt.nuance.co.uk/oauth2/token

The token may be generated in several ways, either as part of the client application or as a script file. This Python example uses a Linux script to generate a token and store it in an environment variable. The token is then passed to the application, where it is used to create a secure connection to the Dialog service.

The curl command in these scripts generates a JSON object including the access_token field that contains the token, then uses Python tools to extract the token from the JSON. The resulting environment variable contains only the token.

In this scenario, the colon (:) in the client ID must be changed to the code %3A so curl can parse the value correctly:

appID:NMDPTRIAL_alex_smith_nuance_com_20190919T190532:geo:qa:clientName:default

-->

appID%3ANMDPTRIAL_alex_smith_company_com_20190919T190532%3Ageo%3Aqa%3AclientName%3Adefault

Step 2. Authorize the service

def create_channel(args):

log.debug("Adding CallCredentials with token %s" % args.token)

call_credentials = grpc.access_token_call_credentials(args.token)

log.debug("Creating secure gRPC channel")

channel_credentials = grpc.ssl_channel_credentials()

channel_credentials = grpc.composite_channel_credentials(channel_credentials, call_credentials)

channel = grpc.secure_channel(args.serverUrl, credentials=channel_credentials)

return channel

You authorize the service by creating a secure gRPC channel, providing:

- The URL of the Dialog service

- The access token

Step 3. Start the conversation

def start_request(stub, model_ref_dict, session_id, selector_dict={}, timeout):

selector = Selector(channel=selector_dict.get('channel'),

library=selector_dict.get('library'),

language=selector_dict.get('language'))

start_payload = StartRequestPayload(model_ref=model_ref_dict)

start_req = StartRequest(session_id=session_id,

selector=selector,

payload=start_payload,

session_timeout_sec=timeout)

log.debug(f'Start Request: {start_req}')

start_response, call = stub.Start.with_call(start_req)

response = MessageToDict(start_response)

log.debug(f'Start Request Response: {response}')

return response, call

To start the conversation, you need to do two things:

- Start a new Dialog session

- Kick off the conversation

Start a new session

Before you can start the new conversation, the client app first needs to send a StartRequest message with the following information:

- An empty session ID, which tells the Dialog service to create a new ID for this conversation.

- The selector, which provides the channel, library, and language used for this conversation. This information was determined by the dialog designer in the Mix.dialog tool.

- The StartRequestPayload, which contains the reference to the model, provided as a ResourceReference. For a Mix application, this is the URN of the Dialog model to use for this interaction. The StartRequestPayload can also be used to set session data.

- An optional

user_id, which identifies a specific user within the application. See UserID for details. - An optional

client_data, used to inject data in call logs. This data will be added to the call logs but will not be masked. - An optional session timeout value,

session_timeout_sec(in seconds), after which the session is terminated. The default value is 900 (15 minutes) and the maximum is 259200 (72 hours).

A new unique session ID is generated and returned as a response; for example:

'payload': {'session_id': 'b8cba63a-f681-11e9-ace9-d481d7843dbd'}

The client app must then use the same session ID in all subsequent requests that apply to this conversation.

Additional notes on session IDs

- The session ID is often used for logging purposes, allowing you to easily locate the logs for a session.

- If the client app specifies a session ID in the StartRequest message, then the same ID is returned in the response.

- If passing in your own session ID in the StartRequest message, please follow these guidelines:

- The session Id should not begin or end with white space or tab

- The session Id should not begin or end with hyphens

Kick off the conversation

The client app needs to signal to Dialog to start the conversation.

Send an empty ExecuteRequest to Dialog to get started. Include the session ID but leave the user_text field of the payload user_input empty.

payload_dict = {

"user_input": {

"user_text": None

}

}

response, call = execute_request(stub,

session_id=session_id,

selector_dict=selector_dict,

payload_dict=payload_dict

)

Step 4. Step through the dialog

At each step, the client app sends input to advance the dialog to the next step. This can take one of four different forms depending on the place in the dialog.

- Send text input from user with Execute

- Send audio input from user with ExecuteStream

- Send requested data from client-side data fetch with Execute

- Signal to proceed with server-side data fetch with Execute

Step 4a. Interact with the user (text input)

def execute_request(stub, session_id, selector_dict={}, payload_dict={}):

selector = Selector(channel=selector_dict.get('channel'),

library=selector_dict.get('library'),

language=selector_dict.get('language'))

input = UserInput(user_text=payload_dict.get('user_input').get('userText'))

execute_payload = ExecuteRequestPayload(

user_input=input)

execute_request = ExecuteRequest(session_id=session_id,

selector=selector,

payload=execute_payload)

log.debug(f'Execute Request: {execute_payload}')

execute_response, call = stub.Execute.with_call(execute_request)

response = MessageToDict(execute_response)

log.debug(f'Execute Response: {response}')

return response, call

Interactions that use text input and do not require streaming are done through multiple ExecuteRequest calls, providing the following information:

- The session ID returned by the StartRequest.

- The selector, which provides the channel, library, and language used for this conversation. (This is optional; it is required only if the channel, library, or language values have changed since they were last sent.)

- The ExecuteRequestPayload, which can contain the following fields:

- user_input: Provides the input to the Dialog engine. For the initial ExecuteRequest, the payload is empty to get the initial message. For the subsequent requests, the input provided depends on how text interpretation is performed. See Interpreting text user input for more information.

- dialog_event: Can be used to pass in events that will drive the dialog flow. If no event is passed, the operation is assumed to be successful.

- requested_data: Contains data that was previously requested by the Dialog.

- An optional

user_id, which identifies a specific user within the application. See UserID for details.

ExecuteResponse for output

The dialog runtime app returns the Execute response payload when a question and answer node, a data access node, or an external actions node is encountered in the dialog flow. This payload provides the actions to be performed by the client application.

There are many types of actions that can be requested by the dialog application:

- Messages action—Indicates that a message should be played to the user. See Message actions.

- Data access action—Indicates that the dialog needs data from the client to continue the flow. The dialog application obtains the data it needs from the client using the data access gRPC API. The client application is responsible for obtaining the data from a data source. See Data access actions

- Question and answer action—Tells the client app to play a message and to return the user input to the dialog. See Question and answer actions.

- End action—Indicates the end of the dialog. See End actions.

- Escalation action—Provides data that can be used, for example, to escalate to an IVR agent.

- Continue action—Prompts the client application to respond to initiate a backend data exchange on the server side. Provides a message to play to the user to smooth over any latency while waiting for the data exchange.

For example, the following question and answer action indicates that the message "Hello! How can I help you today?" must be displayed to the user:

Note: Examples in this section are shown in JSON format for readability. However, in an actual client application, content is sent and received as protobuf objects.

"payload": {

"messages": [],

"qa_action": {

"message": {

"nlg": [],

"visual": [{

"text": "Hello! How can I help you today?"

}

],

"audio": []

}

}

}

A question and answer node expects input from the user to continue the flow. This can be provided as text (either to be interpreted by Nuance or as already interpreted input) in the next ExecuteRequest call. To provide the user input as audio, use the StreamInput request, as described in Step 4b.

Step 4b. Interact with the user (using audio)

def execute_stream_request(args, stub, session_id, selector_dict={}):

# Receive stream outputs from Dialog

stream_outputs = stub.ExecuteStream(build_stream_input(args, session_id, selector_dict))

log.debug(f'execute_responses: {stream_outputs}')

responses = []

audio = bytearray(b'')

for stream_output in stream_outputs:

if stream_output:

# Extract execute response from the stream output

response = MessageToDict(stream_output.response)

if response:

responses.append(response)

audio += stream_output.audio.audio

return responses, audio

def build_stream_input(args, session_id, selector_dict):

selector = Selector(channel=selector_dict.get('channel'),

library=selector_dict.get('library'),

language=selector_dict.get('language'))

try:

with open(args.audioFile, mode='rb') as file:

audio_buffer = file.read()

# Hard code packet_size_byte for simplicity sake (approximately 100ms of 16KHz mono audio)

packet_size_byte = 3217

audio_size = sys.getsizeof(audio_buffer)

audio_packets = [ audio_buffer[x:x + packet_size_byte] for x in range(0, audio_size, packet_size_byte) ]

# For simplicity sake, let's assume the audio file is PCM 16KHz

user_input = None

asr_control_v1 = {'audio_format': {'pcm': {'sample_rate_hz': 16000}}}

except:

# Text interpretation as normal

asr_control_v1 = None

audio_packets = [b'']

user_input = UserInput(user_text=args.textInput)

# Build execute request object

execute_payload = ExecuteRequestPayload(user_input=user_input)

execute_request = ExecuteRequest(session_id=session_id,

selector=selector,

payload=execute_payload)

# For simplicity sake, let's assume the audio file is PCM 16KHz

tts_control_v1 = {'audio_params': {'audio_format': {'pcm': {'sample_rate_hz': 16000}}}}

first_packet = True

for audio_packet in audio_packets:

if first_packet:

first_packet = False

# Only first packet should include the request header

stream_input = StreamInput(

request=execute_request,

asr_control_v1=asr_control_v1,

tts_control_v1=tts_control_v1,

audio=audio_packet

)

log.debug(f'Stream input initial: {stream_input}')

else:

stream_input = StreamInput(audio=audio_packet)

yield stream_input

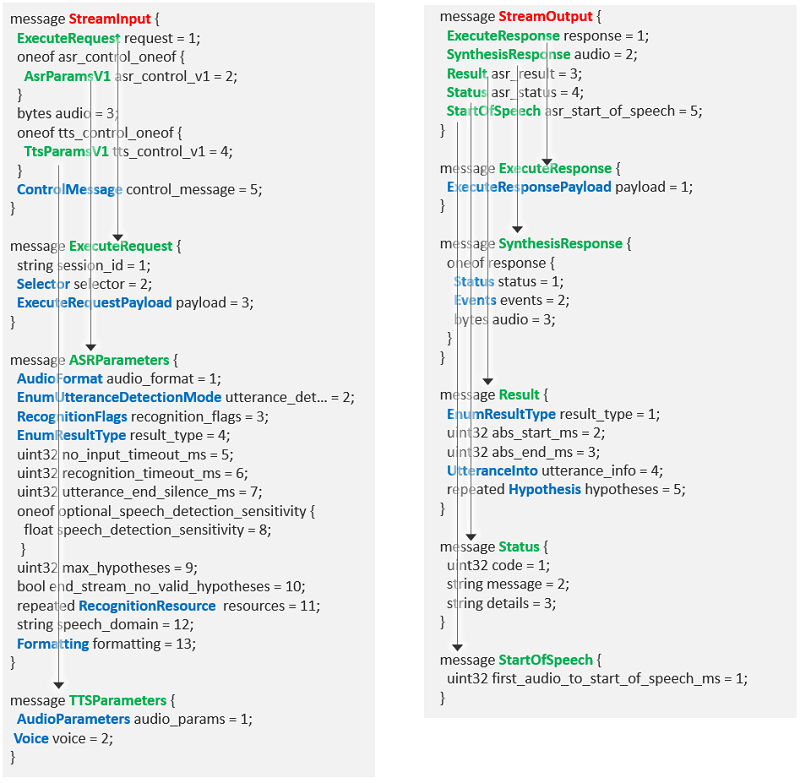

Interactions with the user that require audio streaming are done through multiple ExecuteStream calls. ExecuteStream takes in a StreamInput message and returns a StreamOutput message. This provides a streaming audio option to handle audio input and audio output in a smooth way.

Streaminput for input

The StreamInput message can be used to:

- Provide the user input requested by a question and answer action as audio input. In this scenario, audio is streamed to ASRaaS, which performs recognition on the audio. The recognition results are sent to NLUaaS, which provides the interpretation. This is then returned to DLGaaS, which continues the dialog flow.

- Configure, and initiate synthesis of an output message into audio output using text-to-speech (TTS). In this scenario, if a TTS message has been defined in Mix.dialog for this interaction, TTSaaS synthesizes the message and streams the audio back to the client application in a series of StreamOutput calls.

The StreamInput method has the following fields:

- request: Provides the ExecuteRequest with the session ID, selector, and request payload.

- asr_control_v1: Provides the parameters to be forwarded to the ASR service, such as the audio format, recognition flags, recognition resources to use (such as DLMs, wordsets, and speaker profiles), whether results are returned, and so on. Setting

asr_control_v1enables streaming of input audio. audio: Audio to stream for speech recognition.- tts_control_v1: Provides the parameters to be forwarded to the TTS service, such as the audio encoding and voice to use for speech synthesis. Setting

tts_control_v1enables streaming of audio output. - control_message: (Optional) Message to start the recognition no-input timer if it was disabled with a stall_timers recognition flag in asr_control_v1.

Streamoutput for output

ExecuteStream returns a StreamOutput, which has the following fields:

- response, which provides the ExecuteResponse

audio, which is the audio returned by TTS (if TTS was requested)asr_result, which contains the transcription resultasr_status, which indicates the status of the transcriptionasr_start_of_speech, which contains the start-of-speech message

Note that speech responses do not necessarily need to use synthesized speech from TTS. Another option is to use recorded speech audio files. For more information, see Providing speech response using recorded speech audio.

Additional details on handling speech input and output in your application are available under Reference topics.

Step 4c. Send requested data

If the last ExecuteResponse included a data acess action requesting client-side fetch of specified data, the client app needs to fetch the data and returns it as part of the payload of an ExecuteRequest under requested_data. The payload will otherwise be empty, not containing user input. This happens when the dialog gets to a data access node that is configured for client-side data access. For more information about this, see Data access actions.

payload_dict = {

"requested_data": {

"id": "get_coffee_price",

"data": {

"coffee_price": "4.25",

"returnCode": "0"

}

}

}

response, call = execute_request(stub,

session_id=session_id,

selector_dict=selector_dict,

payload_dict=payload_dict

)

Step 4d. Proceed with server-side data fetch

If Dialog is carrying out a data fetch on the server-side that will take some time, and a latency message has been configured in Mix.dialog, Dialog can send messages to play to fill up the time and make the user experience waiting more pleasant as part of a Continue action.

To move on, the client app has to signal that it is ready for Dialog to carry on when it is ready. As you would when you first kick off a conversation, send an ExecuteRequest that includes the session ID but leave the user_text field of the payload user_input empty.

payload_dict = {

"user_input": {

"user_text": None

}

}

response, call = execute_request(stub,

session_id=session_id,

selector_dict=selector_dict,

payload_dict=payload_dict

)

Step 5. Check session status

def status_request(stub, session_id):

status_request = StatusRequest(session_id=session_id)

log.debug(f'Status Request: {status_request}')

status_response, call = stub.Status.with_call(status_request)

response = MessageToDict(status_response)

log.debug(f'Status Response: {response}')

return response, call

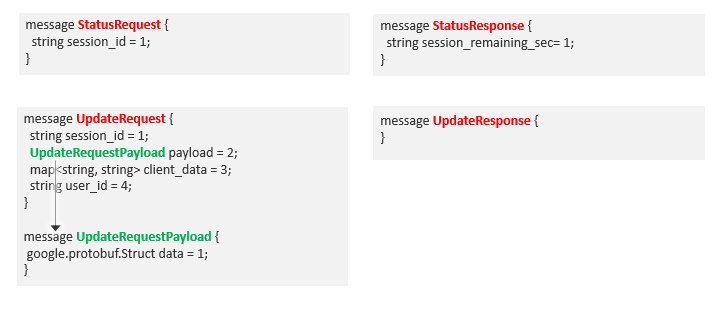

In a client application using asynchronous communication modalities such as text messaging, the client will not always necessarily know whether a session is still active, or whether it has expired. To check whether the session is still active, and if so, how much time is left in the ongoing session, the client app sends a StatusRequest message. This message has one field:

- The session ID returned by the StartResponse.

Some notes:

- This request can be sent at any time once a session is created. No user input is required, and this request does not trigger an event in the dialog and does not change the dialog state.

- This request can be called before an Execute, ExecuteStream, or Update call to check that the session is still active before sending the request.

A StatusResponse message is returned giving the approximate time left in the session. The status code can be one of the following:

- OK: The specified session was found.

- NOT_FOUND: The session specified could not be found.

Step 6. Update session data

def update_request(stub, session_id, update_data, client_data, user_id):

update_payload = UpdateRequestPayload(

data=update_data)

update_request = UpdateRequest(session_id=session_id,

payload=update_payload,

client_data=client_data,

user_id=user_id)

log.debug(f'Update Request: {update_request}')

update_response, call = stub.Update.with_call(update_request)

response = MessageToDict(update_response)

log.debug(f'Update Response: {response}')

return response, call

To update session data, the client app sends the UpdateRequest message; this message has the following fields:

- The session ID returned by the StartResponse.

- The UpdateRequestPayload, which contains the key-value pairs of variables to update. See Exchanging session data for details. The variables sent will be logged in the call logs, unless

suppressLogUserDatais set tofalsein the StartRequest. If one of the variables updated is identified as sensitive, its value will be masked in the log events. - An optional

client_data, used to inject data in call logs. This data will be added to the call logs but will not be masked. - An optional

user_id, which identifies a specific user within the application. See UserID for details.

Some notes:

- This request can be sent at any time once a session is created. No user input is required, and this request does not trigger an event in the dialog and does not change the dialog state.

- Session variables sent though the UpdateRequest payload should be defined in the Mix.dialog project. If they are not, the response will still be successful but no variables will be updated.

- This request resets the session timeout if the payload is empty.

- This request is usually called before an ExecuteRequest.

An empty UpdateResponse is returned. The status code can be one of the following:

- OK: The UpdateRequest was successful.

- NOT_FOUND: The session specified could not be found.

Step 7. Stop the conversation

def stop_request(stub, session_id=None):

stop_req = StopRequest(session_id=session_id)

log.debug(f'Stop Request: {stop_req}')

stop_response, call = stub.Stop.with_call(stop_req)

response = MessageToDict(stop_response)

log.debug(f'Stop Response: {response}')

return response, call

To stop the conversation, the client app sends the StopRequest message; this message has the following fields:

- The session ID returned by the StartRequest.

- An optional

user_id, which identifies a specific user within the application. See UserID for details.

The StopRequest message removes the session state, so the session ID for this conversation should not be used in the short term for any new interactions, to prevent any confusion when analyzing logs.

Note: If the dialog application concludes with an External Actions node of type End, your client application does not need to send the StopRequest message, since the End node closes the session. If both the StopRequest message is sent and the dialog application includes an End node, the StatusCode.NOT_FOUND error code is returned, since the session is closed and could not be found.

Detailed sequence flow

Sample Python app

dlg_client.py sample app

import argparse

import logging

import uuid

from google.protobuf.json_format import MessageToJson, MessageToDict

from grpc import StatusCode

from nuance.dlg.v1.common.dlg_common_messages_pb2 import *

from nuance.dlg.v1.dlg_messages_pb2 import *

from nuance.dlg.v1.dlg_interface_pb2 import *

from nuance.dlg.v1.dlg_interface_pb2_grpc import *

log = logging.getLogger(__name__)

def parse_args():

parser = argparse.ArgumentParser(

prog="dlg_client.py",

usage="%(prog)s [-options]",

add_help=False,

formatter_class=lambda prog: argparse.HelpFormatter(

prog, max_help_position=45, width=100)

)

options = parser.add_argument_group("options")

options.add_argument("-h", "--help", action="help",

help="Show this help message and exit")

options.add_argument("--token", nargs="?", help=argparse.SUPPRESS)

options.add_argument("-s", "--serverUrl", metavar="url", nargs="?",

help="Dialog server URL, default=localhost:8080", default='localhost:8080')

options.add_argument('--modelUrn', nargs="?",

help="Dialog App URN, e.g. urn:nuance-mix:tag:model/A2_C16/mix.dialog")

options.add_argument("--textInput", metavar="file", nargs="?",

help="Text to preform interpretation on")

return parser.parse_args()

def create_channel(args):

log.debug("Adding CallCredentials with token %s" % args.token)

call_credentials = grpc.access_token_call_credentials(args.token)

log.debug("Creating secure gRPC channel")

channel_credentials = grpc.ssl_channel_credentials()

channel_credentials = grpc.composite_channel_credentials(channel_credentials, call_credentials)

channel = grpc.secure_channel(args.serverUrl, credentials=channel_credentials)

return channel

def read_session_id_from_response(response_obj):

try:

session_id = response_obj.get('payload').get('sessionId', None)

except Exception as e:

raise Exception("Invalid JSON Object or response object")

if session_id:

return session_id

else:

raise Exception("Session ID is not present or some error occurred")

def start_request(stub, model_ref_dict, session_id, selector_dict={}):

selector = Selector(channel=selector_dict.get('channel'),

library=selector_dict.get('library'),

language=selector_dict.get('language'))

start_payload = StartRequestPayload(model_ref=model_ref_dict)

start_req = StartRequest(session_id=session_id,

selector=selector,

payload=start_payload)

log.debug(f'Start Request: {start_req}')

start_response, call = stub.Start.with_call(start_req)

response = MessageToDict(start_response)

log.debug(f'Start Request Response: {response}')

return response, call

def execute_request(stub, session_id, selector_dict={}, payload_dict={}):

selector = Selector(channel=selector_dict.get('channel'),

library=selector_dict.get('library'),

language=selector_dict.get('language'))

input = UserInput(user_text=payload_dict.get('user_input').get('userText'))

execute_payload = ExecuteRequestPayload(

user_input=input)

execute_request = ExecuteRequest(session_id=session_id,

selector=selector,

payload=execute_payload)

log.debug(f'Execute Request: {execute_payload}')

execute_response, call = stub.Execute.with_call(execute_request)

response = MessageToDict(execute_response)

log.debug(f'Execute Response: {response}')

return response, call

def execute_stream_request(args, stub, session_id, selector_dict={}):

# Receive stream outputs from Dialog

stream_outputs = stub.ExecuteStream(build_stream_input(args, session_id, selector_dict))

log.debug(f'execute_responses: {stream_outputs}')

responses = []

audio = bytearray(b'')

for stream_output in stream_outputs:

if stream_output:

# Extract execute response from the stream output

response = MessageToDict(stream_output.response)

if response:

responses.append(response)

audio += stream_output.audio.audio

return responses, audio

def build_stream_input(args, session_id, selector_dict):

selector = Selector(channel=selector_dict.get('channel'),

library=selector_dict.get('library'),

language=selector_dict.get('language'))

try:

with open(args.audioFile, mode='rb') as file:

audio_buffer = file.read()

# Hard code packet_size_byte for simplicity sake (approximately 100ms of 16KHz mono audio)

packet_size_byte = 3217

audio_size = sys.getsizeof(audio_buffer)

audio_packets = [ audio_buffer[x:x + packet_size_byte] for x in range(0, audio_size, packet_size_byte) ]

# For simplicity sake, let's assume the audio file is PCM 16KHz

user_input = None

asr_control_v1 = {'audio_format': {'pcm': {'sample_rate_hz': 16000}}}

except:

# Text interpretation as normal

asr_control_v1 = None

audio_packets = [b'']

user_input = UserInput(user_text=args.textInput)

# Build execute request object

execute_payload = ExecuteRequestPayload(user_input=user_input)

execute_request = ExecuteRequest(session_id=session_id,

selector=selector,

payload=execute_payload)

# For simplicity sake, let's assume the audio file is PCM 16KHz

tts_control_v1 = {'audio_params': {'audio_format': {'pcm': {'sample_rate_hz': 16000}}}}

first_packet = True

for audio_packet in audio_packets:

if first_packet:

first_packet = False

# Only first packet should include the request header

stream_input = StreamInput(

request=execute_request,

asr_control_v1=asr_control_v1,

tts_control_v1=tts_control_v1,

audio=audio_packet

)

log.debug(f'Stream input initial: {stream_input}')

else:

stream_input = StreamInput(audio=audio_packet)

yield stream_input

def stop_request(stub, session_id=None):

stop_req = StopRequest(session_id=session_id)

log.debug(f'Stop Request: {stop_req}')

stop_response, call = stub.Stop.with_call(stop_req)

response = MessageToDict(stop_response)

log.debug(f'Stop Response: {response}')

return response, call

def main():

args = parse_args()

log_level = logging.DEBUG

logging.basicConfig(

format='%(asctime)s %(levelname)-5s: %(message)s', level=log_level)

with create_channel(args) as channel:

stub = DialogServiceStub(channel)

model_ref_dict = {

"uri": args.modelUrn,

"type": 0

}

selector_dict = {

"channel": "default",

"language": "en-US",

"library": "default"

}

response, call = start_request(stub,

model_ref_dict=model_ref_dict,

session_id=None,

selector_dict=selector_dict

)

session_id = read_session_id_from_response(response)

log.debug(f'Session: {session_id}')

assert call.code() == StatusCode.OK

log.debug(f'Initial request, no input from the user to get initial prompt')

payload_dict = {

"user_input": {

"userText": None

}

}

response, call = execute_request(stub,

session_id=session_id,

selector_dict=selector_dict,

payload_dict=payload_dict

)

assert call.code() == StatusCode.OK

log.debug(f'Second request, passing in user input')

payload_dict = {

"user_input": {

"userText": args.textInput

}

}

response, call = execute_request(stub,

session_id=session_id,

selector_dict=selector_dict,

payload_dict=payload_dict

)

assert call.code() == StatusCode.OK

response, call = stop_request(stub,

session_id=session_id

)

assert call.code() == StatusCode.OK

if __name__ == '__main__':

main()

The sample Python application consists of these files:

- dlg_client.py: The main client application file.

- run-mix-client.sh: A script file that generates the access token and runs the application.

Requirements

To run this sample app, you need:

- Python 3.6 or later. Use

python3 --versionto check which version you have. - Credentials from Mix (a client ID and secret) to generate the access token. See Prerequisites from Mix.

Procedure

To run this sample application:

Step 1. Download the sample app here and unzip it in a working directory (for example, /home/userA/dialog-sample-python-app).

Step 2. Download the gRPC .proto files here and unzip the files in the sample app working directory.

Step 3. Navigate to the sample app working directory and install the required dependencies. The details will depend on the platform and command shell you are using.

For a POSIX OS using bash:

python3 -m venv env

source env/bin/activate

pip install --upgrade pip

pip install grpcio

pip install grpcio-tools

pip install uuid

For Windows using cmd.exe:

python -m venv env

env/Scripts/activate

python -m pip install --upgrade pip

pip install grpcio

pip install grpcio-tools

pip install uuid

For Windows using Git Bash command shell, the details are almost the same, but substitute source env/Scripts/activate for env/Scripts/activate.

Step 4. Generate the stubs:

echo "Pulling support files"

mkdir -p google/api

curl https://raw.githubusercontent.com/googleapis/googleapis/master/google/api/annotations.proto > google/api/annotations.proto

curl https://raw.githubusercontent.com/googleapis/googleapis/master/google/api/http.proto > google/api/http.proto

echo "generate the stubs for support files"

python -m grpc_tools.protoc --proto_path=./ --python_out=./ google/api/http.proto

python -m grpc_tools.protoc --proto_path=./ --python_out=./ google/api/annotations.proto

echo "generate the stubs for the DLGaaS gRPC files"

python -m grpc_tools.protoc --proto_path=./ --python_out=. --grpc_python_out=. nuance/dlg/v1/dlg_interface.proto

python -m grpc_tools.protoc --proto_path=./ --python_out=. nuance/dlg/v1/dlg_messages.proto

python -m grpc_tools.protoc --proto_path=./ --python_out=. nuance/dlg/v1/common/dlg_common_messages.proto

echo "generate the stubs for the ASRaaS gRPC files"

python -m grpc_tools.protoc --proto_path=./ --python_out=. --grpc_python_out=. nuance/asr/v1/recognizer.proto

python -m grpc_tools.protoc --proto_path=./ --python_out=. nuance/asr/v1/resource.proto

python -m grpc_tools.protoc --proto_path=./ --python_out=. nuance/asr/v1/result.proto

echo "generate the stubs for the TTSaaS gRPC files"

python -m grpc_tools.protoc --proto_path=./ --python_out=. --grpc_python_out=. nuance/tts/v1/nuance_tts_v1.proto

echo "generate the stubs for the NLUaaS gRPC files"

python -m grpc_tools.protoc --proto_path=./ --python_out=. --grpc_python_out=. nuance/nlu/v1/runtime.proto

python -m grpc_tools.protoc --proto_path=./ --python_out=. nuance/nlu/v1/result.proto

python -m grpc_tools.protoc --proto_path=./ --python_out=. nuance/nlu/v1/interpretation-common.proto

python -m grpc_tools.protoc --proto_path=./ --python_out=. nuance/nlu/v1/single-intent-interpretation.proto

python -m grpc_tools.protoc --proto_path=./ --python_out=. nuance/nlu/v1/multi-intent-interpretation.proto

echo "generate the stubs for supporting files"

python -m grpc_tools.protoc --proto_path=./ --python_out=./ nuance/rpc/error_details.proto

python -m grpc_tools.protoc --proto_path=./ --python_out=./ nuance/rpc/status.proto

python -m grpc_tools.protoc --proto_path=./ --python_out=./ nuance/rpc/status_code.proto

Step 5. Edit the run script, run-mix-client.sh, to add your CLIENT_ID and SECRET. These are your Mix credentials as described in Generate token.

CLIENT_ID="appID%3A...ENTER MIX CLIENT_ID..."

SECRET="...ENTER MIX SECRET..."

export MY_TOKEN="`curl -s -u "$CLIENT_ID:$SECRET" \

"https://auth.crt.nuance.co.uk/oauth2/token" \

-d "grant_type=client_credentials" -d "scope=dlg" \

| python -c 'import sys, json; print(json.load(sys.stdin)["access_token"])'"

python dlg_client.py --serverUrl "dlg.api.nuance.co.uk:443" --token $MY_TOKEN --modelUrn "$1" --textInput "$2"

Step 6. Run the application using the script file, passing it the URN and a text to interpret:

./run-mix-client.sh modelUrn textInput

Where:

- modelUrn: Is the URN of the application configuration for the Coffee App created in the Quick Start

- textInput: Is the text to interpret

For example:

$ ./run-mix-client.sh "urn:nuance-mix:tag:model/TestMixClient/mix.dialog" "I want a double espresso"

An output similar to the following is provided:

2020-12-07 17:04:05,414 DEBUG: Creating secure gRPC channel

2020-12-07 17:04:05,420 DEBUG: Start Request: selector {

channel: "default"

language: "en-US"

library: "default"

}

payload {

model_ref {

uri: "urn:nuance-mix:tag:model/TestMixClient/mix.dialog"

}

}

2020-12-07 17:04:05,945 DEBUG: Start Request Response: {'payload': {'sessionId': '92705444-cd59-4a04-b79c-e67203f04f0d'}}

2020-12-07 17:04:05,948 DEBUG: Session: 92705444-cd59-4a04-b79c-e67203f04f0d

2020-12-07 17:04:05,949 DEBUG: Initial request, no input from the user to get initial prompt

2020-12-07 17:04:05,952 DEBUG: Execute Request: user_input {

}

2020-12-07 17:04:06,193 DEBUG: Execute Response: {'payload': {'messages':

[{'visual': [{'text': 'Hello and welcome to the coffee app.'}], 'view': {}}],

'qaAction': {'message': {'visual': [{'text': 'What can I get you today?'}]},

'data': {}, 'view': {}}}}

2020-12-07 17:04:06,198 DEBUG: Second request, passing in user input

2020-12-07 17:04:06,199 DEBUG: Execute Request: user_input {

user_text: "I want a double espresso"

}

2020-12-07 17:04:06,791 DEBUG: Execute Response: {'payload': {'messages':

[{'visual': [{'text': 'Perfect, a double espresso coming right up!'}], 'view':

{}}], 'endAction': {'data': {}, 'id': 'End dialog'}}}

Reference topics

This section provides more detailed information about objects used in the gRPC API.

Note: Examples in this section are shown in JSON format for readability. However, in an actual client application, content is sent and received as protobuf objects.

Status messages and codes

gRPC error codes

In addition to the standard gRPC error codes, DLGaaS uses the following codes:

| gRPC code | Message | Indicates |

|---|---|---|

| 0 | OK | Normal operation |

| 5 | NOT FOUND | The resource specified could not be found; for example:

Troubleshooting: Make sure that the resource provided exists or that you have specified it correctly. See URN for details on the URN syntax. |

| 9 | FAILED_PRECONDITION | ASRaaS and/or NLUaaS returned 400 range status codes |

| 11 | OUT_OF_RANGE | The provided session timeout is not in the expected range. Troubleshooting: Specify a value between 0 and 90000 seconds (default is 900 seconds) and try again. |

| 12 | UNIMPLEMENTED | The API version was not found or is not available on the URL specified. For example, a client using DLGaaS v1 is trying to access the dlgaas.beta.nuance.co.uk URL. Troubleshooting: See URLs to runtime services for the supported URLs. |

| 13 | INTERNAL | There was an issue on the server side or interactions between sub systems have failed. Troubleshooting: Contact Nuance. |

| 16 | UNAUTHENTICATED | The credentials specified are incorrect or expired. Troubleshooting: Make sure that you have generated the access token and that you are providing the credentials as described in Authorize your client application. Note that the token needs to be regenerated regularly. See Access token lifetime for details. |

HTTP return codes

In addition to the standard HTTP error codes, DLGaaS uses the following codes:

| HTTP code | Message | Indicates |

|---|---|---|

| 200 | OK | Normal operation |

| 400 | BAD_REQUEST | Server cannot process the request due to client error such as a malformed request |

| 401 | UNAUTHORIZED | The credentials specified are incorrect or expired. Troubleshooting: Make sure that you have generated the access token and that you are providing the credentials as described in Authorize your client application. Note that the token needs to be regenerated regularly. See Access token lifetime for details. |

| 404 | NOT_FOUND | The resource specified could not be found; for example:

Troubleshooting: Make sure that the resource provided exists or that you have specified it correctly. See URN for details on the URN syntax. |

| 500 | INTERNAL_SERVER_ERROR | There was an issue on the server side. Troubleshooting: Contact Nuance. |

Values in the 400 range indicate an error in the request that your client app sent. Values in the 500 range indicate an internal error within DLGaaS or another Mix service.

Examples

Incorrect URN

"grpc_message":"model [urn:nuance:mix/eng-USA/coffee_app_typo/mix.dialog] could not be found","grpc_status":5

Incorrect channel

"grpc_message":"channel is invalid, supported values are [Omni Channel VA, default] (error code: 5)","grpc_status":5}"

Session not found

"grpc_message":"Could not find session for [12345]","grpc_status":5}"

Incorrect credentials

"{"error":{"code":401,"status":"Unauthorized","reason":"Token is expired","message":"Access credentials are invalid"}\n","grpc_status":16}"

Message actions

Example message action as part of QA Action

{

"payload": {

"messages": [],

"qa_action": {

"message": {

"nlg": [{

"text": "What type of coffee would you like?"

}

],

"visual": [{

"text": "What <b>type</b> of coffee would you like? For the list of options, see the <a href=\"www.myserver.com/menu.html\">menu</a>."

}

],

"audio": [{

"text": "What type of coffee would you like? ",

"uri": "en-US/prompts/default/default/Message_ini_01.wav?version=1.0_1602096507331"

}

]

}

}

}

}

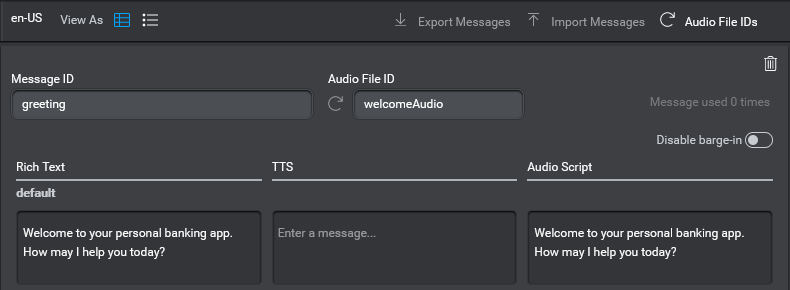

A message action indicates that a message should be played to the user. A message can be provided as:

- Text to be rendered using Text-to-speech: The

nlgfield provides backup text as a fallback for speech outputs sythesized using TTSaaS. For more information about how to generate TTSaaS speech audio, see Step 4b. Interact with the user (using audio). - Text to be visually displayed to the user: The

visualfield provides text that can be displayed, for example, in a chat or in a web application. This field supports rich text format, so you can include HTML markups, URLs, etc. - Audio file to play to the user: The

audiofield provides a link to a recorded audio file that can be played to the end user. Theurifield provides the link to the file, while thetextfield provides text that can be used as backup TTS if the audio file is missing or cannot be played.

Message actions can be configured in the following Mix.dialog nodes:

- Message node: In this case they are returned in the

messagesfield of the ExecuteRequestPayload. Messages specified in a message node are returned only when a question and answer, data access, or external actions node occurs in the dialog flow. See Message nodes for details. - Question and answer node: In this case they are returned in the message field of the ExecuteRequestPayload qa_action

- Data access node: A latency message can be defined to be played while the user is waiting for a data transfer to take place, whether client-side or server-side.

Message nodes

A message node is used to play or display a message. The message specified in a message node is sent to the client application as a message action. A message node also performs non-recognition actions, such as playing a message, assigning a variable, or defining the next node in the dialog flow.

Messages configured in a message node are cumulative and sent only when a question and answer node, a data access node, or an external actions node occurs in the dialog flow. For example, consider the following dialog flow:

This would be handled as follows:

- The Dialog service sends an ExecuteResponse when encountering the question and answer node, with the following messages:

# First ExecuteResponse { "payload": { "messages": [{ "nlg": [], "visual": [{ "text": "Hey there!" } ], "audio": [] }, { "nlg": [], "visual": [{ "text": "Welcome to the coffee app." } ], "audio": [] } ], "qa_action": { "message": { "nlg": [], "visual": [{ "text": "What can I do for you today?" } ], "audio": [] } } } }

- The client application sends an ExecuteRequest with the user input.

- The Dialog service sends an ExecuteResponse when encountering the end node, with the following message action:

# Second ExecuteResponse { "payload": { "messages": [{ "nlg": [], "visual": [{ "text": "Goodbye." } ], "audio": [] } ], "end_action": {} } }

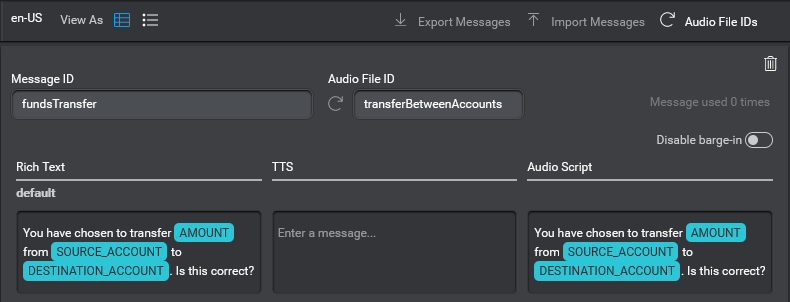

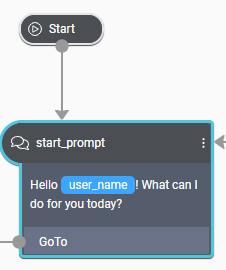

Using variables in messages

Messages can include variables. For example, in a coffee application, you might want to personalize the greeting message:

"Hello Miranda ! What can I do for you today?"

Variables are configured in Mix.dialog. They are resolved by the Dialog engine and then returned to the client application. For example:

{

"payload": {

"messages": [],

"qa_action": {

"message": {

"nlg": [],

"visual": [

{

"text": "Hello Miranda ! What can I do for you today?"

}

],

"audio": []

}

}

}

}

Question and answer actions

A question and answer action is returned by a question and answer node. A question and answer node is the basic node type in dialog applications. It first plays a message and then recognizes user input.

The message specified in a question and answer node is sent to the client application as a message action.

The client application must then return the user input to the question and answer node. This can be provided in four ways:

- As audio to be recognized and interpreted by Nuance. This is implemented in the client app through the StreamInput method. See Step 4b. Interact with the user (using audio) for details.

- As text to be interpreted by Nuance. In this case, the client application returns the input string to the dialog application. See Interpreting text user input for details.

- As interpretation results. This assumes that interpretation of the user input is performed by an external system. In this case, the client application is responsible for returning the results of the interpretation to the dialog application. See Interpreting text user input for details.

- As a selected option from an interactive element.

In a question and answer node, the dialog flow is stopped until the client application has returned the user input.

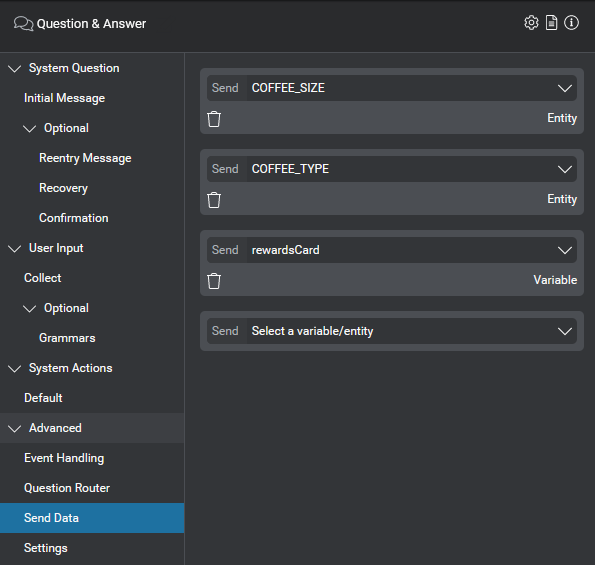

Sending data

A question and answer node can specify data to send to the client application. This data is configured in Mix.dialog, in the Send Data tab of the question and answer node. For the procedure, see Send data to the client application in the Mix.dialog documentation.

For example, in the coffee application, you might want to send entities that you have collected in a previous node (COFFEE_TYPE and COFFEE_SIZE) as well as data that you have retrieved from an external system (the user's rewards card number):

This data is sent to the client application in the data field of the qa_action; for example:

{

"payload": {

"messages": [],

"qa_action": {

"message": {

"nlg": [],

"visual": [

{

"text": "Your order was processed. Would you like anything else today?"

}

],

"audio": [],

"view": {

"id": "",

"name": ""

}

},

"data": {

"rewardsCard": "5367871902680912",

"COFFEE_TYPE": "espresso",

"COFFEE_SIZE": "lg"

}

}

}

}

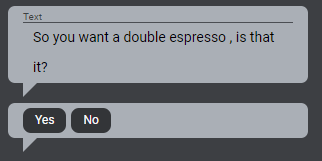

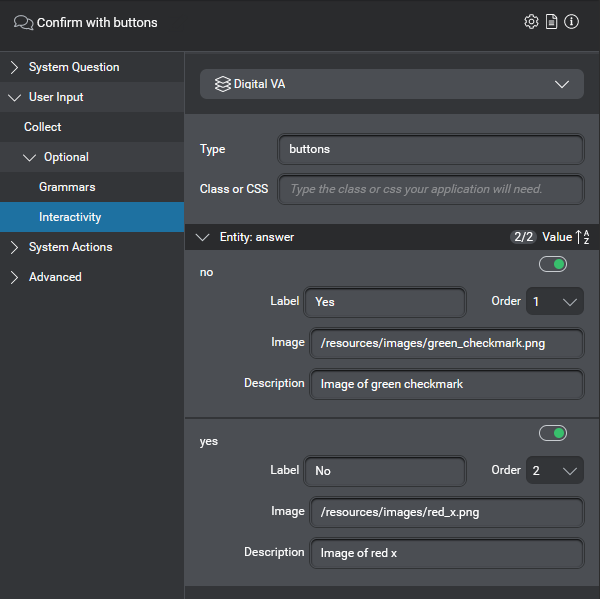

Interactive elements

Question and answer actions can include interactive elements to be displayed by the client app, such as clickable buttons or links.

For example, in a web version of the coffee application, you may want to display Yes/No buttons so that users can confirm their selection for an entity named answer which takes values of Yes or No:

Interactive elements are configured in Mix.dialog in question and answer nodes. For the procedure, see Define interactive elements in the Mix.dialog documentation.

For example, for the Yes/No buttons scenario above, you could configure two elements, one for each button, as follows:

This information is sent to the client app in the selectable field of the qa_action. For example:

{

"payload": {

"messages": [],

"qa_action": {

"message": {

"nlg": [],

"visual": [{

"text": "So you want a double espresso , is that it?"

}

],

"audio": []

},

"selectable": {

"selectable_items": [{

"value": {

"id": "answer",

"value": "yes"

},

"description": "Image of green checkmark",

"display_text": "Yes",

"display_image_uri": "/resources/images/green_checkmark.png"

}, {

"value": {

"id": "answer",

"value": "no"

},

"description": "Image of Red X",

"display_text": "No",

"display_image_uri": "/resources/images/red_x.png"

}

]

}

}

}

}

The application is then responsible for displaying the elements (in this case, the two buttons) and for returning the choice made by the user in the selected_item field of the Execute Request payload. For example:

"payload": {

"user_input": {

"selected_item": {

"id": "answer",

"value": "no"

}

}

}

In both cases the field "id" corresponds to the name of the entity as defined in Mix.dialog or Mix.nlu.

Data access actions

A data access action tells the client app that the dialog needs data from the client to continue the flow. For example, consider the following use cases:

- In a coffee application, after asking the user for the type and size of coffee to order, the dialog must provide the price of the order before completing the transaction. In this use case, the dialog sends a data access action to the client application, providing the type and size of coffee and requesting the price.