TTS as a Service gRPC API

Nuance TTS provides speech synthesis

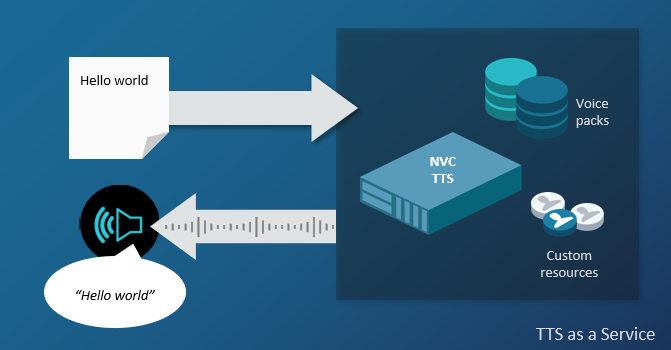

Nuance TTS (Text to Speech) as a Service is powered by the Nuance Vocalizer for Cloud (NVC) engine, which synthesizes speech from plain text, SSML, or Nuance control codes. NVC works with Nuance Vocalizer for Enterprise (NVE) and Nuance voice packs to generate speech.

TTS as a Service lets you request speech synthesis from NVC engines running on Nuance-hosted machines. It works with voices in many languages and locales, with choices of gender and age.

The gRPC synthesizer protocol provided by NVC can request synthesis services in any of the programming languages supported by gRPC. An HTTP API for synthesis is also available.

An additional gRPC storage protocol can upload synthesis resources to cloud storage.

gRPC is an open source RPC (remote procedure call) software used to create services. It uses HTTP/2 for transport, and protocol buffers to define the structure of the application. NVC supports Protocol Buffers version 3, also known as proto3.

Version: v1

This release supports version v1 of the synthesizer API and v1beta1 of the storage API.

Prerequisites from Mix

Before developing your TTS gRPC application, you need a Nuance Mix project. This project provides credentials to run your application against the Nuance-hosted NVC engine.

Create a Mix project and model: see Mix.nlu workflow to:

Create a Mix project.

Optionally build a model in the project. If you are using other Nuance "as a service" products (such as ASRaaS or NLUaaS), you may use the same Mix project for NVC. A model is not needed for your NVC application.

Create and deploy an application configuration for the project.

Generate a client ID and "secret" in your Mix project: see Authorize your client application. Later you will use these credentials to request an access token to run your application.

Learn the URL to call the TTS service: see Accessing a runtime service.

The URLs for NVC in the hosted Mix environment are:

- Runtime:

tts.api.nuance.co.uk:443 - Authorization:

https://auth.crt.nuance.co.uk/oauth2/token

gRPC setup

Install gRPC for programming language

$ python -m pip install --upgrade pip

$ python -m pip install grpcio

$ python -m pip install grpcio-tools

Download and unzip proto files

$ unzip nuance_tts_and_storage_protos.zip

Archive: nuance_tts_and_storage_protos.zip

inflating: nuance/rpc/error_details.proto

inflating: nuance/rpc/status.proto

inflating: nuance/rpc/status_code.proto

inflating: nuance/tts/storage/v1beta1/storage.proto

inflating: nuance/tts/v1/synthesizer.proto

Generate client stubs

# Generate Python stubs from TTS proto files

python -m grpc_tools.protoc --proto_path=./ --python_out=./ --grpc_python_out=./ nuance/tts/v1/synthesizer.proto

python -m grpc_tools.protoc --proto_path=./ --python_out=./ --grpc_python_out=./ nuance/tts/storage/v1beta1/storage.proto

# Generate Python stubs from RPC proto files

python -m grpc_tools.protoc --proto_path=./ --python_out=./ nuance/rpc/error_details.proto

python -m grpc_tools.protoc --proto_path=./ --python_out=./ nuance/rpc/status_code.proto

python -m grpc_tools.protoc --proto_path=./ --python_out=./ nuance/rpc/status.proto

Final structure of protos and stubs for TTS and storage

├── Your client apps here

└── nuance

├── rpc

│ ├── error_details_pb2.py

│ ├── error_details.proto

│ ├── status_code_pb2.py

│ ├── status_code.proto

│ ├── status_pb2.py

│ └── status.proto

└── tts

├── storage

│ └── v1beta1

│ ├── storage_pb2.py

│ ├── storage_pb2_grpc.py

│ └── storage.proto

└── v1

├── synthesizer_pb2.py

├── synthesizer_pb2_grpc.py

└── synthesizer.proto

The basic steps in using the NVC gRPC protocol are:

Install gRPC for the programming language of your choice, including C++, Java, Python, Go, Ruby, C#, Node.js, and others. See gRPC Documentation for a complete list and instructions on using gRPC with each one.

Download the NVC gRPC proto files, which contain a generic version of the functions or classes that perform speech synthesis and upload operations:

Synthesizer and storage gRPC protos: nuance_tts_and_storage_protos.zip

Unzip the file in a location that your applications can access, for example in the directory that contains or will contain your client apps.

If your programming language requires client stub files, generate the stubs from the proto files using gRPC protoc, following the Python example as guidance. The resulting files contain the information in the proto files in your programming language.

Once you have the proto files and optionally the client stubs, you are ready to start writing client applications with the help of the API and several sample applications. See:

| Topic | Description |

|---|---|

| Synthesizer API | The gRPC protocol for synthesis. |

| Client app development | A walk-through of the major components of a synthesis client using a simple client. |

| Sample synthesis client | A full-fledged synthesis client, written in Python. |

| Storage API | The gRPC protocol for uploading resources to cloud storage. |

| Sample storage client | A client application for uploading synthesis resources to cloud storage, written in Python. |

Client app development

The synthesizer gRPC protocol for NVC lets you create client applications for synthesizing text and obtaining information about available voices.

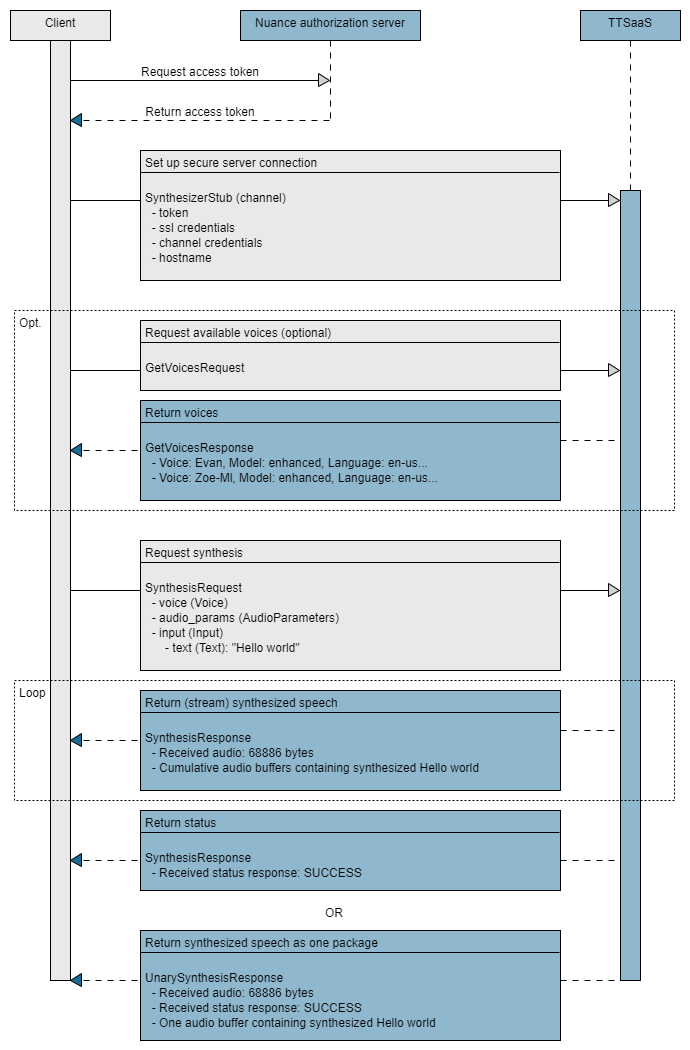

Sequence flow

The essential tasks are illustrated in the following high-level sequence flow of an application at run time.

Development steps

Try it out: Copy client files into place (some proto files are omitted for clarity)

├── simple-mix-client.py

├── run-simple-mix-client.sh

└── nuance

├── rpc (RPC message files)

└── tts

├── storage (Storage files)

└── v1

├── synthesizer_pb2_grpc.py

├── synthesizer_pb2.py

└── synthesizer.proto

run-simple-mix-client.sh: Shell script to authorize and run simple client

#!/bin/bash

CLIENT_ID=<Mix client ID, colons replaced with %3A>

SECRET=<Mix client secret>

export MY_TOKEN="`curl -s -u "$CLIENT_ID:$SECRET" \

https://auth.crt.nuance.co.uk/oauth2/token \

-d "grant_type=client_credentials" -d "scope=tts" \

| python -c 'import sys, json; print(json.load(sys.stdin)["access_token"])'`"

./simple-mix-client.py --server_url tts.api.nuance.co.uk:443 \

--token $MY_TOKEN \

--name "Zoe-Ml" \

--model "enhanced" \

--text "The wind was a torrent of darkness, among the gusty trees." \

--output_wav_file "highwayman.wav"

simple-mix-client.py: Simple client: adjust the first line for your environment

#!/usr/bin/env python3

# Import functions

import sys

import grpc

import argparse

from nuance.tts.v1.synthesizer_pb2 import *

from nuance.tts.v1.synthesizer_pb2_grpc import *

from google.protobuf import text_format

# Generate a .wav file header

def generate_wav_header(sample_rate, bits_per_sample, channels, audio_len, audio_format):

# (4byte) Marks file as RIFF

o = bytes("RIFF", 'ascii')

# (4byte) File size in bytes excluding this and RIFF marker

o += (audio_len + 36).to_bytes(4, 'little')

# (4byte) File type

o += bytes("WAVE", 'ascii')

# (4byte) Format Chunk Marker

o += bytes("fmt ", 'ascii')

# (4byte) Length of above format data

o += (16).to_bytes(4, 'little')

# (2byte) Format type (1 - PCM)

o += (audio_format).to_bytes(2, 'little')

# (2byte) Will always be 1 for TTS

o += (channels).to_bytes(2, 'little')

# (4byte)

o += (sample_rate).to_bytes(4, 'little')

o += (sample_rate * channels * bits_per_sample // 8).to_bytes(4, 'little') # (4byte)

o += (channels * bits_per_sample // 8).to_bytes(2,'little') # (2byte)

# (2byte)

o += (bits_per_sample).to_bytes(2, 'little')

# (4byte) Data Chunk Marker

o += bytes("data", 'ascii')

# (4byte) Data size in bytes

o += (audio_len).to_bytes(4, 'little')

return o

# Define synthesis request

def create_synthesis_request(name, model, text, ssml, sample_rate, send_log_events, client_data):

request = SynthesisRequest()

request.voice.name = name

request.voice.model = model

pcm = PCM(sample_rate_hz=sample_rate)

request.audio_params.audio_format.pcm.CopyFrom(pcm)

if text:

request.input.text.text = text

elif ssml:

request.input.ssml.text = ssml

else:

raise RuntimeError("No input text or SSML defined.")

request.event_params.send_log_events = send_log_events

return request

def main():

parser = argparse.ArgumentParser(

prog="simple-mix-client.py",

usage="%(prog)s [-options]",

add_help=False,

formatter_class=lambda prog: argparse.HelpFormatter(

prog, max_help_position=45, width=100)

)

# Set arguments

options = parser.add_argument_group("options")

options.add_argument("-h", "--help", action="help",

help="Show this help message and exit")

options.add_argument("--server_url", nargs="?",

help="Server hostname (default=localhost)", default="localhost:8080")

options.add_argument("--token", nargs="?",

help="Access token", required=True)

options.add_argument("--name", nargs="?", help="Voice name", required=True)

options.add_argument("--model", nargs="?",

help="Voice model", required=True)

options.add_argument("--sample_rate", nargs="?",

help="Audio sample rate (default=22050)", type=int, default=22050)

options.add_argument("--text", nargs="?", help="Input text")

options.add_argument("--ssml", nargs="?", help="Input SSML")

options.add_argument("--send_log_events",

action="store_true", help="Subscribe to Log Events")

options.add_argument("--output_wav_file", nargs="?",

help="Destination file path for synthesized audio")

options.add_argument("--client_data", nargs="?",

help="Client information in key value pairs")

args = parser.parse_args()

# Create channel and stub

call_credentials = grpc.access_token_call_credentials(args.token)

channel_credentials = grpc.composite_channel_credentials(

grpc.ssl_channel_credentials(), call_credentials)

# Send request and process results

with grpc.secure_channel(args.server_url, credentials=channel_credentials) as channel:

stub = SynthesizerStub(channel)

request = create_synthesis_request(name=args.name, model=args.model, text=args.text,

ssml=args.ssml, sample_rate=args.sample_rate, send_log_events=args.send_log_events,

client_data=args.client_data)

stream_in = stub.Synthesize(request)

audio_file = None

wav_header = None

total_audio_len = 0

try:

if args.output_wav_file:

audio_file = open(args.output_wav_file, "wb")

# Write an empty wav header for now, until we know the final audio length

wav_header = generate_wav_header(sample_rate=args.sample_rate, bits_per_sample=16, channels=1, audio_len=0, audio_format=1)

audio_file.write(wav_header)

for response in stream_in:

if response.HasField("audio"):

print("Received audio: %d bytes" % len(response.audio))

total_audio_len = total_audio_len + len(response.audio)

if(audio_file):

audio_file.write(response.audio)

elif response.HasField("events"):

print("Received events")

print(text_format.MessageToString(response.events))

else:

if response.status.code == 200:

print("Received status response: SUCCESS")

else:

print("Received status response: FAILED")

print("Code: {}, Message: {}".format(response.status.code, response.status.message))

print('Error: {}'.format(response.status.details))

except Exception as e:

print(e)

if audio_file:

wav_header = generate_wav_header(sample_rate=args.sample_rate, bits_per_sample=16, channels=1, audio_len=total_audio_len, audio_format=1)

audio_file.seek(0, 0)

audio_file.write(wav_header)

audio_file.close()

print("Saved audio to {}".format(args.output_wav_file))

if __name__ == '__main__':

main()

This section describes how to implement basic speech synthesis in the context of a simple Python client application, shown at the right.

This client synthesizes plain text or SSML input, streaming the audio back to the client and optionally creating an audio file containing the synthesized speech.

Try it out

You can try out this simple client application to synthesize text and save it in an audio file. To run it, you need:

- Python 3.6 or later.

- The generated Python stub files from gRPC setup.

- Your client ID and secret from Prerequisites from Mix.

- run-simple-mix-client.sh: Copy the shell script at the right into the directory above your proto files and stubs.

Give it execute permissionchmod +x run-simple-mix-client.sh

Edit the shell script to add your client ID and secret (see Authorize next). - simple-mix-client.py: Copy the Python file at the right into the same directory.

Run the client using the shell script. All the arguments are in the shell script, including the text to synthesize and the output file.

$ ./run-simple-mix-client.sh

Received audio: 24926 bytes

Received audio: 11942 bytes

Received audio: 10580 bytes

Received audio: 9198 bytes

Received audio: 6316 bytes

Received audio: 8908 bytes

Received audio: 27008 bytes

Received audio: 59466 bytes

Received status response: SUCCESS

Saved audio to highwayman.wav

The synthesized speech is in the audio file, highwayman.wav, which you can play in an audio player.

Optionally synthesize your own text: edit the shell script to change the text and output_wav_file arguments, then rerun the client.

Read on to learn more about how this simple client is constructed.

Authorize

Nuance Mix uses the OAuth 2.0 protocol for authorization. The client application must provide an access token to be able to access the NVC runtime service. The token expires after a short period of time so must be regenerated frequently.

Your client application uses the client ID and secret from the Mix Dashboard (see Prerequisites from Mix), along with the OAuth scope for NVC, to generate an access token from the Nuance authorization server.

The client ID starts with appID: followed by a unique identifier. If you are using the curl command, replace the colon with %3A so the value can be parsed correctly:

appID:NMDPTRIAL_your_name_company_com_2020...

-->

appID%3ANMDPTRIAL_your_name_company_com_2020...

The OAuth scope for the NVC service is tts.

The token may be generated in several ways, either as part of the client application or as a script file. This Python example uses a Linux script to generate a token and store it in an environment variable. The token is then passed to the application, where it is used to create a secure connection to the TTS service.

Import functions

The first step is to import all functions from the NVC client stubs, synthesizer*.py, generated from the proto files in gRPC setup, along with other utilities. The client stubs (and the proto files) are in the following path under the location of the simple client:

nuance/tts/v1/synthesizer_pb2.py, synthesizer_pb2_grpc.py

Do not edit these synthesizer*.* files.

Set arguments

The client includes arguments that that it can accept, allowing users to customize its operation. For example:

--server_url: The Mix endpoint and port number for the NVC service.--token: An access token.--nameand--model: The name and model of a voice to perform the synthesis. To learn which voices are available, see Sample synthesis client.--textorssml: The material to be synthesized, in this case either plain text or SSML.--output_wav_file: Optionally, a filename for saving the synthesized audio as a wave file.

To see the arguments, run the app with the --help option:

$ ./simple-mix-client.py --help

usage: simple-mix-client.py [-options]

options:

-h, --help Show this help message and exit

--server_url [SERVER_URL] Server hostname (default=localhost)

--token [TOKEN] Access token

--name [NAME] Voice name

--model [MODEL] Voice model

--sample_rate [SAMPLE_RATE] Audio sample rate (default=22050)

--text [TEXT] Input text

--ssml [SSML] Input SSML

--send_log_events Subscribe to Log Events

--output_wav_file [OUTPUT_WAV_FILE] Destination file path for synthesized audio

--client_data [CLIENT_DATA] Client information in key value pairs

Define synthesis request

The client creates a synthesis request using SynthesisRequest, including the arguments received from the end user. In this example, the request looks for a voice name and model plus the input to synthesize, either plain text or SSML.

The input is provided in the script file that runs the client, for example:

Plain text input and an audio file to hold the results:

--text "The wind was a torrent of darkness, among the gusty trees." \ --output_wav_file "highwayman.wav"

SSML input, with optional SSML elements, and an audio file:

--ssml '<speak>This is the normal volume of my voice. \ <prosody volume="10">I can speak rather quietly,</prosody> \ <prosody volume="90">But also very loudly.</prosody></speak>' \ --output_wav_file "ssml-loud.wav"

Create channel and stub

To call NVC, the client creates a secure gRPC channel and authorizes itself by providing the URL of the hosted service and an access token.

In many situations, users can pass the service URL and token to the client as arguments. In this Python app, the URL is in the --server_url argument and the token is in --token.

A client stub function or class is defined using this channel information.

In some languages, this stub is defined in the generated client files: in Python it is named SynthesizerStub and in Go it is SynthesizerClient. In other languages, such as Java, you must create your own stub.

Send request and process results

Finally, the client calls the stub to send the synthesis request, then processes the response (a stream of responses) using the fields in SynthesisResponse.

The response returns the synthesized audio to the client, streaming it and optionally saving it in an audio file. In this client, the audio is saved to a file named in the --output_wav_file argument.

More features

Features not shown in this simple application are described in the sample synthesis client and other sections:

Get voices: To learn which voices are available, see Run client for voices.

Control codes: To provide input in the form of a tokenized sequence of text and Nuance control codes, see Input to synthesize and Control codes.

More SSML: For more information about SSML input and tags, see SSML tags.

Upload resources. See Reference topics - Synthesis resources and Sample storage client.

User dictionary: To provide a user dictionary or other resources, see Run client with resources.

Unary: If you prefer a non-streamed response, see Run client for unary response.

Multi requests: If you have multiple requests, direct them all to the same channel and stub. See Multiple requests.

Sample synthesis client

Download and extract the sample synthesis client

$ unzip sample-synthesis-client.zip

Archive: sample-synthesis-client.zip

inflating: mix-client.py

inflating: flow.py

inflating: run-mix-client.sh

$ chmod +x mix-client.py

$ chmod +x run-mix-client.sh

Location of application files, above the directory holding the Python stubs

├── flow.py

├── mix-client.py

├── run-mix-client.sh

└── nuance

├── rpc (RPC message files)

└── tts

├── storage (Storage files)

└── v1

├── synthesizer_pb2_grpc.py

├── synthesizer_pb2.py

└── synthesizer.proto

This section contains a fully-functional Python client that you may download and use to synthesize speech using the Synthesizer API. To run this client, you need:

- Python 3.6 or later.

- The generated Python stub files from gRPC setup.

- Your client ID and secret from Prerequisites from Mix.

- The OAuth scope for the NVC service:

tts. - A zip file containing the client files: sample-synthesis-client.zip. Download this zip file and extract its files into the same directory as the nuance directory, which contains your proto files and Python stubs.

- Give mix-client.py and run-mix-client.sh execute permission with

chmod +x

You can use the application to check for available voices and/or request synthesis. Here are a few scenarios you can try.

Run client for help

For a quick check that the client is working, and to see the arguments it accepts, run it using the help (-h or --help) option.

$ ./mix-client.py -h

The token option is required. Other defaults mean you do not need to specify an input file or a server URL as you run the client.

| Option | Description |

|---|---|

| -h, --help | Show help message. |

| -f, --file file(s) | List of flow files to execute sequentially. Default is flow.py. For multiple flow files, enter: --files flow.py flow2.py |

| -p, --parallel | Run each flow in a separate thread. |

| -i, --iterations num | Number of times to run the list of files. Default is 1. |

| -s, --serverUrl url | Mix TTS server URL, default is tts.api.nuance.co.uk |

| --token token | Mandatory. Access token. See step 1 in Run client for voices next. |

| --saveAudio | Save whole audio to disk. |

| --saveAudioChunks | Save each individual audio chunk to disk. |

| --saveAudioAsWav | Save each audio file in WAV format. |

| --sendUnary | Receive one response (UnarySynthesis) instead of a stream of responses (Synthesize). |

| ‑‑maxReceiveSizeMB mb | Maximum length of gRPC server response in megabytes. Default is 50 MB. |

Run client for voices

Results from get-voices request

$ ./run-mix-client.sh

2020-09-09 13:46:27,629 (140276734273344) INFO Iteration #1

2020-09-09 13:46:27,638 (140276734273344) DEBUG Creating secure gRPC channel

2020-09-09 13:46:27,640 (140276734273344) INFO Running file [flow.py]

2020-09-09 13:46:27,640 (140276734273344) DEBUG [voice {

language: "en-us"

}

]

2020-09-09 13:46:27,640 (140276734273344) INFO Sending GetVoices request

2020-09-09 13:46:27,976 (140276734273344) INFO voices {

name: "Ava-Mls"

model: "enhanced"

language: "en-us"

gender: FEMALE

sample_rate_hz: 22050

language_tlw: "enu"

version: "2.0.1"

}

...

voices {

name: "Evan"

model: "enhanced"

language: "en-us"

gender: MALE

sample_rate_hz: 22050

language_tlw: "enu"

version: "1.1.1"

}

voices {

name: "Nathan"

model: "enhanced"

language: "en-us"

gender: MALE

sample_rate_hz: 22050

language_tlw: "enu"

version: "3.0.1"

}

...

voices {

name: "Zoe-Ml"

model: "enhanced"

language: "en-us"

gender: FEMALE

sample_rate_hz: 22050

language_tlw: "enu"

version: "1.0.2"

}

2020-09-09 13:46:27,977 (140276734273344) INFO Done running file [flow.py]

2020-09-09 13:46:27,977 (140276734273344) INFO Iteration #1 complete

2020-09-09 13:46:27,978 (140276734273344) INFO Done

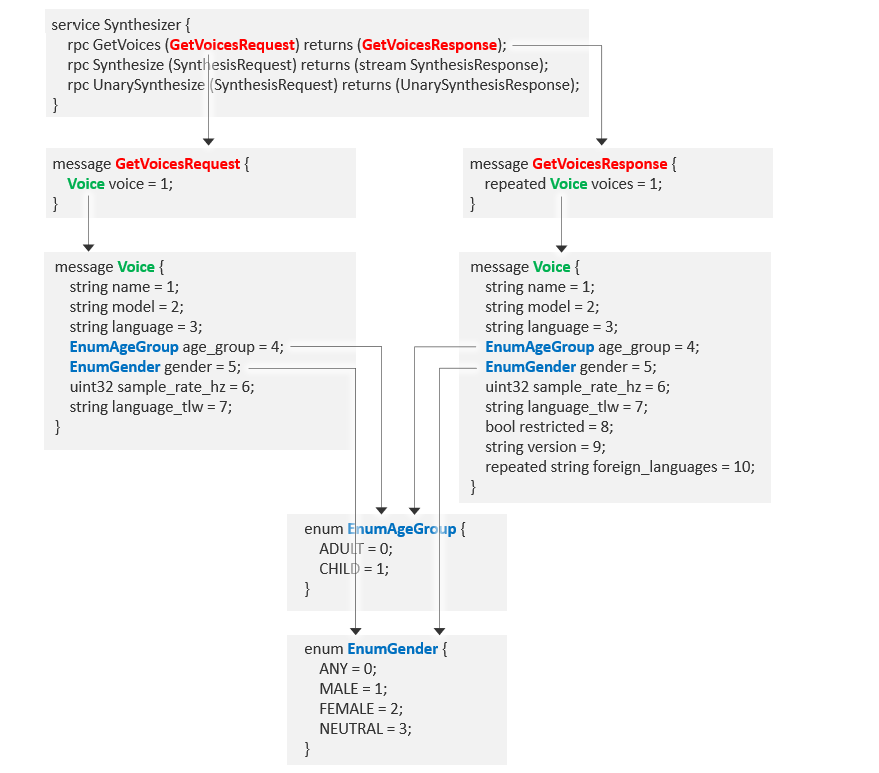

When you ask NVC to synthesize text, you must specify a named voice. To learn which voices are available, send a GetVoicesRequest, entering your requirements in the flow.py input file.

- Edit the run script, run-mix-client.sh, to add your CLIENT_ID and SECRET and generate an access token. These are your Mix credentials as described in Authorize. The OAuth scope,

tts, is included in the script, along with scopes for other Mix services.#!/bin/bash CLIENT_ID=<Mix client ID, replace colons with %3A> SECRET=<Mix client secret> export MY_TOKEN="`curl -s -u "$CLIENT_ID:$SECRET" \ "https://auth.crt.nuance.co.uk/oauth2/token" \ -d "grant_type=client_credentials" -d "scope=asr nlu tts" \ | python -c 'import sys, json; print(json.load(sys.stdin)["access_token"])'`" ./mix-client.py --token $MY_TOKEN --saveAudio --saveAudioAsWav

- Edit the input file, flow.py, to request all American English voices, and turn off synthesis.

from nuance.tts.v1.synthesizer_pb2 import * list_of_requests = [] # GetVoices request request = GetVoicesRequest() #request.voice.name = "Evan" request.voice.language = "en-us" # Request all en-us voices # Add request to list list_of_requests.append(request) # Enable voice request # Synthesis request ... #Add request to list #list_of_requests.append(request) # Disable synthesis with #

- Run the client using the script file.

$ ./run-mix-client.sh

See the results at the right.

Get more voices

You can experiment with this request: for example, to see all available voices, remove or comment out all the request.voice lines, leaving only the main GetVoicesRequest.

# GetVoices request request = GetVoicesRequest() # Keep only this line #request.voice.name = "Evan" #request.voice.language = "en-us"

The results include all voices available from the Nuance-hosted NVC service.

Run client for synthesis

Results from synthesis request (some events are omitted)

$ ./run-mix-client.sh

2020-09-09 13:58:52,142 (140022203164480) INFO Iteration #1

2020-09-09 13:58:52,151 (140022203164480) DEBUG Creating secure gRPC channel

2020-09-09 13:58:52,153 (140022203164480) INFO Running file [flow.py]

2020-09-09 13:58:52,153 (140022203164480) DEBUG [voice {

name: "Evan"

}

, voice {

name: "Evan"

model: "enhanced"

}

audio_params {

audio_format {

pcm {

sample_rate_hz: 22050

}

}

volume_percentage: 80

speaking_rate_factor: 1.0

audio_chunk_duration_ms: 2000

}

input {

text {

text: "This is a test. A very simple test."

}

}

event_params {

send_log_events: true

}

user_id: "MyApplicationUser"

]

2020-09-09 13:58:52,154 (140022203164480) INFO Sending GetVoices request

2020-09-09 13:58:52,303 (140022203164480) INFO voices {

name: "Evan"

model: "enhanced"

language: "en-us"

gender: MALE

sample_rate_hz: 22050

language_tlw: "enu"

version: "1.1.1"

}

2020-09-09 13:58:52,303 (140022203164480) INFO Sending Synthesis request

. . .

2020-09-09 13:58:52,663 (140022203164480) INFO Received status response: SUCCESS

2020-09-09 13:58:52,664 (140022203164480) INFO Wrote audio to flow.py_i1_s1.wav

2020-09-09 13:58:52,664 (140022203164480) INFO Done running file [flow.py]

2020-09-09 13:58:52,665 (140022203164480) INFO Iteration #1 complete

2020-09-09 13:58:52,665 (140022203164480) INFO Done

Once you know the voice you want to use, you can ask NVC to synthesize a simple test string and save the resulting audio in a wave file using a SynthesisRequest. Again enter your requirements in flow.py.

- Look at run-mix-client.sh and notice the –saveAudio and –saveAudioAsWav arguments. There is no need to include the ‑‑file argument since flow.py is the default input filename.

. . . ./mix-client.py --token $MY_TOKEN --saveAudio --saveAudioAsWav

Edit flow.py to verify that your voice is available, then request synthesis using that voice.

from nuance.tts.v1.synthesizer_pb2 import * list_of_requests = [] # GetVoices request request = GetVoicesRequest() request.voice.name = "Evan" # Request a specific voice # Add request to list list_of_requests.append(request) # Synthesis request request = SynthesisRequest() request.voice.name = "Evan" # Request synthesis using that voice request.voice.model = "enhanced" pcm = PCM(sample_rate_hz=22050) request.audio_params.audio_format.pcm.CopyFrom(pcm) request.audio_params.volume_percentage = 80 request.audio_params.speaking_rate_factor = 1.0 request.audio_params.audio_chunk_duration_ms = 2000 request.input.text.text = "This is a test. A very simple test." request.event_params.send_log_events = True request.user_id = "MyApplicationUser" #Add request to list list_of_requests.append(request) # Enable synthesis request

Run the client using the script file.

$ ./run-mix-client.sh

See the results at the right and notice the audio file created:

- flow.py_i1_s1.wav: Evan saying: "This is a test. A very simple test."

Multiple requests

Results from multiple synthesis requests

$ ./run-mix-client.sh

2020-09-27 14:26:27,209 (140665436571456) INFO Iteration #1

2020-09-27 14:26:27,219 (140665436571456) DEBUG Creating secure gRPC channel

2020-09-27 14:26:27,221 (140665436571456) INFO Running file [flow.py]

2020-09-27 14:26:27,221 (140665436571456) DEBUG [voice {

name: "Evan"

model: "enhanced"

}

audio_params {

audio_format {

pcm {

sample_rate_hz: 22050

}

}

}

input {

text {

text: "This is a test. A very simple test."

}

}

, 2, voice {

name: "Evan"

model: "enhanced"

}

audio_params {

audio_format {

pcm {

sample_rate_hz: 22050

}

}

}

input {

text {

text: "Your coffee will be ready in 5 minutes."

}

}

, 2, voice {

name: "Zoe-Ml"

model: "enhanced"

}

audio_params {

audio_format {

pcm {

sample_rate_hz: 22050

}

}

}

input {

text {

text: "The wind was a torrent of darkness, among the gusty trees."

}

}

]

2020-09-27 14:26:27,221 (140665436571456) INFO Sending Synthesis request

2020-09-27 14:26:27,673 (140665436571456) INFO Wrote audio to flow.py_i1_s1.wav

2020-09-27 14:26:27,673 (140665436571456) INFO Waiting for 2 seconds

2020-09-27 14:26:29,675 (140665436571456) INFO Sending Synthesis request

2020-09-27 14:26:29,883 (140665436571456) INFO Wrote audio to flow.py_i1_s2.wav

2020-09-27 14:26:29,883 (140665436571456) INFO Waiting for 2 seconds

2020-09-27 14:26:31,885 (140665436571456) INFO Sending Synthesis request

2020-09-27 14:26:32,102 (140665436571456) INFO Wrote audio to flow.py_i1_s3.wav

2020-09-27 14:26:32,102 (140665436571456) INFO Done running file [flow.py]

2020-09-27 14:26:32,102 (140665436571456) INFO Iteration #1 complete

2020-09-27 14:26:32,102 (140665436571456) INFO Done

You can send multiple requests for synthesis (and/or get voices) in the same session. For efficient communication with the NVC server, all requests use the same channel and stub. This scenario sends three synthesis requests.

Edit flow.py to add two more synthesis requests. (You may keep the get-voices request or remove it.) Optionally pause for a couple of seconds after each synthesis request.

from nuance.tts.v1.synthesizer_pb2 import * list_of_requests = [] # Synthesis request request = SynthesisRequest() # First request request.voice.name = "Evan" request.voice.model = "enhanced" pcm = PCM(sample_rate_hz=22050) request.audio_params.audio_format.pcm.CopyFrom(pcm) request.input.text.text = "This is a test. A very simple test." list_of_requests.append(request) list_of_requests.append(2) # Optionally pause after request # Synthesis request request = SynthesisRequest() # Second request request.voice.name = "Evan" request.voice.model = "enhanced" pcm = PCM(sample_rate_hz=22050) request.audio_params.audio_format.pcm.CopyFrom(pcm) request.input.text.text = "Your coffee will be ready in 5 minutes." list_of_requests.append(request) list_of_requests.append(2) # Optionally pause after request # Synthesis request request = SynthesisRequest() # Third request request.voice.name = "Zoe-Ml" request.voice.model = "enhanced" pcm = PCM(sample_rate_hz=22050) request.audio_params.audio_format.pcm.CopyFrom(pcm) request.input.text.text = "The wind was a torrent of darkness, among the gusty trees." list_of_requests.append(request)

Run the client using the script file.

$ ./run-mix-client.sh

See the results at the right and notice the three audio files created:

- flow.py_i1_s1.wav: Evan saying: "This is a test..."

- flow.py_i1_s2.wav: Evan saying: "Your coffee will be ready..."

- flow.py_i1_s3.wav: Zoe saying: "The wind was a torrent of darkness..."

Run client with resources

Results from synthesis request

$ ./run-mix-client.sh

2021-05-23 15:56:19,442 (140367419443008) INFO Iteration #1

2021-05-23 15:56:19,454 (140367419443008) DEBUG Creating secure gRPC channel

2021-05-23 15:56:19,458 (140367419443008) INFO Running file [flow.py]

2021-05-23 15:56:19,458 (140367419443008) DEBUG [voice {...}

audio_params {...}

input {

text {

text: "This is a test. A very simple test."

}

resources {

uri: "urn:nuance-mix:tag:tuning:lang/coffee_app/coffee_dict/en-us/mix.tts"

}

}

]

2021-05-23 15:56:19,458 (140367419443008) INFO Sending Synthesis request

2021-05-23 15:56:20,015 (140367419443008) INFO Wrote audio to flow.py_i1_s1.wav

2021-05-23 15:56:20,015 (140367419443008) INFO Done running file [flow.py]

2021-05-23 15:56:20,016 (140367419443008) INFO Done

If you have uploaded synthesis resources using the Storage API (see the Sample storage client), you can reference them in a synthesis request. Enter the resources in flow.py.

- Use run-mix-client.sh with –saveAudio and –saveAudioAsWav arguments.

. . . ./mix-client.py --token $MY_TOKEN --saveAudio --saveAudioAsWav

- Edit flow.py to specify a resource within the synthesis request, for example a user dictionary uploaded with the storage API.

from nuance.tts.v1.synthesizer_pb2 import * . . . # Synthesis request request = SynthesisRequest() request.voice.name = "Evan" request.voice.model = "enhanced" pcm = PCM(sample_rate_hz=22050) request.audio_params.audio_format.pcm.CopyFrom(pcm) user_dict = SynthesisResource() # Add a user dictionary user_dict.type = EnumResourceType.USER_DICTIONARY user_dict.uri = "urn:nuance-mix:tag:tuning:lang/coffee_app/coffee_dict/en-us/mix.tts" request.input.resources.extend([user_dict]) request.input.text.text = "This is a test. A very simple test." #Add request to list list_of_requests.append(request)

- Run the client using the script file.

$ ./run-mix-client.sh

See the results at the right and notice the user dictionary listed under resources.

Other input: SSML and control codes

The input in these examples is plain text ("This is a test," etc.) but you can also provide input in the form of SSML and control codes.

See Reference topics - Input to synthesize for details and examples you can use in this sample application.

What's list_of_requests?

The application expects all input files to declare a global array named list_of_requests. It sequentially processes the requests contained in that array.

You may optionally instruct the application to wait a number of seconds between requests, by appending a number value to list_of_requests. For example:

list_of_requests.append(request1) list_of_requests.append(1.5) list_of_requests.append(request2)

Once request1 is complete, the application pauses for 1.5 seconds before executing request2.

Run client for unary response

Unary response gives one response for each request

...

2021-09-09 14:28:00,425 (140444352841536) INFO Sending Unary Synthesis request

2021-09-09 14:28:00,425 (140444352841536) INFO Received audio: 127916 bytes

2021-09-09 14:28:00,425 (140444352841536) INFO First chunk latency: 0.1435282602906227 seconds

2021-09-09 14:28:00,425 (140444352841536) INFO Average first-chunk latency (over 1 synthesis requests): 0.1435282602906227 seconds

2021-09-09 14:28:00,426 (140444352841536) INFO Received events

2021-09-09 14:28:00,428 (140444352841536) INFO events {

. . .

2021-09-09 14:28:00,428 (140444352841536) INFO Received status response: SUCCESS

2021-09-09 14:28:00,429 (140444352841536) INFO Wrote audio to flow.py_i1_s1.wav

2021-09-09 14:28:00,429 (140444352841536) INFO Done running file [flow.py]

2021-09-09 14:28:00,431 (140444352841536) INFO Iteration #1 complete

2021-09-09 14:28:00,431 (140444352841536) INFO Average first-chunk latency (over 1 synthesis requests): 0.1435282602906227 seconds

2021-09-09 14:28:00,431 (140444352841536) INFO Done

By default, the synthesized voice is streamed back to the client, but you may request a unary (non-streamed, single package) response. Using the sample client, include the ‑‑sendUnary argument as you run mix-client.py in run-mix-client.sh, for example:

. . . ./mix-client.py --token $MY_TOKEN --saveAudio --saveAudioAsWav --sendUnary

This example uses the same input flow.py file as Run client for synthesis. In this unary response, the request returns a single non-streamed audio package. See the results at the right.

If you have multiple requests, each request returns a single audio package.

See also Streamed vs. unary response.

Sample storage client

Download and extract the sample storage client

$ unzip sample-storage-client.zip

Archive: sample-storage-client.zip

inflating: run-storage-client.sh

inflating: storage-client.py

$ chmod +x storage-client.py

$ chmod +x run-storage-client.sh

Location of client files, above the directory holding the Python stubs

├── storage-client.py

├── run-storage-client.sh

└── nuance

├── rpc (RPC message files)

└── tts

├── storage

│ └── v1beta1

│ ├── storage_pb2_grpc.py

│ ├── storage_pb2.py

│ └── storage.proto

└── v1 (Synthesizer files)

This section contains a Python client for uploading and deleting synthesis resources using the Storage API. To run this client, you need:

- Python 3.6 or later.

- The generated Python stubs from gRPC setup.

- Your client ID and secret from Prerequisites from Mix.

The OAuth scope for the NVC service:

tts.A zip file containing the client files: sample-storage-client.zip. Download this zip file and extract its files into the same directory as the nuance directory, which contains your proto files and Python stubs.

Give storage-client.py and run-storage-client.sh execute permission with

chmod +x

You can use the application to upload and delete synthesis resources to storage.

Run storage client for help

To check that the client is working, and to see the arguments it accepts, run it using the help (-h or --help) option.

$ ./storage-client.py --help

Some options, shown in bold below, are required in all requests. Others are needed depending on the type.

| Option | Description |

|---|---|

| -h, --help | Show help message. |

| --server_url url | Hostname of NVC server, default localhost. Use tts.api.nuance.co.uk |

| --token token | Access token generated by Nuance Oauth service: https://auth.crt.nuance.co.uk/oauth2/token. See General options next. |

| ‑‑max_chunk_size_bytes num | Maximum size, in bytes, of each file chunk. Default is 4096 (4 MB). |

| --upload | Send an upload RPC. Requires --context_tag, --name, and resource-specific options. One of --upload or --delete is mandatory. |

| --delete | Send a delete RPC. Requires the --uri option. |

| --file file | File to upload. For ActivePrompt database, must be a zip file. |

| --context_tag tag | A group name, either existing or new. If it doesn't exist, it will be created. |

| --name name | A name for the resource within the context. |

| --type type | The resource type, one of: activeprompt, user_dictionary, text_ruleset, or wav. |

| --language code | IETF language code. Required when type is user_dictionary or text_ruleset. |

| --voice voice | A Nuance voice. Required when type is activeprompt. |

| --voice_model model | The voice model. Required when type is activeprompt. |

| --voice_version version | The version of the voice. Required when type is activeprompt. |

| ‑‑vocalizer_studio_version version | The Nuance Vocalizer Studio version. Required when type is activeprompt. |

| --uri urn | For the delete operation, the URN of the object to delete. |

General options

First edit the shell script, run-storage-client.sh, to add your credentials to generate an access token, and check the general access options.

#!/bin/bash CLIENT_ID=<Mix client ID, replace colons with %3A> SECRET=<Mix client secret> export MY_TOKEN="`curl -s -u "$CLIENT_ID:$SECRET" \ "https://auth.crt.nuance.co.uk/oauth2/token" \ -d "grant_type=client_credentials" -d "scope=asr nlu tts" \ | python -c 'import sys, json; print(json.load(sys.stdin)["access_token"])'`" ./storage-client.py server_url tts.api.nuance.co.uk --token $MY_TOKEN --upload --type user_dictionary --file coffee-dictionary.dcb \ --context_tag coffee_app --name coffee_dict \ --language en-us

Add or verify these values in the shell script:

CLIENT_ID: Your client ID from Mix, starting withappID%3ASECRET: The secret you generated for your client in Mix--server_url: The host name of the NVC service, usuallytts.api.nuance.co.uk--token: The environment variable containing your generated access token, in this example$MY_TOKEN- The OAuth scope,

tts, is included in the script, along with scopes for other Mix services.

Then use the shell script to add the options required for the type of resource you want to upload. See the following scenarios for details.

Upload user dictionary

Follow these steps to upload a user dictionary created in Nuance Vocalizer Studio. See Reference topics - User dictionary.

Make sure run-storage-client.sh contains your credentials as described in General options.

Add the arguments for uploading a user dictionary, for example:

./storage-client.py --server_url tts.api.nuance.co.uk --token $MY_TOKEN \ --upload --type user_dictionary --file coffee-dictionary.dcb \ --context_tag coffee_app --name coffee_dict \ --language en-us

Run the client using the script file to upload the user dictionary.

$ ./run-storage-client.sh 2021-05-20 11:38:36,060 INFO Type is User Dictionary 2021-05-20 11:38:36,205 INFO Done reading data 2021-05-20 11:38:36,474 INFO status { status_code: OK } uri: "urn:nuance-mix:tag:tuning:lang/coffee_app/coffee_dict/en-us/mix.tts?type=userdict

To use this dictionary in your synthesis requests, reference it using the URN. The type=userdict field is for information only and is not required as part of the reference.

Upload ActivePrompts

Follow these steps to upload an ActivePrompt database created in Nuance Vocalizer Studio. See Reference topics - ActivePrompt database.

Make sure run-storage-client.sh contains your credentials as described in General options.

Add the arguments for uploading an ActivePrompt database, for example:

./storage-client.py --server_url tts.api.nuance.co.uk --token $MY_TOKEN \ --upload --type activeprompt --file coffee-activeprompts.zip \ --context_tag coffee_app --name coffee_prompts \ --voice evan --voice_model enhanced --voice_version 1.0.0 \ --vocalizer_studio_version 3.4

Run the client using the script file to upload the ActivePrompt database.

$ ./run-storage-client.sh 2021-05-20 11:40:16,389 INFO Type is ActivePromptDB 2021-05-20 11:40:16,648 INFO Done reading data 2021-05-20 11:40:16,961 INFO status { status_code: OK } uri: "urn:nuance-mix:tag:tuning:voice/coffee_app/coffee_prompts/evan/mix.tts?type=activeprompt"

To use this ActivePrompt database in your synthesis requests, reference it using the URN. The type=activeprompt field is for information only and is not required as part of the reference.

Upload rulesets

Follow these steps to upload a text ruleset. (Binary, or encrypted, rulesets are not supported.) See Reference topics - Ruleset.

Make sure run-storage-client.sh contains your credentials as described in General options.

Add the arguments for uploading a text ruleset, for example:

./storage-client.py --server_url tts.api.nuance.co.uk --token $MY_TOKEN \ --upload --type text_ruleset --file coffee-ruleset.rst.txt \ --context_tag coffee_app --name coffee_rules \ --language en-us

Run the client using the script file to upload the ruleset.

$ ./run-storage-client.sh 2021-05-20 11:44:08,234 INFO Type is Text User Ruleset 2021-05-20 11:44:08,386 INFO Done reading data 2021-05-20 11:44:08,476 INFO status { status_code: OK } uri: "urn:nuance-mix:tag:tuning:lang/coffee_app/coffee_rules/en-us/mix.tts?type=textruleset"

To use this ruleset in your synthesis requests, reference it using the URN. The type=textruleset field is for information only and is not required as part of the reference.

Upload audio

Follow these steps to upload an audio wave file. See Reference topics - Audio file.

Make sure run-storage-client.sh contains your credentials as described in General options.

Add the arguments for uploading an audio file, for example:

./storage-client.py --server_url tts.api.nuance.co.uk --token $MY_TOKEN \ --upload --type wav --file greetings.wav \ --context_tag coffee_app --name audio_hi

Run the client using the script file to upload the audio file.

$ ./run-storage-client.sh 2021-05-20 11:53:55,761 INFO Type is Wav 2021-05-20 11:53:56,080 INFO Done reading data 2021-05-20 11:53:56,189 INFO status { status_code: OK } uri: "urn:nuance-mix:tag:tuning:audio/coffee_app/audio_hi/mix.tts?type=wav"

To use this audio recording in your synthesis requests, reference it using the URN. The type=wav field is for information only and is not required as part of the reference.

Delete resource

If you need to remove a resource from storage, include the --delete option and the resource URN.

Make sure run-storage-client.sh contains your credentials as described in General options.

Add the arguments for deleting a resource. For example, this removes a previously-uploaded ruleset:

./storage-client.py --server_url tts.api.nuance.co.uk --token $MY_TOKEN \ --delete \ --uri urn:nuance-mix:tag:tuning:lang/coffee_app/coffee_rules/en-us/mix.tts

Run the client using the script file to delete the resource.

$ ./run-storage-client.sh 2021-05-26 08:53:51,584 INFO status { status_code: OK }

The resource is removed from storage.

Reference topics

This section provides more information about topics in the gRPC APIs.

Status codes

An HTML status code is returned for all requests.

| Code | Message | Indicates |

|---|---|---|

| 200 | Success | Successful response. |

| 400 | Bad request | A malformed or unsupported client request was rejected. |

| 401 | Unauthenticated | Request could not be authorized. See Authorize. |

| 403 | Forbidden | A restricted voice was requested but you are not authorized to use it. |

| 413 | Payload too large | A synthesis request has exceeded the limits for voice switching. |

| 500 | Internal server error | An unknown error has occurred on the server. |

| 502 | Resource error | An error has occurred with a synthesis resource. |

Streamed vs. unary response

One request, two possible responses (from proto file)

service Synthesizer {

rpc Synthesize(SynthesisRequest) returns (stream SynthesisResponse) {}

rpc UnarySynthesize(SynthesisRequest) returns (UnarySynthesisResponse {}

. . .

message SynthesisRequest {

Voice voice = 1;

AudioParameters audio_params = 2;

Input input = 3;

EventParameters event_params = 4;

map<string, string> client_data = 5;

}

message SynthesisResponse {

oneof response {

Status status = 1;

Events events = 2;

bytes audio = 3;

}

}

message UnarySynthesisResponse {

Status status = 1;

Events events = 2;

bytes audio = 3;

}

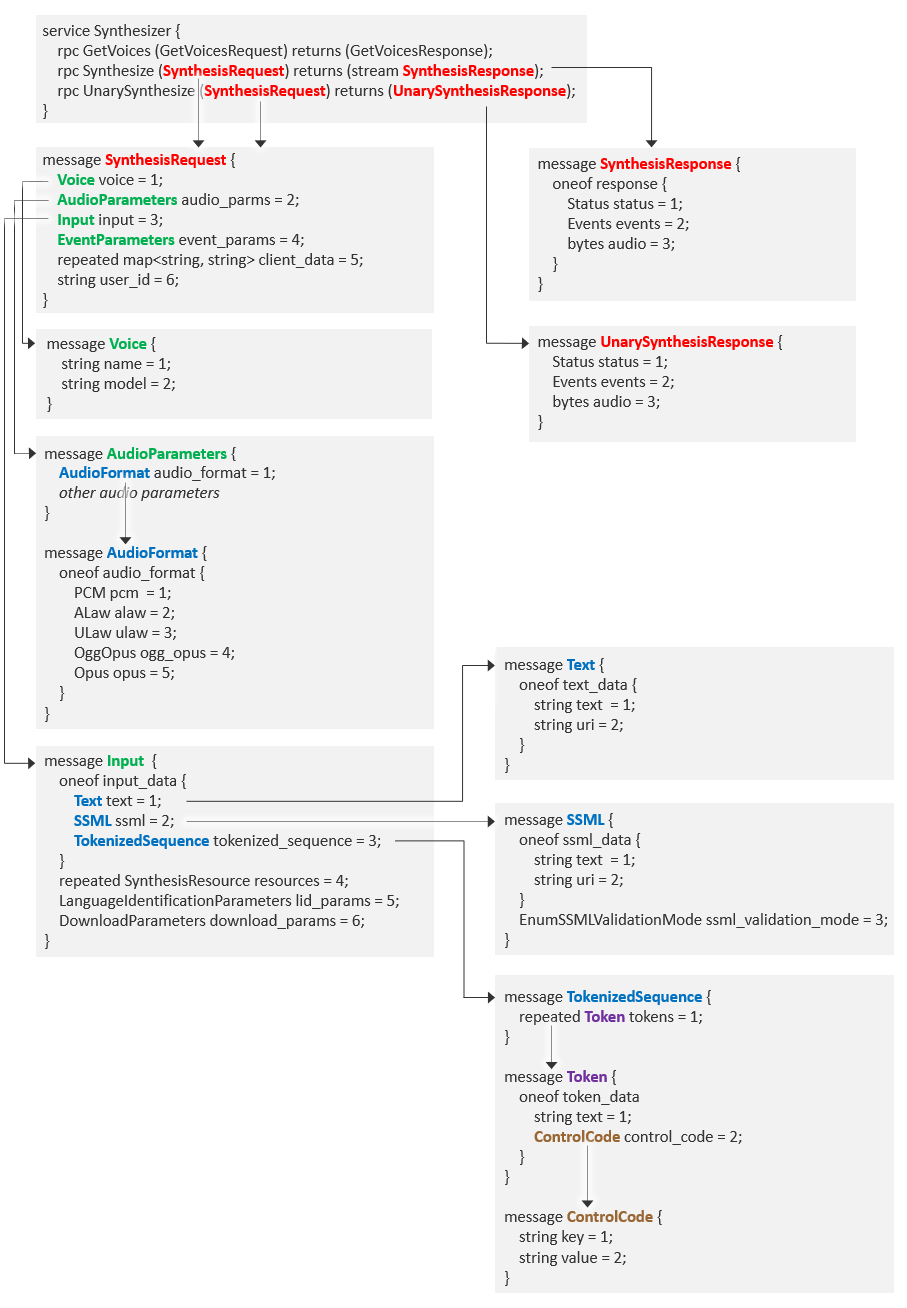

NVC offers two types of synthesis response: a streamed response available in SynthesisResponse and a non-streamed response in UnarySynthesisResponse.

The request is the same in both cases: SynthesisRequest specifies a voice, the input text to synthesize, and optional parameters. The response can be either:

SynthesisResponse: Returns one status message followed by multiple streamed audio buffers, each including the markers or other events specified in the request. Each audio buffer contains the latest synthesized audio.

UnarySynthesisResponse: Returns one status message and one audio buffer, containing all the markers and events specified in the request. The underlying NVC engine caps the audio response size.

See Run client for unary response to run the sample Python client with a unary response, activated by a command line flag.

Defaults

The proto file provides the following defaults for messages in SynthesisRequest. Mandatory fields are shown in bold.

| Items in SynthesisRequest | Default | |||

|---|---|---|---|---|

| voice (Voice) | ||||

| name | Mandatory, e.g. Evan | |||

| model | Mandatory, e.g. enhanced | |||

| age_group (EnumAgeGroup) | ADULT | |||

| gender (EnumGender) | ANY | |||

| audio_params (AudioParameters) | ||||

| audio_format (AudioFormat) | PCM 22.5kHz | |||

| volume_percentage | 80 | |||

| speaking_rate_factor | 1.0 | |||

| audio_chunk_duration_ms | 20000 (20 seconds) | |||

| target_audio_length_ms | 0, meaning no maximum duration | |||

| disable_early_emission | False: Send audio segments as soon as possible | |||

| input (Input) | ||||

| text (Text) | Mandatory: one of text, tokenized_sequence, or ssmls | |||

| tokenized_sequence (TokenizedSequence) | ||||

| ssml (SSML) | ||||

| ssml_validation_mode (EnumSSMLValidationMode) | STRICT | |||

| escape_sequence (non-modifiable field) | \! and <ESC> | |||

| resources (SynthesisResource) | ||||

| type (EnumResourceType) | USER_DICTIONARY | |||

| lid_params (LanguageIdentificationParameters) | ||||

| disable | False: LID is turned on | |||

| languages | Empty, meaning use all available languages | |||

| always_use_ highest_confidence | False: Use highest language with any confidence score | |||

| download_params (DownloadParameters) | ||||

| headers | Empty | |||

| refuse_cookies | False: Accept cookies | |||

| request_timeout_ms | NVC server default, usually 30000 (30 seconds) | |||

| event_params (EventParameters) | ||||

| send_sentence_marker_events | False: Do not send | |||

| send_word_marker_events | False: Do not send | |||

| send_phoneme_marker_events | False: Do not send | |||

| send_bookmark_marker_events | False: Do not send | |||

| send_paragraph_marker_events | False: Do not send | |||

| send_visemes | False: Do not send | |||

| send_log_events | False: Do not send | |||

| suppress_input | False: Include text and URIs in logs | |||

| client_data | Empty | |||

| user_id | Empty | |||

Input to synthesize

Plain text input

SynthesisRequest (

voice = Voice (

name = "Evan",

model = "enhanced"),

input.text.text = "Your order will be ready to pick up in 45 minutes."

)

SSML input containing plain text only

SynthesisRequest (

voice = Voice (

name = "Evan",

model = "enhanced"),

input = Input (

ssml = SSML (

text = "<speak>It's 24,901 miles around the earth, or 40,075 km.</speak>",

ssml_validation_mode = WARN)

)

)

SSML input containing text and SSML elements to change the volume

SynthesisRequest (

voice = Voice (

name = "Evan",

model = "enhanced"),

input = Input (

ssml = SSML (

text = '<speak><prosody volume="10">I can speak rather quietly,</prosody>

<prosody volume="90">But also very loudly.</prosody></speak>',

ssml_validation_mode = WARN)

)

)

Tokenized sequence input

SynthesisRequest (

voice = Voice (

name = "Evan",

model = "enhanced"),

input = Input (

tokenized_sequence = TokenizedSequence (

tokens = [

Token (text = "My name is "),

Token (control_code = ControlCode (key = "pause", value = "300")),

Token (text = "Jeremiah Jones") ]

)

)

)

You provide the text for NVC to synthesize in the Input message. It can be plain text, SSML code, or a sequence of plain text and Nuance control codes.

If you are using the Sample synthesis client, enter the different types of input as request.input lines in the input file, flow.py. (When flow.py contains multiple requests, it executes only the last uncommented section.) For example, using an American English voice:

Plain text input, synthesized as: "Your order will be ready to pick up in forty five minutes."

request.input.text.text = "Your order will be ready to pick up in 45 minutes."

SSML input, synthesized as "It’s twenty four thousand nine hundred one miles around the earth, or forty thousand seventy five kilometers."

request.input.ssml.text = "<speak>It's 24,901 miles around the earth, or 40,075 km.</speak>"

SSML input with elements, synthesized as "I can speak rather quietly, BUT ALSO VERY LOUDLY."

request.input.ssml.text = '<speak><prosody volume="10">I can speak rather quietly, </prosody><prosody volume="90">But also very loudly.</prosody></speak>'

Tokenized sequence of text and Nuance control codes, synthesized as: "My name is... Jeremiah Jones."

request.input.tokenized_sequence.tokens.extend([ Token(text="My name is "), Token(control_code=ControlCode(key="pause", value="300")), Token(text="Jeremiah Jones") ])

Another tokenized sequence, synthesized as: "The time and date is: ten o'clock, May twenty-sixth, two thousand twenty. My phone number is: one eight hundred, six eight eight, zero zero six eight."

request.input.tokenized_sequence.tokens.extend([ Token(text="The time and date is."), Token(control_code=ControlCode(key="tn", value="time")), Token(text="10:00"), Token(control_code=ControlCode(key="pause", value="300")), Token(control_code=ControlCode(key="tn", value="date")), Token(text="05/26/2020"), Token(control_code=ControlCode(key="pause", value="300")), Token(text="My phone number is."), Token(control_code=ControlCode(key="tn", value="phone")), Token(text="1-800-688-0068") ])

SSML tags

Generic example, with optional elements omitted

<speak>Text before SSML element.

<prosody volume="10">Text following or affected by SSML element code.</prosody>

</speak>

Optional elements may be included without error

<?xml version="1.0"?>

<speak xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="en-US" version="1.0">

Text before SSML element.

<prosody volume="10">Text following or affected by SSML element code.</prosody>

</speak>

Examples using flow.py with sample client

# You can enclose the SSML in double quotes with single quotes inside

request.input.ssml.text = "<speak>It's easy. Take a deep breath, pause for a second or two <break time='1500ms'/> and then exhale slowly.</speak>"

# Or vice versa, escaping any apostrophes

request.input.ssml.text = '<speak>It\'s easy. Take a deep breath, pause for a second or two <break time="1500ms"/> and then exhale slowly.</speak>'

# Or enclose in three single (or double) quotes for multiline text

request.input.ssml.text = '''<speak>It's easy.

Take a deep breath, pause for a second or two

<break time="1500ms"/> and then exhale slowly.

</speak>'''

SSML elements may be included when using the input type Input - SSML. These tags indicate how the text segments within the tag should be spoken.

See Control codes to accomplish the same type of control in tokenized sequence input.

NVC supports the following SSML elements and attributes in SSML input. For details about these items, see SSML Specification 1.0. Note that NVC does not support all SSML elements and attributes listed in the W3C specification.

Switching voice and/or language

You can change the voice and/or the language of the speaker within SSML input, using several methods. These elements change to a voice with a different language:

And this control code changes the language in a multilingual voice:

langwith escape codes

xml

xml (optional) and speak (with optional attributes)

<speak>Input text and tags</speak>

Optional elements may be included if wanted

<?xml version="1.0"?>

<speak xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="en-US" version="1.0">

Input text and tags</speak>

An XML declaration, specifying the XML version, 1.0.

In NVC, this element is optional. If omitted, NVC adds it automatically.

speak

The root SSML element. Mandatory. It contains the required attributes, xml:lang and version, and encloses text to be synthesized along with optional elements shown below. The xml:lang attribute sets the base language for the synthesis.

In NVC, the attributes of this element are optional: only <speak> is required. If the attributes are omitted, NVC adds them automatically to the speak element.

Optional attributes may be specified if wanted. If you include the language with xml:lang, it must match the language of the principal voice.

audio

Audio file in cloud storage via URN

<speak>Please leave your name after the tone.

<audio src="urn:nuance-mix:tag:tuning:audio/coffee_app/beep/mix.tts" />

</speak>

Audio file via secure URL

<speak>Please leave your name after the tone.

<audio src="https://mtl-host42.nuance.co.uk/audio/beep.wav" />

</speak>

The audio element inserts a digital audio recording at the current location. The src attribute specifies the location of the recording as either:

A URN in Mix cloud storage. Use the Storage API to upload the audio file. See Sample storage client - Upload audio.

A secure URL. The file must be a WAV file on a web server accessed through a secure (https) URL, with a valid TLS certificate.

Alternative text

Alternative text for URN or URL access

<speak>Please leave your name after the tone.

<audio src="urn:nuance-mix:tag:tuning:audio/coffee_app/beep/mix.tts">Beep</audio>

</speak>

<speak>Please leave your name after the tone.

<audio src="https://mtl-host42.nuance.co.uk/audio/beep.wav">Beep</audio>

</speak>

For both URN and URL access, you may include alternative text in the <audio> element. If the audio file cannot be found or is not a WAV file, NVC synthesizes the alternative text and includes it in the results. In these examples, if the audio file is unavailable, the synthesis results are: "Please leave your name after the tone. Beep."

Without the alternative text, NVC reports an error if the file is not a WAV file or is not accessed through a URN or an https URL.

WAV format

NVC supports WAV files containing 16-bit PCM samples.

break

break

<speak>His name is <break time="300ms"/> Michael. </speak>

<speak>Tom lives in New York City. So does John. He\'s at 180 Park Ave. <break strength="none"/> Room 24.</speak>

The break element controls pausing between words, overriding the default breaks based on punctuation in the text. The break tag has two optional attributes:

timespecifies the duration of the break as seconds (1s) or milliseconds (300ms).strengthspecifies a keyword to indicate the duration of the break: none, x-weak, weak, medium (default), strong, or x-strong.break strength="none"can prevent a pause (caused by a comma or period, for example) that would otherwise occur.

These examples are read as: "His name is... Michael" and "Tom lives in New York City. So does John. He’s at one hundred eighty Park Avenue room twenty four." Notice there's no break between "Park Avenue" and "room twenty four."

lang

lang using escape code, in input flow file

request.voice.name = "Zoe-Ml"

request.voice.model = "enhanced"

request.input.ssml.text = "<speak>Hello and welcome to \!\lang=fr-CA\ St-Jean-sur-Richelieu \!\lang=normal\. </speak>"

When used in SSML with a multilingual (-Ml) voice, the lang control code switches to another language supported by the voice. This example uses Zoe-Ml, defined with two languages apart from American English.

voices {

name: "Zoe-Ml"

model: "enhanced"

language: "en-us"

. . .

foreign_languages: "es-mx"

foreign_languages: "fr-ca"

}

In this example, Zoe starts in her English voice, then switches to her French voice to read "St-Jean-sur-Richelieu" using French pronunciation. When lang is used with a non-multilingual voice, the text is pronounced using the voice's base language.

This code is supported in SSML using escape code format, as shown.

mark

mark

<speak>This bookmark <mark name="bookmark1"/> marks a reference point.

Another <mark name="bookmark2"/> does the same.</speak>

The mark element inserts a bookmark that is returned in the results. The value can be any string.

p

p

<speak><p>Welcome to Vocalizer.</p>

<p>Vocalizer is a state-of-the-art text to speech system.</p></speak>

p with change to a Spanish voice

<speak>Say English for an English message.

<p xml:lang="es-MX">O decir español para un mensaje en español.</p></speak>

The p element indicates a paragraph break. A paragraph break is equivalent to break strength="x-strong".

The optional xml:lang attribute switches to a voice whose base language is the locale specified. It does not use a foreign language of the current voice. If possible, the same gender as the original voice is used.

In the second scenario, installed voices include en-US voices, Evan and Zoe-Ml, as well as es-MX voices, Javier and Paulina-Ml. In this example, Evan reads the English text, then Javier reads the Spanish. When starting with Zoe-Ml, the female Paulina-Ml voice is selected as the Spanish voice.

prosody

The prosody element specifies intonation in the generated voice using several attributes. You may combine multiple attributes within the same prosody element.

prosody - pitch

prosody - pitch

<speak>Hi, I\'m Zoe. This is the normal pitch and timbre of my voice.

<prosody pitch="80" timbre="90">But now my voice sounds lower and richer.</prosody></speak>

Prosody pitch changes the speaking voice to sound lower (lower values) or higher (higher values). Not supported for all languages. The value is a keyword, a number (50-200, default is 100), or a relative percentage (+/-n%). The keywords are:

- x-low (-30%)

- low (-15%)

- medium (0%)

- default (0%)

- high

- x-high

You may combine pitch, rate, and timbre for more precise results. For example, pitch and timbre values of 80 or 90 for a female voice give a more neutral voice.

prosody - rate

prosody - rate

<speak>This is my normal speaking rate.

<prosody rate="+50%"> But I can speed up the rate.</prosody>

<prosody rate="-25%"> Or I can slow it down.</prosody></speak>

Prosody rate sets the speaking rate as a keyword, a number (0-100), or a relative percentage (+/-n%). The keywords are:

- x-slow (-50%)

- slow (-30%)

- medium (0%)

- fast (+60%)

- x-fast (+150%)

prosody - timbre

prosody - timbre

<speak>This is the normal timbre of my voice.

<prosody timbre="young"> I can sound a bit younger. </prosody>

<prosody timbre="old" rate="-10%"> Or older and hopefully wiser. </prosody></speak>

Prosody timbre changes the speaking voice to sound bigger and older (lower values) or smaller and younger (higher values). Not supported for all languages. The value is a keyword, a number (50-200, default is 100), or a relative percentage (+/-n%). The keywords are:

- x-young (+35%)

- young (+20%)

- medium (0%)

- default (0%)

- old (-20%)

- x-old (-35%)

prosody - volume

prosody - volume

<speak>This is my normal speaking volume.

<prosody volume="-50%">I can also speak rather quietly,</prosody>

<prosody volume="+50%"> or also very loudly.</prosody></speak>

Prosody volume changes the speaking volume. The value is a keyword, a number (0-100), or a relative percentage (+/-n%). The keywords are: silent, x-soft, soft, medium (default), loud, or x-loud.

s

s

<speak><s>The wind was a torrent of darkness, among the gusty trees</s>

<s>The moon was a ghostly galleon, tossed upon cloudy seas</s></speak>

p with change to a French Canadian voice

<speak>The name of the song is <s xml:lang="fr-CA"> Je ne regrette rien.</s></speak>

The s element indicates a sentence break. A sentence break is equivalent to break strength="strong".

The optional xml:lang attribute works as for the p element. In this example, it switches to a fr-CA voice to say the name of the song.

say-as

say-as

<speak>My address is: <say-as interpret-as="address">Apt. 17, 28 N. Whitney St., Saint Augustine Beach, FL 32084-6715</say-as></speak>

<say-as interpret-as="currency">12USD</say-as>

<say-as interpret-as="date">11/21/2020</say-as>

<say-as interpret-as="name">Care Telecom Ltd</say-as>

<say-as interpret-as="name">King Richard III</say-as>

<say-as interpret-as="ordinal">12th</say-as>

<say-as interpret-as="phone">1-800-688-0068</say-as>

<say-as interpret-as="raw">app.</say-as>

<say-as interpret-as="sms">CU :-)</say-as>

<say-as interpret-as="spell" format="alphanumeric">a34y - 347</say-as>

<say-as interpret-as="spell" format="strict">a34y - 347</say-as>

<say-as interpret-as="state">FL</say-as>

<say-as interpret-as="streetname">Emerson Rd.</say-as>

<say-as interpret-as="streetnumber">11001-11010</say-as>

<say-as interpret-as="time">10:00</say-as>

<say-as interpret-as="zip">01803</say-as>

The say-as element controls how to say specific types of text, using the interpret-as attribute to specify a value and (in some cases) a format. A wide range of input is accepted for most values. The values are:

address: Provides optimal reading for complete postal addresses. For example, "Apt. 17, 28 N. Whitney St., Saint Augustine Beach, FL 32084-6715" is read as "apartment seventeen, twenty eight north Whitney street, Saint Augustine Beach, Florida three two zero eight four six seven one five."

currency: Reads text as currency. For example, "123.45USD" is read as "one hundred twenty three U S dollars and forty five cents."

date: Reads text as a date. For example, "11/21/2020" is read as "November twenty-first, two thousand twenty." The

formatattribute is ignored for date values. It may be specified without error but has no effect.The precise date output is determined by the voice, and ambiguous dates are interpreted according to the conventions of the voice's locale. For example, "05/12/2020" is read by an American English voice as "May twelfth two thousand twenty" and by a British English voice as "the fifth of December two thousand and twenty."

name: Gives correct reading of names, including personal names with roman numerals, such as Pius IX (read as "Pius the ninth"), John I ("John the first"), and Richard III ("Richard the third"). The name must be capitalized but the roman numeral may be in upper or lowercase (III or iii). Do not add a punctuation mark immediately following the roman numeral.

ordinal: Reads positional numbers such as 1st, 2nd, 3rd, and so on. For example, "12th" is read as "twelfth."

phone: Reads telephone numbers. For example, "1-800-688-0068" is read as "One, eight hundred, six eight eight, zero zero six eight."

raw: Provides a literal reading of the text, such as blocking undesired abbreviation expansion. It operates principally on the abbreviations and acronyms but may impact the surrounding text as well.

sms: Gives short message service (SMS) reading. For example, "ttyl, James, :-)" is read as "Talk to you later, James, smiley happy."

spell format=alphanumeric: Spells out all alphabetic and numeric characters, but does not read white space, special characters, and punctuation marks. This is how items are spoken with and without this tag, in American English.

Input With spell - alphanumeric Without spell - alphanumeric a34y - 347 A three four Y three four seven a thirty-four y three hundred forty-seven 12345 one two three four five twelve thousand three hundred forty-five Smythe capital S M Y T H E smith spell format=strict: Spells out all characters, including white space, special characters, and punctuation marks. For example, "a34y - 347" is pronounced "A three four Y space hyphen space three four seven."

For both types of spelling, accented and capital characters are indicated. For example: "café" is spoken as "C A F E acute" and "Abc" is spoken as "capital A B C."

state: Expands and pronounces state, city, and province names and abbreviations, as appropriate for the locale. For example, "FL" is read as "Florida." Not supported for all languages.

streetname: Reads street names and abbreviations. For example, "Emerson Rd." is prounounced "Emerson road." Not supported for all languages.

streetnumber: Reads street numbers. For example, "11001-11010" is read as "eleven oh oh one to eleven oh ten." Not supported for all languages.

time: Gives a time of day reading. For example, "10:00" is pronounced "ten o'clock." The

formatattribute is ignored for time values. It may be specified without error but has no effect.zip: Reads US zip codes. Supported for American English only.

style

style

<speak>Hello, this is Samantha. <style name="lively">Hope you’re having a nice day!</style></speak>

<speak>Hello, this is Samantha. <style name="lively">Hope you’re having a nice day!</style>

<voice name="nathan">Hello, this is Nathan.</voice></speak>

The style element sets the speaking style of the voice. Values for name depend on the voice but are usually neutral, lively, forceful, and apologetic. The default depends on the voice. If you request a style that the voice does not support, there is no effect.

The first example reads "Hello, this is Samantha" in Samantha's default style, then switches to lively style to say "Hope you're having a nice day!"

The style resets to default at the end of the synthesis request or if it encounters a change of voice. The second example continues with Nathan in default style saying "Hello, I am Nathan."

voice

voice

<speak><voice name="samantha">Hello, this is Samantha. </voice>

<voice name="tom">Hello, this is Tom.</voice></speak>

This voices changes to a French voice, Audrey-Ml

<speak>Hi, my name is Zoe.

<voice name="Audrey-Ml">Bonjour, je m\'appelle Audrey.</voice></speak>

The voice element changes the speaking voice, which also forces a sentence break. Values for name are the voices available to the session.

If you specify a voice with another language, the text is spoken using that language.

Control codes

Tokenized sequence structure

SynthesisRequest - Input - TokenizedSequence -

Token (text = "Text before control code"),

Token (control_code=ControlCode (key="code name", value="code value")),

Token (text = "Text following or affected by control code")

Example using flow.py with sample client

request.input.tokenized_sequence.tokens.extend([

Token (text = "My name and address is: "),

Token (control_code = ControlCode (key = "tn", value = "name")),

Token (text = "Aardvark & Sons Co. Inc.,"),

Token (control_code = ControlCode (key = "tn", value = "address")),

Token (text = "123 E. Forest Ave., Portland, ME 04103"),

Token (control_code = ControlCode (key = "tn", value = "normal"))

])

Control codes, sometimes known as control sequences, may be included in the input text when using the input type Input - TokenizedSequence. These codes indicate how the text segments following the code should be spoken.

See Input to synthesize for an example using the sample client.

See SSML tags to accomplish the same types of control in SSML input.

Nuance supports the following control codes and values in TokenizedSequence.

audio

Audio file in cloud storage via URN

Token (text = "Please leave your name after the tone. "),

Token (control_code = ControlCode (key = "audio",

value = "urn:nuance-mix:tag:tuning:audio/coffee_app/beep/mix.tts"))

Audio file from URL

Token (text = "Please leave your name after the tone. "),

Token (control_code = ControlCode (key = "audio",

value = "https://mtl-host42.nuance.co.uk/audio/beep.wav"))

The audio code inserts a digital audio recording at the current location. The value attribute specifies the location of the recording, as either:

A URN in Mix cloud storage. Use the Storage API to upload the audio file. See Sample storage client - Upload audio.

A secure URL. The file must be a WAV file on a web server accessed through a secure (https) URL, with a valid TLS certificate.

NVC supports WAV files containing 16-bit PCM samples.

If the audio file cannot be found or is not a WAV file, NVC reports an error. With the Synthesize method, NVC synthesizes any text tokens in the sequence but does not download or include the file. In these examples, if the audio file is unavailable, the results are only: "Please leave your name after the tone."

With UnarySynthesize, NVC does not synthesize anything and simply reports an error.

eos

eos

Token (text = "Tom lives in the U.S."),

Token (control_code=ControlCode (key="eos", value="1")),

Token (text = "So does John. 180 Park Ave."),

Token (control_code=ControlCode (key="eos", value="0")),

Token (text = "Room 24")

The eos code controls end-of-sentence detection. Values are:

- 1: Forces a sentence break.

- 0: Suppresses a sentence break. To suppress a sentence break, eos 0 must appear immediately after the symbol (such as a period) that triggers the break.

To disable automatic end-of-sentence detection for a block of text, use readmode explicit_eos.

lang

lang with unknown

Token (text = "The name of the song is."),

Token (control_code=ControlCode (key="lang", value="unknown")),

Token (text = "Au clair de la lune."),

Token (control_code=ControlCode (key="lang", value="normal")),

Token (text = "It's a folk song meaning, in the light of the moon.")

lang with specific language

Token (text = "Hello and welcome to the city of "),

Token (control_code=ControlCode (key="lang", value="fr-CA")),

Token (text = "St-Jean-sur-Richelieu."),

Token (control_code=ControlCode (key="lang", value="normal"))

The lang code labels text identified as from an unknown language, or a specific language. Values are:

- normal: The current voice language.

- unknown: Any other language.

- xx-XX: A specific language

The value lang unknown labels all text from that position (up to a lang normal or the end of input) as being from an unknown language. NVC then uses its language identification feature on a sentence-by-sentence basis to determine the language, and switches to a voice for that language if necessary. The original voice is restored at the next lang normal or the end of the synthesis request.

See LanguageIdentificationParameters.

Language identification is only supported for a limited set of languages.

When used with a multilingual (-Ml) voice, the lang code switches to another language supported by the voice. This example uses Zoe-Ml, defined with two languages apart from American English.

voices {

name: "Zoe-Ml"

model: "enhanced"

language: "en-us"

. . .

foreign_languages: "es-mx"

foreign_languages: "fr-ca"

}

In this example, the voice reads "St-Jean-sur-Richelieu" using French pronunciation, while the rest of the sentence is in English. When the lang code is used with a non-multilingual voice, "St-Jean-sur-Richelieu" is pronounced using the voice's base language.

mrk

mrk

Token (control_code=ControlCode (key="mrk", value="important")),

Token (text = "This is an important point. ")

The mrk code inserts a bookmark that is returned in the results. The value can be any name.

pause

pause

Token (text = "My name is "),

Token (control_code=ControlCode (key="pause", value="300")),

Token (text = "Jeremiah Jones. ")

The pause code inserts a pause of a specified duration in milliseconds. Values from 1 to 65,535.

para

para

Token (text = "Introduction to Vocalizer"),

Token (control_code=ControlCode (key="para")),

Token (text = "Vocalizer is a state-of-the-art text-to-speech system.")

The para code indicates a paragraph break and implies a sentence break. The difference between this and eos 1 (end of sentence) is that this triggers the delivery of a paragraph mark event.

pitch

pitch

Token (text = "Hi I'm Zoe. This is the normal pitch and timbre of my voice."),

Token (control_code=ControlCode (key="pitch", value="80")),

Token (control_code=ControlCode (key="timbre", value="90")),

Token (text = "But now my voice sounds lower and richer.")

The pitch code changes the speaking voice to sound lower (lower values) or higher (higher values). Values are between 50 and 200, and 100 is typical.